Fermilab's new August 10, 2023 paper and its abstract describe its latest, improved measurement of muon g-2:

The new paper doesn't delve into the theoretical prediction issues, even to the depth addressed in today's live-streamed presentation. It says only:

A comprehensive prediction for the Standard Model value of the muon magnetic anomaly was compiled most recently by the Muon g−2 Theory Initiative in 2020 [20], using results from [21–31]. The leading order hadronic contribution, known as hadronic vacuum polarization (HVP), was taken from e+e−→hadrons cross section measurements performed by multiple experiments. However, a recent lattice calculation of HVP by the BMW collaboration [30] shows significant tension with the e+e− data. Also, a new preliminary measurement of the e+e−→π+π− cross section from the CMD-3 experiment [32] disagrees significantly with all other e+e− data. There are ongoing efforts to clarify the current theoretical situation [33].

While a comparison between the Fermilab result from Run-1/2/3 presented here, aµ(FNAL), and the 2020 prediction yields a discrepancy of 5.0σ, an updated prediction considering all available data will likely yield a smaller and less significant discrepancy.

This result is 5 sigma from the Standard Model prediction in the 2020 White Paper, which is partially based on experimental data. But it is much closer to the 2020 BMW lattice QCD based prediction, which is 1.8 sigma from the experimental result (i.e., consistent at the 2 sigma level) and which has been corroborated by essentially all other partial lattice QCD calculations since the last announcement. It is even closer to a prediction made using only the subset of the data in the partially data-based prediction that lies closest to the experimental result.

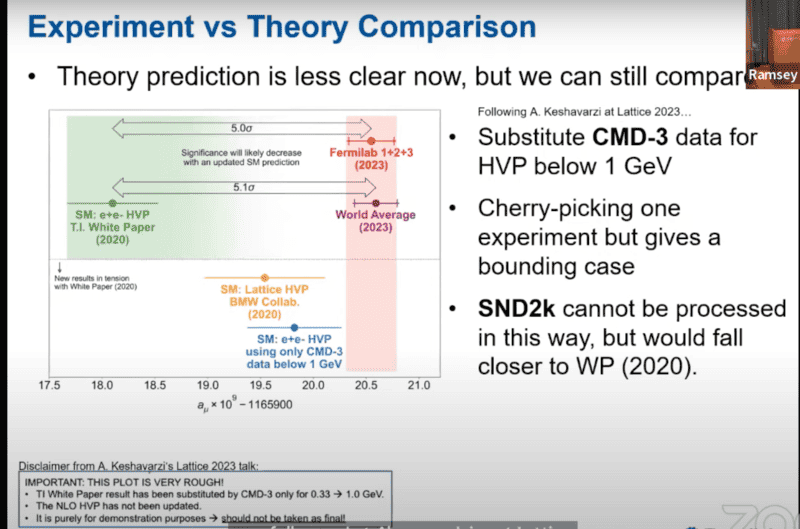

This is shown in the YouTube screen shot from their presentation this morning (below):

As the screenshot makes visually very clear, there is now much more uncertainty in the theoretical Standard Model prediction of muon g-2 than in the experimental measurement itself.

For those of you who aren't visual learners (values of aµ in units of 10^-11, with one-sigma uncertainties in parentheses):

World Experimental Average (2023): 116,592,059(22)

Fermilab Run 1+2+3 data (2023): 116,592,055(24)

Fermilab Run 2+3 data (2023): 116,592,057(25)

Combined measurement (2021): 116,592,061(41)

Fermilab Run 1 data (2021): 116,592,040(54)

Brookhaven's E821 (2006): 116,592,089(63)

Theory Initiative calculation: 116,591,810(43)
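The list above can be cross-checked with a little arithmetic. The following is a minimal Python sketch, assuming the measurements are independent with Gaussian errors (the official combination also handles correlations, which this ignores). It reproduces the world average from the Fermilab Run 1+2+3 and Brookhaven values using an inverse-variance weighted mean, then expresses the gap from the Theory Initiative value in units of combined sigma. The function names `combine` and `tension` are illustrative, not from any published analysis code.

```python
import math

# a_mu values in units of 1e-11, from the list above: (central value, 1-sigma uncertainty)
FNAL_RUN123 = (116_592_055, 24)
BNL_E821 = (116_592_089, 63)
THEORY_2020 = (116_591_810, 43)


def combine(*measurements):
    """Inverse-variance weighted mean of measurements assumed to be independent."""
    weights = [1.0 / sigma**2 for _, sigma in measurements]
    mean = sum(w * value for w, (value, _) in zip(weights, measurements)) / sum(weights)
    return mean, 1.0 / math.sqrt(sum(weights))


def tension(a, b):
    """Absolute difference between two results in units of their combined sigma."""
    (value_a, sigma_a), (value_b, sigma_b) = a, b
    return abs(value_a - value_b) / math.hypot(sigma_a, sigma_b)


world = combine(FNAL_RUN123, BNL_E821)
print(f"world average: {world[0]:.0f}({world[1]:.0f})")
print(f"FNAL Run 1+2+3 vs 2020 theory: {tension(FNAL_RUN123, THEORY_2020):.1f} sigma")
print(f"world average vs 2020 theory: {tension(world, THEORY_2020):.1f} sigma")
print(f"theory / experiment uncertainty: {THEORY_2020[1] / world[1]:.1f}")
```

With these inputs, the weighted mean comes out at 116,592,059(22), matching the listed world average. The gaps are about 5.0 sigma for Fermilab alone (as in the paper) and about 5.1 sigma for the world average, and the uncertainty ratio of roughly 1.9 is the "twice as precise" comparison.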

It is important to note that every single experiment and every single theoretical prediction agrees on 116,592 times 10^-8 when rounded to the nearest 10^-8 (the raw value of g, before conversion to the g-2 form, is 2.00233184, which has nine significant digits). The spread from the highest best fit experimental value to the lowest best fit theoretical prediction is only 279 times 10^-11, which is equivalent to plus or minus two sigma, with a sigma of about 70 times 10^-11, around the midpoint of that range. So, all of the experimental and theoretical values are ultra-precise.
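The rounding and spread claims can be checked the same way. This is a short sketch using the values listed above (in units of 10^-11) and the standard relation g = 2(1 + aµ); the dictionary labels are just shorthand for the list entries.

```python
# a_mu values in units of 1e-11, from the list above
values = {
    "World average (2023)": 116_592_059,
    "Fermilab Run 1+2+3 (2023)": 116_592_055,
    "Fermilab Run 2+3 (2023)": 116_592_057,
    "Combined (2021)": 116_592_061,
    "Fermilab Run 1 (2021)": 116_592_040,
    "Brookhaven E821 (2006)": 116_592_089,
    "Theory Initiative (2020)": 116_591_810,
}

# Rounding to the nearest 1e-8 means dividing the 1e-11 units by 1000
rounded = {name: round(v / 1000) for name, v in values.items()}
print("all agree at 1e-8:", set(rounded.values()))

# g = 2 * (1 + a_mu); shown here for the world average
g_world = 2 * (1 + values["World average (2023)"] * 1e-11)
print(f"g = {g_world:.8f}")

# Spread from the highest value (an experiment) to the lowest (the 2020 prediction)
spread = max(values.values()) - min(values.values())
print("spread:", spread, "-> +/- 2 sigma with sigma of about", spread / 4)
```

Every entry rounds to 116,592, g prints as 2.00233184, and the 279 spread corresponds to a sigma of 69.75, i.e. about 70 times 10^-11.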

The experimental value is already twice as precise as the theoretical prediction of its value in the Standard Model, and is expected to ultimately be about four times more precise than the current best available theoretical predictions, as illustrated below.

The completed Runs 4 and 5, and the in-progress Run 6, are expected to reduce the uncertainty in the experimental measurement by about 50% over the next two or three years, mostly thanks to Run 4, which should release its results sometime around October 2024. The additional experimental precision anticipated from Runs 5 and 6 is expected to be fairly modest.

It is likely that the stated uncertainty in the 2020 White Paper result is too low, quite possibly because of understated systematic error in some of the underlying electron-positron collision data on which it relies. The introduction to the CMD-3 paper also identifies a problem with the quality of the data that the Theory Initiative relies upon:

The π+π− channel gives the major part of the hadronic contribution to the muon anomaly, 506.0 ± 3.4 × 10^-10 out of the total aµ(HVP) = 693.1 ± 4.0 × 10^-10 value. It also determines (together with the light-by-light contribution) the overall uncertainty Δaµ = ±4.3 × 10^-10 of the standard model prediction of muon g−2 [5].

To conform to the ultimate target precision of the ongoing Fermilab experiment [16,17], Δaµ(exp) [E989] ≈ ±1.6 × 10^-10, and the future J-PARC muon g-2/EDM experiment [18], the π+π− production cross section needs to be known with the relative overall systematic uncertainty about 0.2%.

Several sub-percent precision measurements of the e+e−→π+π− cross section exist. The energy scan measurements were performed at the VEPP-2M collider by the CMD-2 experiment (with the systematic precision of 0.6–0.8%) [19,20,21,22] and by the SND experiment (1.3%) [23]. These results have somewhat limited statistical precision. There are also measurements based on the initial-state radiation (ISR) technique by KLOE (0.8%) [24,25,26,27], BABAR (0.5%) [28] and BES-III (0.9%) [29]. Due to the high luminosities of these e+e− factories, the accuracy of the results from the experiments are less limited by statistics, meanwhile they are not fully consistent with each other within the quoted systematic uncertainties.

One of the main goals of the CMD-3 and SND experiments at the new VEPP-2000 e+e− collider at BINP, Novosibirsk, is to perform the new high precision high statistics measurement of the e+e−→π+π− cross section.

Recently, the first SND result based on about 10% of the collected statistics was presented with a systematic uncertainty of about 0.8% [30].
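The figures in the CMD-3 introduction quoted above show why the 0.2% benchmark matters. A minimal sketch, using only those quoted values in units of 10^-10; comparing the π+π− uncertainty directly against the E989 target is a simplification for illustration.

```python
# Figures quoted from the CMD-3 introduction, in units of 1e-10
PIPI, PIPI_ERR = 506.0, 3.4  # pi+ pi- channel contribution
HVP = 693.1                  # total leading-order HVP contribution
E989_TARGET = 1.6            # ultimate Fermilab experimental uncertainty

share = PIPI / HVP              # fraction of HVP coming from pi+ pi-
rel_err_now = PIPI_ERR / PIPI   # present relative uncertainty on pi+ pi-
err_at_target = 0.002 * PIPI    # absolute uncertainty at 0.2% relative precision

print(f"pi+pi- share of HVP: {share:.0%}")
print(f"current relative uncertainty: {rel_err_now:.2%}")
print(f"uncertainty at 0.2%: {err_at_target:.2f}e-10 vs E989 target {E989_TARGET}e-10")
```

The π+π− channel is about 73% of HVP. At its present relative uncertainty of about 0.67%, it alone contributes 3.4 × 10^-10, roughly twice the E989 target; at 0.2% that would drop to about 1.0 × 10^-10, below the target.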

In short, there is no reason to doubt that the Fermilab measurement of muon g-2 is every bit as solid as claimed, but the various calculations of the predicted Standard Model value of the QCD part of muon g-2 are in strong tension with each other.

It appears that the correct Standard Model prediction is closer to the experimental result than the 2020 White Paper calculation (which used lattice QCD for some parts of the calculation and experimental data in lieu of QCD calculations for other parts), although the exact source of the issue is only starting to be pinned down.

Side Point: The Hadronic Light By Light Calculation

The hadronic QCD component is the sum of two parts, the hadronic vacuum polarization (HVP) and the hadronic light-by-light (HLbL) components. In the Theory Initiative analysis, the total QCD contribution is 6937(44) x 10^-11, broken out as HVP = 6845(40), a 0.6% relative error, and HLbL = 92(18), a 20% relative error.
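The two hadronic pieces reproduce the quoted total when their central values are added linearly and their uncertainties in quadrature. A minimal sketch, taking HLbL = 92(18) x 10^-11 (the 2020 value cited in the next paragraph) and assuming, as a simplification, that the HVP and HLbL uncertainties are uncorrelated:

```python
import math

# Theory Initiative (2020) hadronic contributions, in units of 1e-11: (value, uncertainty)
HVP = (6845, 40)
HLBL = (92, 18)


def add_uncorrelated(*terms):
    """Sum central values linearly and uncertainties in quadrature."""
    total = sum(value for value, _ in terms)
    error = math.sqrt(sum(err**2 for _, err in terms))
    return total, error


total, error = add_uncorrelated(HVP, HLBL)
print(f"hadronic total: {total}({error:.0f})")
for name, (value, err) in {"HVP": HVP, "HLbL": HLBL}.items():
    print(f"{name} relative error: {err / value:.1%}")
```

This gives 6937(44), with relative errors of about 0.6% for HVP and about 20% for HLbL.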

The presentation doesn't note it, but there was also an adjustment in the hadronic light-by-light calculation, bringing the result closer to the experimental value, which was announced on the same day as the previous data announcement. (Hadronic light-by-light is the smaller of the two QCD contributions to muon g-2 and wasn't included in the BMW calculation.) The new calculation increases the hadronic light-by-light contribution from 92(18) x 10^-11 to 106.8(14.7) x 10^-11.

As the precision of the measurements and of the calculations of the Standard Model prediction improves, a 14.8 x 10^-11 discrepancy in the hadronic light-by-light portion of the calculation becomes more material.
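One way to see why the shift matters is to compare it with the uncertainties discussed in this post. The sketch below uses the 2020 theory uncertainty (43), the 2023 world-average experimental uncertainty (22), and the E989 target of 1.6 × 10^-10 (16 × 10^-11) cited by CMD-3, all in units of 10^-11; treating the target as a direct comparison point is an assumption for illustration.

```python
# Hadronic light-by-light contribution, in units of 1e-11
HLBL_2020 = 92.0
HLBL_NEW = 106.8
shift = HLBL_NEW - HLBL_2020  # about 14.8

# Uncertainties to compare against, in units of 1e-11 (figures quoted in this post)
reference_sigmas = {
    "2020 theory prediction": 43,
    "2023 world experimental average": 22,
    "E989 target (1.6e-10)": 16,
}

for label, sigma in reference_sigmas.items():
    print(f"shift as a fraction of the {label} uncertainty: {shift / sigma:.2f}")
```

The shift is roughly a third of the 2020 theory uncertainty, but about two-thirds of today's experimental uncertainty and close to a full sigma at the E989 target.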

Why Care?

Muon g-2 is an experimental observable that implicates all three Standard Model forces, so it serves as a global test of the consistency of the Standard Model with experiment.

If there really were a five sigma discrepancy between the Standard Model prediction and the experimental result, this would imply new physics at fairly modest energies that could probably be reached at next generation colliders (since muon g-2 is an observable that is more sensitive to low energy new physics than high energy new physics).

On the other hand, if the Standard Model prediction and the experimental result are actually consistent with each other, then new non-gravitational physics at the low energies within reach of foreseeable high energy physics experiments is strongly disfavored, except in very specific forms whose contributions cancel out in a muon g-2 calculation.

Blog Format Only Editorial Commentary:

I have no serious doubt that when the dust settles, tweaks to hadronic vacuum polarization and hadronic light-by-light calculations will cause the theoretical prediction of the Standard Model expected value of muon g-2 to be consistent with the experimentally measured value, a measurement which might get as precise as 7 times 10^-11.

When, and not if, this happens, non-gravitational new physics that could contribute to muon g-2 via any Standard Model force will be almost entirely precluded, on a global basis, up to energies well in excess of the electroweak scale (ca. 246 GeV). Indeed, it will probably be a pretty tight constraint even up to energies of tens of TeV.

There will always be room for tiny "God of the gaps" modifications to the Standard Model in places in its parameter space where the happenstance of how our experiments are designed leaves little loopholes, but none will be supported by positive evidence and none will be well motivated.

The Standard Model isn't quite a completely solved problem.

Many of its parameters, particularly the quark masses and the neutrino physics parameters, need to be measured more precisely. We still don't really understand the mechanism for neutrino mass, although I very much doubt that either Majorana mass or a see-saw mechanism, the two leading proposals to explain it, is right. We still haven't confirmed the Standard Model predictions of glueballs or sphalerons. We still don't have a good, solid, predictive description of why we have the scalar mesons and axial vector mesons that we do, nor do we really understand their inner structure. There is plenty of room for improvement in how we do QCD calculations. Most of the free parameters of the Standard Model can probably be derived from a much smaller set of free parameters with just a small number of additional rules for determining them, but we haven't cracked that code yet. We are just on the brink of starting to derive the parton distribution functions of the hadrons from first principles, even though we know everything necessary to do so in principle. We still haven't fully derived the properties of atoms, nuclear physics, and neutron stars from the first principles of the Standard Model. We still haven't reworked the beta functions of the Standard Model's free parameters to reflect gravity in addition to the Standard Model particles. We still haven't solved the largely theoretical issues, which string theory sought to address, arising from the point particle approximation of particles in the Standard Model, in part because we haven't found any experimental data that points to a need to do so.

But let's get real.

What we don't know about Standard Model physics is mostly esoterica with no engineering applications. There is little room for any really significant breakthroughs in high energy physics left. The things we don't know mostly relate to ephemeral particles only created in high energy particle colliders, to the fine details of properties of ghostly neutrinos that barely interact with anything else, to a desire to make physics prettier, and to increased precision in measurements of physical constants that we already mostly know with adequate precision.

Our prospects for coming up with a "Grand Unified Theory" (GUT) of Standard Model physics, or a "Theory of Everything" (TOE), which was string theory's siren song for most of my life, look dim. But while such theories have aesthetic appeal, replacing a little book full of measured constants with a GUT or TOE that fits on a t-shirt and derives those constants from first principles wouldn't really change what we can do with the Standard Model.

The biggest missing piece of the laws of Nature, which does require real new physics or a major reimagining of existing laws of physics, is our understanding of phenomena attributed to dark matter and dark energy (and to a lesser extent cosmological inflation).

I am fairly confident that these can be resolved with subtle tweaks to General Relativity (like considering non-perturbative effects or imposing conformal symmetry), followed by the reformulation of this classical theory as a quantum gravity theory. Searches for dark matter particles will all come up empty. Whether or not Deur is right on that score, the solution to those problems will look a lot like his solution (if not something even more elegant, like emergent gravity).

Then, the final "dark era" of physics will be over, and we will have worked out all of the laws of Nature. Thus straitjacketed by a complete set of laws of Nature, we may finally develop a more sensible cosmology as well. Science will go on, but "fundamental physics" will become a solved problem and the universe will look comparatively simple again.

With a little luck, this could all be accomplished, if not in my lifetime, in the lives of my children or grandchildren - less than a century.

Many of these problems can be solved even without much more data, so long as we secure the quantum computing power and AI systems to finally overcome overwhelming calculation barriers in QCD and asymmetric systems in General Relativity. And, the necessary developments in quantum computing and AI are developments that are very likely to actually happen.

We could make a lot of progress, for example, by simply reverse engineering the proton and the neutron and a few other light hadrons, whose properties have been measured with exquisite precision, with improved QCD calculations that are currently too cumbersome for even our most powerful networks of supercomputers to crunch.

Myriad telescope-like instruments and powerful computer models are directed at the problems of dark matter and dark energy, generating and processing a torrent of new data. Sooner or later, if by no means other than sheer brute force, we ought to be able to solve these problems, and I feel like we've made great progress on them just in the last few decades.
