"I would lay down my life for two brothers or eight cousins." - J.B.S. Haldane

Saturday, February 28, 2015

Tuesday, February 24, 2015

A Theoretical Case For The Higgs Boson As A Composite Particle Made Of Gauge Bosons

The case for the Higgs boson as a gauge invariant scalar combination of electroweak gauge bosons is made in a preprint by F.J. Himpsel (of the University of Wisconsin at Madison Physics Department, who works primarily in condensed matter physics; another of his interesting ideas about fundamental physics, and additional explanation of the one described in the preprint, is found here). The preprint makes a theoretical case for a Higgs boson as a composite object made of gauge bosons, whose mass is half of the Higgs vacuum expectation value (vev) at tree level, before adjustment for the higher order loops that bridge the gap between the tree level estimate and the experimentally observed value.

The paper is notable because it takes these related notions from mere numerology to something with a plausible theoretical foundation that can close the gaps between rough first order theoretical estimates and reality in a precise, calculable, falsifiable way. There is a lot of suggestive evidence that he is barking up the right tree. And there is much to like about the idea of a Higgs boson as a composite of the Standard Model gauge bosons, as discussed in the final section of this post.

The preprint observes that the Higgs boson mass is close to half of the Higgs vev, 123 GeV (more precisely, about 123.11 GeV), a difference of about 2%. This gap is material, on the order of a five sigma difference from the experimentally measured value, but the explanation for the discrepancy is interesting and plausible (some mathematical symbols translated into words):

The resulting value MH = ½v = 123 GeV matches the observed Higgs mass of 126 GeV to about 2%. A comparable agreement exists between the tree-level mass of the W gauge boson MW = ½gv = 78.9 GeV in (8) and its observed mass of 80.4 GeV. Such an accuracy is typical of the tree-level approximation, which neglects loop corrections of the order the weak force coupling constant = g^2/4pi which is approximately equal to 3%. It is reassuring to see the Higgs mass emerging directly from the concept of a Higgs boson composed of gauge bosons.

The summary at the end of the paper notes that:

In summary, a new concept is proposed for electroweak symmetry breaking, where the Higgs boson is identified with a scalar combination of gauge bosons in gauge invariant fashion. That explains the mass of the Higgs boson with 2% accuracy. In order to replace the standard Higgs scalar, the Brout-Englert-Higgs mechanism of symmetry breaking is generalized from scalars to vectors. The ad-hoc Higgs potential of the standard model is replaced by self-interactions of the SU(2) gauge bosons which can be calculated without adjustable parameters. This leads to finite VEVs of the transverse gauge bosons, which in turn generate gauge boson masses and self-interactions. Since gauge bosons and their interactions are connected directly to the symmetry group of a theory via the adjoint representation and gauge-invariant derivatives, the proposed mechanism of dynamical symmetry breaking is applicable to any non-abelian gauge theory, including grand unified theories and supersymmetry.

In order to test this model, the gauge boson self-interactions need to be worked out. These are the self-energies [of the W+/- and Z bosons] and the four-fold vertex corrections [of the WW, WZ, and ZZ boson combinations]. The VEV of the standard Higgs boson which generates masses for the gauge bosons and for the Higgs itself is now replaced by the VEVs acquired by the W+/- and Z gauge bosons via dynamical symmetry breaking. Since the standard Higgs boson interacts with most of the fundamental particles, its replacement implies rewriting a large portion of the standard model. Approximate results may be obtained by calculating gauge boson self-interactions within the standard model, assuming that the contribution of the standard Higgs boson is small for low-energy phenomena. The upcoming high-energy run of the LHC offers a great opportunity to test the characteristic couplings of the composite Higgs boson, as well as the new gauge boson couplings introduced by their VEVs. If confirmed, the concept of a Higgs boson composed of gauge bosons would open the door to escape the confine of the standard model and calculate previously inaccessible masses and couplings, such as the Higgs mass and its couplings.

Background: The Standard Model Constants

The error-weighted combined experimental value of the Higgs boson mass, as of the latest updated results from the LHC in September of 2014, is 125.17 GeV, with a one sigma margin of error of about 0.3 GeV to 0.5 GeV. In other words, there is at least a 95% probability that the true Higgs boson mass is between 124.17 GeV and 126.17 GeV (two sigma at 0.5 GeV), and the 95% probability range is probably closer to 124.37 GeV to 125.97 GeV (two sigma at 0.4 GeV).

The experimentally measured value of the Higgs vev is 246.2279579 +/- 0.0000010 GeV. Conceptually, this is a function of the SU(2) electroweak force coupling constant g and the W boson mass. In practice, it is determined using precision measurements of muon decays.
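As a rough check of the figures above, the following Python sketch compares the tree-level estimate (half of the Higgs vev) with the measured Higgs boson mass. The one sigma value of 0.4 GeV is an assumption, taken as the midpoint of the 0.3 GeV to 0.5 GeV range quoted above, and the variable and function names are purely illustrative.

```python
# Inputs quoted in this post (GeV).
HIGGS_VEV = 246.2279579
HIGGS_MASS = 125.17
SIGMA = 0.4  # assumed: midpoint of the quoted 0.3-0.5 GeV range


def two_sigma_interval(central, sigma):
    """Return the (low, high) bounds of a +/- 2 sigma interval."""
    return central - 2 * sigma, central + 2 * sigma


tree_level = HIGGS_VEV / 2            # tree-level estimate, MH = v/2
gap = HIGGS_MASS - tree_level         # shortfall of the estimate
gap_percent = 100 * gap / HIGGS_MASS
gap_sigmas = gap / SIGMA
low, high = two_sigma_interval(HIGGS_MASS, SIGMA)

print(f"tree-level estimate: {tree_level:.2f} GeV")
print(f"gap: {gap:.2f} GeV ({gap_percent:.1f}%, {gap_sigmas:.1f} sigma)")
print(f"2 sigma range: {low:.2f} to {high:.2f} GeV")
```

With these inputs the gap is a little over 2 GeV, which is where the "about 2%" and "on the order of five sigma" descriptions come from; a different choice of one sigma within the quoted range changes the sigma count but not the percentage.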

The other fundamental particle masses in the Standard Model are as follows:

* The top quark mass is 173.34 +/- 0.76 GeV (determined based upon the data from the CDF and D0 experiments at the now closed Tevatron collider and the ATLAS and CMS experiments at the Large Hadron Collider as of March 20, 2014).

* The bottom quark mass is 4.18 +/- 0.03 GeV (per the Particle Data Group). A recent QCD study has claimed, however, that the bottom quark mass is actually 4.169 +/- 0.008 GeV.

* The charm quark mass is 1.275 +/- 0.025 GeV (per the Particle Data Group). A recent QCD study has claimed, however, that the charm quark mass is actually 1.273 +/- 0.006 GeV.

* The strange quark mass is 0.095 +/- 0.005 GeV (per the Particle Data Group).

* The up quark mass and down quark mass are each less than 0.01 GeV with more than 95% confidence, although the up quark and down quark pole masses are ill defined, so these masses are instead usually reported at an energy scale of 2 GeV.

* The tau charged lepton mass is 1.77682 +/- 0.00016 GeV (per the Particle Data Group).

* The muon mass is 0.1056583715 +/- 0.0000000035 GeV (per the Particle Data Group).

* The electron mass is 0.000510998928 +/- 0.000000000011 GeV (per the Particle Data Group).

* Each of the three Standard Model neutrino mass eigenstates (regardless of the neutrino mass hierarchy that proves to be correct) is less than 0.000000001 GeV.

* The W boson mass is 80.385 +/- 0.015 GeV.

* The Z boson mass is 91.1876 +/- 0.0021 GeV.

* Photons and gluons have an exactly zero rest mass (as does the hypothetical graviton).

The only other experimentally determined Standard Model constants not set forth above are:

* The strong force coupling constant (about 0.1185 +/- 0.0006 at the Z boson mass energy scale per the Particle Data Group).

* The U(1) electroweak coupling constant g', which is known with exquisite precision. I believe that the value of g is about 0.65293 and that the value of g' is about 0.34969 (both of which are known with much greater precision).

* The four parameters of the CKM matrix, which are known with considerable precision. In the Wolfenstein parameterization, they are λ = 0.22537 ± 0.00061, A = 0.814 +0.023/−0.024, ρ̄ = 0.117 ± 0.021, and η̄ = 0.353 ± 0.013.

* The four parameters of the PMNS matrix, three of which are known with moderate accuracy. These three parameters are theta12 = 33.36 +0.81/-0.78 degrees, theta23 = 40.0 +2.1/-1.5 degrees or 50.4 +1.3/-1.3 degrees, and theta13 = 8.66 +0.44/-0.46 degrees.

None of these are pertinent to the issues discussed in this post. All of these, except the CP violating phase of the PMNS matrix and the quadrant of one of the PMNS matrix parameters, have been measured with reasonable precision.
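Although the constants in this list play no direct role in what follows, the values of g and g' quoted above can be sanity-checked against the boson masses. The sketch below assumes the textbook tree-level relations M_W = g v / 2, M_Z = sqrt(g^2 + g'^2) v / 2 and sin^2(theta_W) = g'^2 / (g^2 + g'^2); only the first of these appears in the quoted preprint text, and the names used are illustrative.

```python
import math

G = 0.65293              # SU(2) coupling quoted in this post
G_PRIME = 0.34969        # U(1) coupling quoted in this post
HIGGS_VEV = 246.2279579  # GeV

# Tree-level gauge boson masses implied by the couplings and the vev.
m_w = 0.5 * G * HIGGS_VEV
m_z = 0.5 * math.hypot(G, G_PRIME) * HIGGS_VEV

# Weak mixing angle implied by the same two couplings.
sin2_theta_w = G_PRIME**2 / (G**2 + G_PRIME**2)

print(f"M_W = {m_w:.3f} GeV, M_Z = {m_z:.3f} GeV")
print(f"sin^2(theta_W) = {sin2_theta_w:.4f}")
```

These coupling values land very close to the measured W and Z masses, which suggests they were derived from those masses in the first place. The preprint's quoted tree-level figure of 78.9 GeV would correspond to a somewhat smaller g of about 0.641 in the same relation.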

General relativity involves two constants (Newton's constant which is 6.67384(80)×10^-11 m^3*kg^-1*s^-2 and the cosmological constant which is approximately 10^-52 m^-2).

In addition, Planck's constant (6.62606957(29)×10^-34 J*s) and the speed of light (299792458 m*s^-1) are experimentally measured constants (even though the speed of light's value is now part of the definition of the meter) that are known with great precision and which must be known to do fundamental physics in the Standard Model and/or General Relativity.

A small number of additional experimentally determined constants may be necessary to describe dark matter phenomena and cosmological inflation.

A Higgs Boson Mass Numerology Recap

The intermediate equations in the tree-level analysis in the paper suggest how the 125.98 +/- 0.03 GeV/c^2 value implied by the simple 2H=2W+Z formula could be close to the actual result. If this simple formula were true, the combined electroweak fits of the W boson mass, top quark mass and Higgs boson mass would favor a value at the low end of that range, perhaps 125.95 GeV/c^2.

* * *

An alternative possibility is that one half of the square of the Higgs vev equals exactly the sum of the squares of the masses of the massive fundamental bosons of the Standard Model (and that the sum of the squares of the masses of the fundamental fermions has the same value as the sum of the squares of the boson masses), i.e., for Higgs vev=V, Higgs boson mass=H, W boson mass=W, and Z boson mass=Z:

H^2=(V^2)/2-W^2-Z^2. This implies a Higgs boson mass of 124.648 +/- 0.010 GeV, with combined electroweak fits favoring a value at the high end of this range (perhaps 124.658 GeV). (This would imply a top quark mass of about 174.1 GeV, which is consistent with the current best estimates of the top quark mass; a slightly lower top quark mass of 173.1 GeV would be implied if the Higgs boson mass were, for instance, 125.95 GeV; both of these top quark masses are within 1 standard deviation of the experimentally measured value of the top quark mass.)

This could also have roots in the analysis in the paper, which includes half of the square of the Higgs vev, the W boson mass, and the Z boson mass in its analysis (and has terms for the photon mass that drop out because the photon has a zero mass).

* * *

Thus, both alternative possibilities (2H=2W+Z, and sum of F^2 = sum of B^2 = 1/2 of the square of the Higgs vev) are roughly consistent with the experimental evidence, although they are clearly inconsistent with each other when pole masses are used for each boson.
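The two candidate formulas are easy to evaluate side by side. The sketch below is only an illustration with made-up function names; it assumes a W boson mass of 80.385 GeV, which is the input that reproduces the 125.98 GeV and 124.648 GeV figures quoted in this section.

```python
import math

HIGGS_VEV = 246.2279579  # GeV
W_MASS = 80.385          # GeV, assumed input (see the note above)
Z_MASS = 91.1876         # GeV


def higgs_from_linear_sum(w, z):
    """Solve 2H = 2W + Z for H."""
    return w + z / 2


def higgs_from_squares(vev, w, z):
    """Solve H^2 = V^2/2 - W^2 - Z^2 for H."""
    return math.sqrt(vev**2 / 2 - w**2 - z**2)


h_linear = higgs_from_linear_sum(W_MASS, Z_MASS)
h_squares = higgs_from_squares(HIGGS_VEV, W_MASS, Z_MASS)

print(f"2H = 2W + Z           -> H = {h_linear:.3f} GeV")
print(f"H^2 = V^2/2 - W^2 - Z^2 -> H = {h_squares:.3f} GeV")
print(f"difference: {h_linear - h_squares:.2f} GeV")
```

The two predictions differ by roughly 1.3 GeV, far more than the quoted uncertainty of either one, which is why both cannot hold at once when evaluated with pole masses.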

It is also possible, however, that the correct scale at which to evaluate the masses in these formulas might, for example, be closer to the Higgs vev energy scale of about 246 GeV, and that at that scale, both formulas might be true simultaneously. Renormalization of masses as energy scales increase shrinks the fundamental gauge boson masses more rapidly than it shrinks the fundamental fermion masses, and the energy scale at which the sum of the squares of the fermion masses equals the sum of the squares of the boson masses is less than 1,000 GeV (i.e. 1 TeV) and not less than the value determined using the pole masses of the respective fundamental particles.

In a naive calculation using the best known values of the fundamental fermion and boson masses, the sum of the squares of the fermion masses is not quite equal to the sum of the squares of the boson masses (including the Higgs boson mass). But the uncertainties in the top quark mass, the Higgs boson mass, and the W boson mass (in that order) are sufficient to make it unclear how the sum of the squares of the fermion masses relates to the sum of the squares of the boson masses, either at pole masses or at any other energy scale. The uncertainties in these three squared masses predominate over any uncertainties in the masses of the other 11 fermions, the Z boson (whose mass is known with seven times more precision than the W boson mass despite having a higher absolute value), and the value of the Higgs vev.
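The naive calculation can be sketched as follows. Several assumptions are baked in and should be read as such: each particle is counted once (no color or spin multiplicities), the up quark, down quark and neutrinos are omitted as numerically negligible, the Higgs one sigma is taken as 0.4 GeV, and the W and Z inputs are 80.385 +/- 0.015 GeV and 91.1876 +/- 0.0021 GeV. The uncertainty of each squared mass uses first-order error propagation, delta(m^2) = 2 m sigma.

```python
HIGGS_VEV = 246.2279579  # GeV

# (mass, one sigma uncertainty) in GeV.
FERMIONS = {
    "top": (173.34, 0.76),
    "bottom": (4.18, 0.03),
    "charm": (1.275, 0.025),
    "strange": (0.095, 0.005),
    "tau": (1.77682, 0.00016),
    "muon": (0.1056583715, 0.0000000035),
    "electron": (0.000510998928, 0.000000000011),
}
BOSONS = {
    "Higgs": (125.17, 0.4),  # 0.4 GeV is an assumed one sigma value
    "W": (80.385, 0.015),    # assumed input
    "Z": (91.1876, 0.0021),  # assumed input
}


def sum_of_squares(particles):
    """Sum of squared masses in GeV^2."""
    return sum(mass**2 for mass, _ in particles.values())


def squared_mass_uncertainty(mass, sigma):
    """First-order propagated uncertainty of mass^2."""
    return 2 * mass * sigma


fermion_sum = sum_of_squares(FERMIONS)
boson_sum = sum_of_squares(BOSONS)
half_vev_squared = HIGGS_VEV**2 / 2

# Rank every particle by how much it blurs the comparison.
ranked = sorted(
    ((name, squared_mass_uncertainty(mass, sigma))
     for name, (mass, sigma) in {**FERMIONS, **BOSONS}.items()),
    key=lambda item: item[1],
    reverse=True,
)

print(f"fermions: {fermion_sum:.0f}  bosons: {boson_sum:.0f}  V^2/2: {half_vev_squared:.0f}")
for name, delta in ranked[:4]:
    print(f"{name:>6}: +/- {delta:.2f} GeV^2")
```

Under these assumptions the top quark alone contributes an uncertainty of a few hundred GeV^2, comparable in size to the gap between the two sums, while the Z boson and the lighter fermions contribute well under 1 GeV^2 each.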

If these top quark and Higgs boson mass measurements could be made about three times more precise than the current state of the art experimental measurements, most of the current uncertainty regarding the nature of the Higgs boson mass and the relationship of the fundamental particle masses to the Higgs vev could be eliminated. The remainder of the LHC experiments will almost surely improve the accuracy of both of these measurements, but may be hard pressed to improve them by that much before these experiments are completed.

What Does A Composite Higgs Boson Hypothesis Imply?

It is always a plus to be able to derive any experimentally determined Standard Model parameter from other Standard Model parameters, reducing the number of degrees of freedom in the theory. This would make electroweak unification even more elegant.

More deeply, if the Higgs boson is merely a composite of the Standard Model electroweak gauge bosons, then:

(1) The hierarchy problem as conventionally posed evaporates, because the Higgs boson mass itself is no longer fine tuned. The profound fine tuning of the Higgs boson mass in the Standard Model is the gist of the hierarchy problem. The demise of the hierarchy problem removes an important motivation for SUSY theories.

(2) Some of the issues associated with the hierarchy problem migrate to the question of why the W and Z boson mass scales are what they are, but if the Higgs boson mass works out to be such that the fermionic and bosonic contributions to the Higgs vev are identical, then the W and Z boson masses are a function of the fermion masses and an electroweak mixing angle, or equivalently, the fermion masses are a function of the electroweak boson masses and the texture of the fermion mass matrix.

(3) The spotlight in the mystery of the nature of the fermion mass matrix would cease to be arbitrary coupling constants with the Higgs boson and return squarely to W boson interactions which are the only means in the Standard Model by which fermions of one flavor can transform into fermions of another flavor.

(4) There are no longer any fundamental Standard Model bosons that are not spin-1 (the hypothetical spin-2 graviton is not part of the Standard Model). All fundamental Standard Model fermions are spin-1/2. Eliminating a fundamental spin-0 boson from the Standard Model changes the Lie groups and Lie algebras which can generate the Standard Model fundamental particles without excessive or missing particles in a grand unified theory.

(5) If the Higgs boson couplings are derivative of the W and Z boson couplings, then neutrinos, which couple with the W and Z boson, although only via the weak force, should derive their masses via the composite Higgs boson mechanism, just as other fermions in the Standard Model do. This implies that neutrinos should have Dirac mass just like other particles that derive their masses from the same interactions.

(6) A composite Higgs boson makes models with additional Higgs doublets less plausible, except to the extent that different combinations of fundamental Standard Model gauge bosons can generate these exotic Higgs bosons, which if they can, would then have masses and other properties that could be determined from first principles and would be divorced from supersymmetry theories.

(7) A composite Higgs boson might slightly tweak the running of the Standard Model coupling constants, influencing gauge unification at high energies (as could quantum gravity effects). A slight tweak of 1-2% to the running of one or more of the coupling constants from the electroweak scale to the Planck or GUT scale (more than a dozen orders of magnitude) is all that would be necessary for the Standard Model coupling constants to unify at some extremely high energy. It may also have implications for the unstable v. metastable v. stable nature of the vacuum.
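To give a feel for the near miss in gauge unification mentioned in point (7), here is a minimal sketch of the textbook one-loop running of the three Standard Model couplings. Nothing in it comes from the preprint: the inverse couplings at the Z mass are approximate values assumed for illustration (with the U(1) coupling in the usual GUT normalization), and the one-loop coefficients are the standard Standard Model ones.

```python
import math

Z_MASS = 91.1876  # GeV

# Approximate inverse couplings at the Z mass (assumed inputs).
ALPHA_INV_AT_MZ = {"U(1)": 59.0, "SU(2)": 29.6, "SU(3)": 1 / 0.1185}
# One-loop Standard Model beta coefficients.
BETA = {"U(1)": 41 / 10, "SU(2)": -19 / 6, "SU(3)": -7.0}


def alpha_inv(group, mu):
    """One-loop inverse coupling of `group` at energy scale mu (GeV)."""
    return ALPHA_INV_AT_MZ[group] - BETA[group] / (2 * math.pi) * math.log(mu / Z_MASS)


def crossing_scale(a, b):
    """Energy scale (GeV) at which the one-loop couplings of a and b are equal."""
    log_ratio = (2 * math.pi * (ALPHA_INV_AT_MZ[a] - ALPHA_INV_AT_MZ[b])
                 / (BETA[a] - BETA[b]))
    return Z_MASS * math.exp(log_ratio)


c12 = crossing_scale("U(1)", "SU(2)")
c13 = crossing_scale("U(1)", "SU(3)")
c23 = crossing_scale("SU(2)", "SU(3)")

for label, scale in (("U(1)/SU(2)", c12), ("U(1)/SU(3)", c13), ("SU(2)/SU(3)", c23)):
    print(f"{label} cross near {scale:.1e} GeV")
```

At one loop the three pairwise crossing points fall at different scales rather than at a single point, which is the sense in which the Standard Model couplings nearly, but not exactly, unify. How large a modification would be needed to make them coincide is not something this sketch establishes.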

The Proca Model and Podolsky Generalized Electrodynamics

Background and Motivation

The Standard Model, and in particular, the quantum electrodynamics (QED) component of the Standard Model, assumes that the photon does not have mass (although photons do, of course, have energy, and hence are subject to gravity in general relativity in which gravity acts upon both matter and energy).

Now, almost nobody seriously thinks that the assumption of QED that the photon is massless is wrong, because the predictions of QED are more precise, and indeed more precisely experimentally tested, than any other part of the Standard Model, or for that matter almost anything in experimental physics whatsoever. There is no meaningful experimental or theoretical impetus to abandon the assumption that the photon is massless.

But generalizations of Standard Model physics that parameterize deviations from the Standard Model expectation provide a useful tool for devising experimental tests to confirm or contradict the Standard Model and to quantify how much deviation from the Standard Model has been experimentally excluded.

Also, any time one can demonstrate in a theoretically rigorous way that it is possible to have a new kind of force, with a massive carrier boson, that behaves very much like QED but not exactly like QED, such theories, once their implications are understood, can be considered as possible explanations for unsolved problems in physics.

For example, many investigators have considered a massive "dark photon", very similar to the models discussed below, as a means by which dark matter fermions could be self-interacting, because self-interacting dark matter models seem to be better at reproducing the dark matter phenomena that astronomers observe than dark matter models in which dark matter fermions interact solely via gravity and Fermi contact forces (i.e. the physical effects of the fact that two fermions can't be in the same place at the same time) with other particles and with each other.

The Proca Model and Podolsky Generalized Electrodynamics

A paper published in 2011, and just posted to arXiv today for some reason, evaluates the experimental limitations on this assumption.

The Proca model of Romanian physicist Alexandru Proca (who became a naturalized French citizen later in life when he married a French woman), developed on the eve of World War II, mostly from 1936-1938, considers a modification of QED in which the photon has a tiny, but non-zero mass. Proca's equations still have utility because they describe the motion of vector mesons and the weak force bosons, both of which are massive spin-1 particles that operate only at short ranges as a result of their mass and short mean lifetimes.

Experimental evidence excludes the possibility that photons have Proca mass (according to the 2011 paper linked above and cited below) down to masses of about 10^-39 grams (which is roughly equivalent to 10^-6 eV/c^2). This is on the order of 10,000 times lighter than the average of the three neutrino masses (an average which varies by about a factor of ten between normal, inverted and degenerate mass hierarchies). The exclusion (assuming that no mass is discovered) would be about 100 times more stringent if an experiment proposed in 2007 is carried out. This exclusion for Proca mass is roughly equal to the energy of a photon with a frequency of a few hundred MHz (in the range of the VHF and UHF electromagnetic waves used to broadcast television transmissions); visible light, with a frequency of several hundred THz, is roughly a million times more energetic.
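The unit conversions behind these comparisons can be checked with a few lines of Python. This is only a sketch: the function names are illustrative, and the constants are the current exact SI values rather than the slightly older figures quoted elsewhere in this post.

```python
SPEED_OF_LIGHT = 299_792_458.0    # m/s
PLANCK_CONSTANT = 6.62607015e-34  # J*s (exact SI value)
JOULES_PER_EV = 1.602176634e-19   # J per eV (exact SI value)


def grams_to_ev(mass_grams):
    """Rest energy in eV of a mass given in grams, via E = m c^2."""
    mass_kg = mass_grams * 1e-3
    return mass_kg * SPEED_OF_LIGHT**2 / JOULES_PER_EV


def ev_to_hertz(energy_ev):
    """Frequency in Hz of a photon with the given energy, via E = h f."""
    return energy_ev * JOULES_PER_EV / PLANCK_CONSTANT


limit_ev = grams_to_ev(1e-39)
limit_hz = ev_to_hertz(1e-6)

print(f"1e-39 g corresponds to {limit_ev:.2e} eV/c^2")
print(f"a 1e-6 eV photon has a frequency of {limit_hz / 1e6:.0f} MHz")
```

The first conversion comes out a little under 10^-6 eV/c^2, consistent with "roughly equivalent", and the second gives the photon frequency whose energy matches a 10^-6 eV mass limit.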

The Particle Data Group's best estimate of the maximum mass of the photon is much smaller than the limit cited in the 2011 article, with a mass of less than 10^-18 eV/c^2 from a 2007 paper (twelve orders of magnitude more strict). A 2006 study cited but not relied upon by PDG claimed a limit ten times as strong. A footnote at the PDG entry based on some other 2007 papers notes that a much stronger limit can be imposed if a photon acquires mass at all scales by a means other than the Higgs mechanism (with formatting conventions for small numbers adjusted to be consistent with this post):

But, it is possible to find a loophole in the assumption that a massless photon would break the gauge symmetry of QED. Specifically, the Podolsky Generalized Electrodynamics model, proposed in the 1940s by Podolsky, incorporates a massive photon in a manner that does not break gauge symmetry. In this model, the photon has both a massless and massive mode, with the former interpreted as a photon and the later tentatively associated with the neutrino by the Podolsky when the model was formulated (an interpretation that has since been abandoned for a variety of reasons). In the Podolsky Generalized Electrodynamics model, Coulomb's inverse square law describing the electric force of a point charge is slightly modified. Podolosky Generalized Electrodynamics is equivalent to QED in the limit as Podolsky's constant "a" approaches zero.

Podolosky Generalized Electrodynamics is also notable because it can be derived as an alternative solution to one that uses a set of very basic assumptions to derive Maxwell's Equations from first principles, and does so in a manner that prevents the infinities found in QED because it has a point source (which Feynman and others solved for practical purposes with the technique of renormalization) from arising.

In the Podolsky Generalized Electrodynamics model, there is a constant "a" with units of length associated with the massive mode of the photon must have a value that is experimentally required to be smaller than the current sensitivity of any current experiments. But, "a" is required as a consequence of the "value of the ground state energy of the Hydrogen atom . . . to be smaller than 5.6 fm, or in energy scales larger than 35.51 MeV."

In practice (for reasons that are not obvious without reading the full paper), this means that deviations from QED due to a non-zero value of "a" could be observed only at high energy particle accelerators.

It isn't inconceivable, however, to imagine that Podolsky's constant had a value on the order of the Planck length (i.e. 1.6 * 10-35 meters), which would be manifest only at energies approaching the Planck energy which is far beyond the capacity of any man made experiment to create, a value which could be correct without violating any current experimental constraints that have been rigorously analyzed to date.

Selected References

* B. Podolsky, 62 Phys. Rev. 68 (1942).

* B. Podolsky, C. Kikuchi, 65 Phys. Rev. 228 (1944).

* B. Podolsky, P. Schwed, 20 Rev. Mod. Phys. 40 (1948).

* R. R. Cuzinatto, C. A. M. de Melo, L. G. Medeiros, P. J. Pompeia, "How can one probe Podolsky Electrodynamics?", 26 International Journal of Modern Physics A 3641-3651 (2011) (arVix preprint linked to in post).

The Standard Model, and in particular, the quantum electrodynamics (QED) component of the Standard Model, assumes that the photon does not have mass (although photons do, of course, have energy, and hence are subject to gravity in general relativity in which gravity acts upon both matter and energy).

Now, almost nobody seriously thinks that the assumption of QED that the photon is massless is wrong, because the predictions of QED are more precise, and have been more precisely tested experimentally, than any other part of the Standard Model, or for that matter almost anything else in experimental physics. There is no meaningful experimental or theoretical impetus to abandon the assumption that the photon is massless.

But generalizations of Standard Model physics that parameterize deviations from the Standard Model expectation provide a useful tool for devising experimental tests to confirm or contradict the Standard Model, and for quantifying how much deviation from the Standard Model has been experimentally excluded.

Also, any time one can demonstrate, in a theoretically rigorous way, that it is possible to have a new kind of force with a massive carrier boson that behaves very much like QED, but not exactly like QED, the resulting theory, once its implications are understood, can be considered as a possible explanation for unsolved problems in physics.

For example, many investigators have considered a massive "dark photon," very similar to the models discussed below, as a means by which dark matter fermions could be self-interacting, because self-interacting dark matter models seem to be better at reproducing the dark matter phenomena that astronomers observe than dark matter models in which dark matter fermions interact solely via gravity and Fermi contact forces (i.e. the physical effects of the fact that two fermions can't be in the same place at the same time) with other particles and with each other.

The Proca Model and Podolsky Generalized Electrodynamics

A paper published in 2011, and just posted to arXiv today for some reason, evaluates the experimental limitations on this assumption.

The Proca model, which Romanian physicist Alexandru Proca (who became a naturalized French citizen later in life when he married a French woman) developed on the eve of World War II, mostly from 1936 to 1938, considers a modification of QED in which the photon has a tiny, but non-zero, mass. Proca's equations still have utility because they describe the motion of vector mesons and the weak force bosons, both of which are massive spin-1 particles that operate only at short ranges as a result of their mass and short mean lifetimes.

Experimental evidence excludes the possibility that photons have Proca mass (according to the 2011 paper linked above and cited below) down to masses of about 10^-39 grams (which is roughly equivalent to 10^-6 eV/c^2). This is on the order of 10,000 times lighter than the average of the three neutrino masses (an average which varies by about a factor of ten between normal, inverted and degenerate mass hierarchies). The exclusion (assuming that no mass is discovered) would be about 100 times more stringent if an experiment proposed in 2007 is carried out. This exclusion for Proca mass is within an order of magnitude or so of the energy of a photon with a 3 GHz frequency (the frequency of UHF electromagnetic waves used to broadcast television transmissions); visible light has more energy and a roughly 300 THz frequency (100,000 times more energetic).
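The unit conversions in the preceding paragraph can be checked with a few lines of arithmetic. This is only a rough sketch: the function and variable names are made up for illustration, and the constants are rounded CODATA values rather than anything taken from the 2011 paper.

```python
# Rounded physical constants (assumed values, not from the paper).
EV_PER_GRAM = 5.609588e32    # rest energy of one gram of mass, in eV
PLANCK_EV_S = 4.135668e-15   # Planck constant h, in eV * s


def grams_to_ev(mass_grams):
    """Convert a mass in grams to the equivalent mass in eV/c^2."""
    return mass_grams * EV_PER_GRAM


def photon_energy_ev(frequency_hz):
    """Energy in eV of a photon of the given frequency, E = h * f."""
    return PLANCK_EV_S * frequency_hz


# Proca mass exclusion of about 1e-39 grams, expressed in eV/c^2.
proca_limit_ev = grams_to_ev(1e-39)

# Photon energies at 3 GHz (upper UHF) and at 300 THz.
uhf_photon_ev = photon_energy_ev(3e9)
optical_photon_ev = photon_energy_ev(300e12)

# Photon energy scales linearly with frequency, so this is 300 THz / 3 GHz.
energy_ratio = optical_photon_ev / uhf_photon_ev

print(f"Proca limit:    {proca_limit_ev:.2e} eV/c^2")
print(f"3 GHz photon:   {uhf_photon_ev:.2e} eV")
print(f"300 THz photon: {optical_photon_ev:.2e} eV")
print(f"Energy ratio:   {energy_ratio:.0f}")
```

The mass limit comes out a little under 10^-6 eV/c^2 and the 3 GHz photon energy a little over 10^-5 eV, so the comparison between the two holds only at the order-of-magnitude level.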

The Particle Data Group's best estimate of the maximum mass of the photon is much smaller than the limit cited in the 2011 article, with a mass of less than 10^-18 eV/c^2 from a 2007 paper (twelve orders of magnitude more strict). A 2006 study cited but not relied upon by PDG claimed a limit ten times as strong. A footnote at the PDG entry based on some other 2007 papers notes that a much stronger limit can be imposed if a photon acquires mass at all scales by a means other than the Higgs mechanism (with formatting conventions for small numbers adjusted to be consistent with this post):

"When trying to measure m one must distinguish between measurements performed on large and small scales. If the photon acquires mass by the Higgs mechanism, the large-scale behavior of the photon might be effectively Maxwellian. If, on the other hand, one postulates the Proca regime for all scales, the very existence of the galactic field implies m < 10^-26 eV/c^2, as correctly calculated by YAMAGUCHI 1959 and CHIBISOV 1976."

Ordinarily a massive photon would break the gauge symmetry of QED, which would be inconsistent with all sorts of experimentally confirmed theoretical predictions that rely upon the fact that the gauge symmetry of QED is unbroken.

But, it is possible to find a loophole in the assumption that a massive photon would break the gauge symmetry of QED. Specifically, the Podolsky Generalized Electrodynamics model, proposed in the 1940s by Podolsky, incorporates a massive photon in a manner that does not break gauge symmetry. In this model, the photon has both a massless and a massive mode, with the former interpreted as a photon and the latter tentatively associated with the neutrino by Podolsky when the model was formulated (an interpretation that has since been abandoned for a variety of reasons). In the Podolsky Generalized Electrodynamics model, Coulomb's inverse square law describing the electric force of a point charge is slightly modified. Podolsky Generalized Electrodynamics is equivalent to QED in the limit as Podolsky's constant "a" approaches zero.

Podolsky Generalized Electrodynamics is also notable because it can be derived as an alternative solution from the same set of very basic assumptions that are used to derive Maxwell's Equations from first principles, and it does so in a manner that prevents the infinities that arise in QED from its point sources (which Feynman and others solved for practical purposes with the technique of renormalization).
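The post does not spell out the modified force law. The form usually quoted in the literature on Podolsky electrodynamics, assumed here rather than taken from the post, is a static potential V(r) = q (1 - e^(-r/a)) / r, in units where the Coulomb potential is q / r. The rough sketch below, with illustrative function names, shows the two properties described above: the potential stays finite at the position of the point charge, and it reduces to the Coulomb potential as "a" approaches zero.

```python
import math


def coulomb_potential(r, q=1.0):
    """Coulomb potential of a point charge, in units where 1/(4*pi*eps0) = 1."""
    return q / r


def podolsky_potential(r, a, q=1.0):
    """Assumed Podolsky-type potential: q * (1 - exp(-r / a)) / r.

    The parameter a is Podolsky's constant (a length). The form is the one
    commonly quoted in the literature, not one given in the post.
    """
    if r == 0.0:
        # Finite limit of q * (1 - exp(-r / a)) / r as r -> 0.
        return q / a
    # -expm1(-x) equals 1 - exp(-x) and stays accurate for small x.
    return q * (-math.expm1(-r / a)) / r


a = 0.5
for r in (0.0, 0.01, 0.5, 5.0, 50.0):
    podolsky = podolsky_potential(r, a)
    coulomb = coulomb_potential(r) if r > 0 else float("inf")
    print(f"r = {r:6.2f}  Podolsky = {podolsky:10.6f}  Coulomb = {coulomb:10.6f}")
```

At distances much larger than "a" the two potentials are indistinguishable, which is why a very small "a" would only show up in short-distance (high energy) experiments.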

In the Podolsky Generalized Electrodynamics model, a constant "a" with units of length is associated with the massive mode of the photon, and its value is experimentally required to be too small for any current experiment to detect. Specifically, "a" is required as a consequence of the "value of the ground state energy of the Hydrogen atom . . . to be smaller than 5.6 fm, or in energy scales larger than 35.51 MeV."

In practice (for reasons that are not obvious without reading the full paper), this means that deviations from QED due to a non-zero value of "a" could be observed only at high energy particle accelerators.

It isn't inconceivable, however, that Podolsky's constant has a value on the order of the Planck length (i.e. 1.6 * 10^-35 meters). Such a value would be manifest only at energies approaching the Planck energy, which is far beyond the capacity of any man-made experiment to create, and it could be correct without violating any current experimental constraints that have been rigorously analyzed to date.
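The correspondence between a length scale and an energy scale used in the last few paragraphs is E = hbar * c / a. A minimal sketch of that conversion follows, with an illustrative function name and a rounded value of hbar * c that is assumed rather than taken from the paper.

```python
HBAR_C_MEV_FM = 197.327  # hbar * c in MeV * fm (rounded, assumed value)
FM_PER_METER = 1e15      # femtometers per meter


def length_to_energy_mev(length_fm):
    """Energy scale in MeV corresponding to a length in fm, E = hbar * c / a."""
    return HBAR_C_MEV_FM / length_fm


# Hydrogen ground state bound on Podolsky's constant quoted above.
hydrogen_bound_mev = length_to_energy_mev(5.6)

# Planck length of about 1.6e-35 meters, converted to fm and then to GeV.
planck_length_fm = 1.6e-35 * FM_PER_METER
planck_scale_gev = length_to_energy_mev(planck_length_fm) / 1000.0

print(f"5.6 fm        -> {hydrogen_bound_mev:.2f} MeV")
print(f"Planck length -> {planck_scale_gev:.2e} GeV")
```

With the rounded 5.6 fm figure this gives about 35.2 MeV, close to the 35.51 MeV in the quoted passage, which presumably reflects a less rounded value of the length. The Planck length maps to roughly 10^19 GeV, many orders of magnitude above any collider energy.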

Selected References

* B. Podolsky, 62 Phys. Rev. 68 (1942).

* B. Podolsky, C. Kikuchi, 65 Phys. Rev. 228 (1944).

* B. Podolsky, P. Schwed, 20 Rev. Mod. Phys. 40 (1948).

* R. R. Cuzinatto, C. A. M. de Melo, L. G. Medeiros, P. J. Pompeia, "How can one probe Podolsky Electrodynamics?", 26 International Journal of Modern Physics A 3641-3651 (2011) (arXiv preprint linked to in post).

Monday, February 23, 2015

Occam's Razor v. Clinton's Rule In Physics

"[T]he A particle is one of the five physical states arising in the Higgs boson sector if you admit to add to the Standard Model Lagrangian density two doublets of complex scalar fields instead than just one.

"Why should we be such perverts ? I.e., if the theory works fine with fewer parameters, why adding more? Well: the answer is the same as that given by Clinton when he was asked why he seduced an intern... Because we could."

From Quantum Diaries Survivor (emphasis in the original) discussing the paper previously blogged here in this post (note that the physicist author's first language is Italian, not English).

In other physics news, a new paper sketches out the main current issues of active investigation in QCD.

And, a new analysis determines that the latest potential signs of SUSY at the LHC aren't. A new CMS study of a quite generalized class of high energy proton-proton collisions with missing transverse energy, an opposite sign lepton pair, and jets, likewise fails to detect hints of SUSY and sets new SUSY limits (e.g., excluding gluino masses of less than about 900 GeV for neutralinos of under 200 GeV, excluding gluino masses up to 1100 GeV for neutralino masses of up to about 800 GeV, and excluding bottom squark masses of 250 GeV to 350 GeV and 450 GeV to 650 GeV subject to various assumptions).

Sunday, February 22, 2015

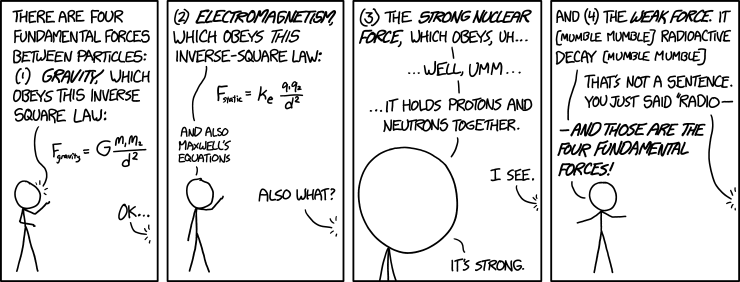

True Statements About Fundamental Physics

Mouse over:

"Of these four forces, there's one we don't fully understand."

"Is it the weak force or the strong --"

"It's gravity."

------------------

Seriously, a hundred years after Einstein published his paper on General Relativity, this is still the most problematic of the fundamental forces. The strong force (QCD) is still hard to calculate, but we think we understand it at a fundamental level.

Tuesday, February 17, 2015

Grain As Prehistory

[This is another draft post from the year 2010 that is resurrected without major further research. It too was never posted because of its original, overambitious scope that has been abandoned in this post.]

"Although corn was domesticated only 8,000 to 10,000 years ago from the grass teosinte, the genetic diversity between any two strains of corn exceeds that found between humans and chimpanzees, species separated by millions of years of evolution. For instance, the strain B73, the agriculturally important and commonly studied variety decoded by the maize genome project, contains 2.3 billion bases, the chemical units that make up DNA. But the genome of a strain of popcorn decoded by researchers in Mexico is 22 percent smaller than B73’s genome.

"“You could fit a whole rice genome in the difference between those two strains of corn,” says Virginia Walbot, a molecular biologist at Stanford University."

From here.

Mostly, this is a sideshow. Different kinds of wheat (which come in diploid to hexaploid varieties, with the hexaploid monster genome found in the most commonly consumed varieties), for example, also show great genetic diversity as measured by numbers of bases, but some of this is a product of how you choose to measure genetic diversity, because the variations aren't simply random mutations.

In fact, other recent research on grain genetics shows that just a handful of key, independent, simple mutations account for the traits that led to the practical differences between the wild plants that were the marginally nourishing ancestors of the world's staple grains (e.g., rice, maize a.k.a. corn, and wheat) and the contemporary domesticated varieties that feed the world.

Far more interesting to me is the extent to which a genetic analysis of foods and domesticated animals makes it possible to localize the epicenters of the Neolithic revolution and date the times when the domestication occurred. Modern humans (i.e. post-Neanderthals) transitioned from many tens of thousands of years as hunter-gatherer societies to farming societies at something like a dozen independent locations around the world at roughly the same time, give or take a few thousand years. Each location domesticated different plants and animals.

Seeds from domesticated plants can often be recognized on sight, survive thousands of years better than dead animal matter, and can be carbon dated.

Comparisons of the genomes of domesticated plants and animals to wild species usually make it possible to make a very specific identification of a common wild ancestor, often an ancestor with a quite narrow geographic range. For example, genetic evidence provides strong support for the notion that all maize has a single common domesticated ancestor about 9,000 years ago in a fairly specific highlands part of Southern Mexico.

Similarly, the story of wheat is also a high definition bit of genetic history.

"Einkorn wheat was one of the earliest cultivated forms of wheat, alongside emmer wheat (T. dicoccon). Grains of wild einkorn have been found in Epi-Paleolithic sites of the Fertile Crescent. It was first domesticated approximately 9000 BP (9000 BP ≈ 7050 BCE), in the Pre-Pottery Neolithic A or B periods. Evidence from DNA finger-printing suggests einkorn was domesticated near Karacadag in southeast Turkey, an area in which a number of PPNB farming villages have been found."

The origins of maize and wheat are known in time to a period of plus or minus a few hundred years, and in place to regions the size of a few contiguous counties. We can trace the origins of the greater Mexican squash-maize-bean triad of staples to separate but adjacent areas, resolve the order in which they were developed (squash came first), and determine from carbon dated archeological evidence how quickly this pattern of domestication spread.

The Hittites

[The following post is extracted from a post written on April 14, 2010, but got lost in the shuffle in my drafts folder when its overambitious original scope got out of hand. I am posting it now, retroactively, without modification to reflect new information, so that the sources and facts noted at the time are available for reference purposes. Further edited for style and grammar on February 18, 2015.]

The Pre-Hittites

The capital of the Hittite empire, where the first documents written in an Indo-European language are found, was Hattusa. Archaeological evidence shows that it was founded sometime between 6000 BCE and 5000 BCE, and Hittite history records that it and the city of Nerik to the North of it were founded by the non-Indo-European language speaking Hattic people who preceded them.

Both Hattusa and Nerik had been founded by speakers of the non-Indo-European, non-Semitic language called Hattic. Hattic shows similarities to both Northwest (e.g., Abkhaz) and South Caucasian (Kartvelian) languages, and was spoken sometime around the 3rd to 2nd millennia BCE. The pre-Indo-European language spoken in Eastern Anatolia and the Zagros mountains, which also shows similarities to the languages of the Caucasus, was Hurrian.

"Sacred and magical texts from Hattusa were often written in Hattic [and] Hurrian . . .even after Hittite became the norm for other writings." This is similar to the survival of Sumerian for religious purposes until around the 1st century BCE, despite the fact that it was replaced by the Semitic language Akkadian in general use roughly 1800 years earlier.

The Early Hittites

The first historical record of an Indo-European language is of Hittite in eastern Anatolia. An Indo-European Hittite language speaking dynasty dates back to at least 1740 BCE in a central Anatolian city (see generally here).

The Hittites called their own language the "language of Nesa," which is the name in Hittite of the ancient city of Kanesh, about 14 miles Northwest of the modern city of Kayseri in central Anatolia. This was an ancient Anatolian city, of pre-literate non-Indo-European language speaking farmers to which an Akkadian language speaking trading colony attached itself as a suburb for about two hundred years until around 1740 BCE.

Around 1740 BCE, the Assyrian culture ends and a Hittite culture appears in the archeological record, when the city is taken by Pithana, the first known Hittite king.

The Hittites of Nesa conquered this city from Kussara, which is believed to have been between the ancient cities of Nesa and Aleppo. (Aleppo, also known as Halab, was first occupied as a city around 5000 BCE and continues to be a major city in Northern Syria.) Their first king was described as the king of this one city-state.

His son, Anitta, sacked Hattusa around 1700 BCE and left the earliest known written Hittite inscription. Kanesh is closer to the source of the Red River than Hattusa, which would later become the Hittite capital and which was ruled at the time of the sack by the Hattic king Piyusti, whom Anitta defeated. The text of Anitta's inscription translates to:

Anitta, Son of Pithana, King of Kussara, speak! He was dear to the Stormgod of Heaven, and when he was dear to the Stormgod of Heaven, the king of Nesa [verb broken off] to the king of Kussara. The king of Kussara, Pithana, came down out of the city in force, and he took the city of Nesa in the night by force. He took the King of Nesa captive, but he did not do any evil to the inhabitants of Nesa; instead, he made them mothers and fathers. After my father, Pithana, I suppressed a revolt in the same year. Whatever lands rose up in the direction of the sunrise, I defeated each of the aforementioned.

Previously, Uhna, the king of Zalpuwas, had removed our Sius from the city of Nesa to the city of Zalpuwas. But subsequently, I, Anittas, the Great King, brought our Sius back from Zalpuwas to Nesa. But Huzziyas, the king of Zalpuwas, I brought back alive to Nesa. The city of Hattusas [tablet broken] contrived. And I abandoned it. But afterwards, when it suffered famine, my goddess, Halmasuwiz, handed it over to me. And in the night I took it by force; and in its place, I sowed weeds. Whoever becomes king after me and settles Hattusas again, may the Stormgod of Heaven smite him!

The capital of the Hittites was moved to Hattusa within a century or two. The Hittites sacked Babylon around 1595 BCE.

The Hittites did not inhabit the North Black Sea plain of Anatolia to the Northeast, however. In this region, they were blocked by the Kaskians, who make their first appearance three hundred years into the Hittite written record, around 1450 BCE, when they took the Hittite holy city of Nerik to the North of the then Hittite capital of Hattusa. Less than a century before the Kaskians sacked the city of Nerik and moved to Anatolia, the Kaskians conquered the Indo-European Palaic language speaking people of Northwest Anatolia.

The Kaskians probably hailed from the Eastern shores of the Sea of Marmara, which is the small sea between the Black Sea and the Mediterranean. The Kaskians continued to harry the Hittites for centuries, sacking Hattusa ca. 1280 BCE, although Hattusa was retaken, as was the city of Nerik, which the Hittites had again lost to the Kaskians. The Kaskians, in an alliance with the Mushki people, toppled the Hittite empire around 1200 BCE, were then repulsed by the Assyrians, and appear to have migrated to the West Caucasus after that defeat.

The Mushki were a people of Eastern Anatolia or the Caucasus, associated with the earliest history of state formation for Caucasian Georgia and Armenia. This suggests that they were likely non-Indo-European speakers, at least originally, although they may have adopted the local Luwian language of their subjects in Neo-Hittite kingdoms that arose after the fall of the Hittite empire in East Anatolia.

From about 1800 BCE to 1600 BCE, the city of Aleppo was the seat of the Kingdom of Yamhad, which was ruled by an Amorite dynasty. The Amorites were a linguistically North Semitic people. There had been Amorite dynasties in the same general region for two hundred years before then (i.e. since at least around 2000 BCE). Yamhad fell to the Hittites sometime in the following century (i.e. sometime between 1600 BCE and 1500 BCE) and was subsequently close to the boundary between the Egyptians to the Southwest, the Mesopotamian empires to the Southeast, and the Hittites to the North, for hundreds of years. There was a non-Indo-European Hurrian minority in Yamhad that exerted a cultural influence on the Kingdom and on its ruling Amorites, who were a Semitic people whose language was probably ancestral to all of the Semitic languages (including Aramaic, Hebrew and Arabic), except Akkadian and the language of Ebla, which is midway between Akkadian and the North Semitic language of the Amorites.

The Amorites, a.k.a. the MAR.TU, were described sometime around the 21st century BCE as follows in Sumerian records (citing E. Chiera, Sumerian Epics and Myths, Chicago, 1934, Nos.58 and 112; E. Chiera, Sumerian Texts of Varied Contents, Chicago, 1934, No.3):

The MAR.TU who know no grain.... The MAR.TU who know no house nor town, the boors of the mountains.... The MAR.TU who digs up truffles... who does not bend his knees (to cultivate the land), who eats raw meat, who has no house during his lifetime, who is not buried after death...

They have prepared wheat and gú-nunuz (grain) as a confection, but an Amorite will eat it without even recognizing what it contains!

In other words, the early pre-dynastic Amorites were probably nomadic herders.

The ancient Semitic city of Ebla was 34 miles southwest of Aleppo and was destroyed between 2334 BCE and 2154 BCE by an Akkadian king. It had a written language, intermediate between Akkadian and North Semitic, that was written from around 2500 BCE to 2240 BCE, and was a merchant-run town trading in wood and textiles whose residents also had a couple hundred thousand herd animals. The early Amorites were known to the people of Ebla as "a rural group living in the narrow basin of the middle and upper Euphrates" (original source: Giorgio Buccellati, "Ebla and the Amorites", Eblaitica 3 (New York University) 1992:83-104), although Ebla would later become a subject kingdom of Yamhad. The Akkadian kings campaigned against the Amorites following the fall of Ebla and recognized them as the main people to the West of their empire, whose other neighbors were the Subartu (probably Hurrians) to the Northeast, Sumer (in South Mesopotamia) and Elam (in the Eastern mountains).

The oldest Akkadian writing is found around 2600 BCE.

The Hittites, Mittani and Egyptians at 1400 BCE

By around 1400 BCE, the Hittites ruled an area corresponding to the Red River (a.k.a. Kizilirmak) basin (map here citing Cambridge Ancient History Vol II Middle East & Aegean Region 1800-1300. I. E. S. Edwards (Ed) et al. as its source).

Adjacent to the Hittites to the Southeast, the Mittani empire, with commoners who spoke a non-Indo-European language called Hurrian and a ruling class that spoke an Indo-European language very close to Sanskrit, ruled the upper Euphrates and Tigris river valley about as far South as modern day Hadithah and Tikrit in Iraq. The Mittani also had a small piece of the Levant, extending Southwest to roughly the modern boundary between Turkey and Syria.

To the South of the Mittani in the East, the Kassite empire ruled the lower Euphrates and Tigris river valleys. The Kassites were a non-Indo-European Hurrian speaking people from the neighboring Zagros mountains to the East of the Mittani. The Kassites wrested power from the Akkadian empire. The Akkadian empire, and its Semitic Akkadian language, in turn, had replaced the non-Indo-European, non-Semitic Sumerian language used before the Akkadian empire emerged.

To the Southwest of the Mittani were the Egyptians. The Egyptians ruled from the Mittani boundary in the Levant to the greater Nile Delta in Northeast Africa.

The Hittites At Their Peak

Half a century later, at its greatest extent, under Kings Suppiluliuma I (c. 1350–1322) and Mursili II (c. 1321–1295), the Hittite Empire included all of Anatolia (including Troy) except the immediate vicinity of modern Istanbul, the Levant from modern Turkey to a little bit North of Beirut on the coast and as far South as what is today Damascus further inland, and the ancient city of Mari, which is situated very close to where the Euphrates river crosses the modern Syrian border. (Map here).

The Hittites had absorbed all of the Mittani empire except some of its lands in the upper Tigris, and extended to the South the Mittani border with Egypt.

The End of the Hittite Empire and the Anatolian languages

A civil war, followed by a series of regional events roughly contemporaneous with the Trojan War of the Greek epics and various historical accounts lumped together as part of the "Bronze Age collapse", destroyed the Hittite empire around 1200 BCE. The Hittite language was replaced by successor languages after the Hittite empire fell.

Following this peak, one of the Anatolian languages was the Luwian language, which may have been the language of the Trojans. Luwian may have actually been a sister language of Hittite and equally old, as attested by its early use as a liturgical language along with pre-Indo-European languages of the area. Luwian may also have been an evolutionary linguistic predecessor to Hittite proper.

Luwian, in turn, evolved into Lycian. See Bryce, Trevor R., "The Lycians - Volume I: The Lycians in Literary and Epigraphic Sources" (1986). Other Anatolian languages, including Lycian, were successors to Hittite that were spoken in Anatolia through the first century BCE. Alexander the Great conquered an area including Anatolia in the fourth century BCE and made Greek, which is a neighboring Indo-European language, the language of his kingdom.

Recap of Anatolian History

All of the Indo-European Anatolian languages (with the possible exception of Luwian) spoken from around 1740 BCE to about 100 BCE, by which time they had been replaced by Greek in the wake of Alexander the Great's conquests, trace their roots to the city-state that the Hittites established by conquering the pre-existing city of Kanesh in central Anatolia.

There is no evidence for the presence of any Indo-European languages in Anatolia prior to about 2000-1800 BCE, and the available historical record seems to indicate that early Anatolian populations of Indo-Europeans were mere pockets of people at the time who may very likely have been recent arrivals.

Armenian is not an Anatolian language and is most closely related linguistically to Greek, but with many non-Indo-European and Indo-Iranian areal influences. Armenian may have arrived in its current location shortly after the fall of the Hittite empire in a folk migration from Western Anatolia, the Aegean, or the Balkans. It is sometimes associated with the Phrygians.

Post-Script: The Tocharians

[This fragment not about the Hittites is also salvaged from this old post.]

The oldest mummies in the Tarim basin of what is now Uyghur China (i.e. in the Xinjiang Uyghur Autonomous Region) in the far Northwest of modern China also date to 1800 BCE.

Pliny the Elder, in Rome, recounts a first century CE report from an ambassador to China from Ceylon who later served as an ambassador to the Roman empire, that corroborates the existence of people with this appearance and a language unlike those known locally.

Monday, February 16, 2015

Quark Masses Matter

Most of the mass in hadrons formed only by up, down and strange quarks comes from the binding energy of their gluon fields, and not from the rest mass of the quarks themselves.

But that doesn't mean that the quark masses don't matter. Lattice QCD studies of a model in which pions have a mass of 300 MeV rather than 135-139 MeV, which implies heavier quark masses than those present in reality, lead to QCD behavior very different from what is observed in real life.

For example, if up and down quarks were heavier than they are, particles made of two neutrons and no protons would be stable, something that isn't the case in the real world.
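To get a rough sense of how much heavier the quarks are in a 300 MeV pion model, here is a minimal sketch. It assumes the leading-order Gell-Mann-Oakes-Renner relation, in which the squared pion mass is proportional to the sum of the up and down quark masses. That relation is not stated in the studies described above and is brought in only for illustration; the function name is likewise made up for this example.

```python
# Rough estimate only: assumes the leading-order Gell-Mann-Oakes-Renner
# relation, m_pi^2 proportional to (m_u + m_d). Higher-order corrections
# are ignored, so treat the result as a ballpark figure.

def quark_mass_scale_factor(m_pi_model_mev, m_pi_physical_mev):
    """Return how many times heavier the light quarks would need to be
    (under the assumed proportionality) to raise the pion mass from its
    physical value to the model value."""
    return (m_pi_model_mev / m_pi_physical_mev) ** 2


if __name__ == "__main__":
    # Physical pion masses quoted above: roughly 135 MeV to 139 MeV.
    for m_pi_physical in (135.0, 139.0):
        factor = quark_mass_scale_factor(300.0, m_pi_physical)
        print(f"m_pi = {m_pi_physical:.0f} MeV -> quark masses about {factor:.1f}x heavier")
```

Under that assumption, a 300 MeV pion corresponds to light quark masses somewhere between four and five times their real world values.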

Thus, binding energy in hadrons depends upon quark masses in a quite sensitive and non-linear way.

ATLAS Excludes CP-Odd Higgs At 220-1000 GeV

In models with two (or more) Higgs doublets, there are five or more Higgs bosons, rather than one as in the Standard Model. The four extra Higgs bosons are customarily called H+, H-, A and h (or H), with H and h being CP-Even Higgs bosons, one heavier and one lighter, and A being a CP-Odd Higgs boson.

The A is excluded with 95% confidence at masses from 220 GeV to 1000 GeV by existing LHC measurements by the ATLAS experiment. Two Higgs doublets are generically present in all SUSY models and in many other non-SUSY models beyond the Standard Model as well.

The 125 GeV Higgs boson is CP-Even as expected.

Meanwhile, the CMS experiment has produced more SUSY exclusions, because there is still not any sign of supersymmetry at the LHC.

Previous discussions of the theoretical and experimental barriers to two Higgs doublet models also disfavor the model, but a two Higgs doublet model has been proposed to explain multiple modest anomalies in LHC data.

Wednesday, February 11, 2015

Reich Paper Offers Wealth Of European Ancient DNA

A pre-print of a new paper by Reich, et al., offers a wealth of new ancient DNA information for Europe, including Y-DNA, mtDNA and autosomal DNA from the Mesolithic era through the late Bronze Age.

Most eagerly awaited is the new Y-DNA data (internal citations omitted):

Conventional wisdom had expected that the Yamnaya people were R1a-M417 bearing men who gave rise to the Corded Ware culture that produced the Y-DNA R1a predominance seen in Central and Eastern Europe today. The genetic evidence tends to favor a NE European rather than SE European proximate source of R1a in Central Europe.

Karelia where the Mesolithic R1a sample was found is in modern day Russia just to the east of Finland.

The autosomal DNA data also provides new insights but is not so easily summarized. Some notable observations: