Tuesday, February 22, 2022

The State of The Harappan Seals Debate

How Southern China Became Chinese

We know a fair amount about the process by which Southern China adopted Han Chinese culture, both in terms of timing and process, because its historical records are quite old. The process in this case was atypical of more familiar historical cases of conquest.

This vignette also illustrates a recurring theme that I try to note: The world in the past was more different from the present than we realize. The Han Chinese cultural core of all of China today was not always so monolithic, even in the historic era.

In Confucius’s day, some 2,500 years ago, very few Chinese lived south of the Yangtze River. Most lived in the North, where Chinese culture and civilization had begun. Back then, the southern part of what is now China belonged to other nationalities, which the Chinese referred to collectively as the Mán, or ‘Southern Barbarians.’ These non-Han people appeared strange and wild to the Chinese, and for Confucius and his contemporaries. . . .Nevertheless, Han Chinese naturally began to migrate into those southern territories . . . a technologically superior people pioneered and colonized an enormous territory, overwhelming scattered and disparate native cultures in the process. . . . the Chinese movement south, by even the most conservative reckoning, took well over a millennium. . . .

In China . . . non-Han peoples and cultures were not so much erased as slowly absorbed. Groups in South China in contact with Han immigrants gave up their original ways of life and became Chinese. They took up Chinese dress, customs, language. They gave up native names in favor of Chinese ones. . . . . Han Chinese immigrants tended to settle in native villages and mingle with people already living there. Men married local women and brought up their children as Han Chinese. Even Han military colonies were not always segregated! South China thus became Chinese gradually through the process of absorption, and the language and culture of the dominant people—the Han Chinese from the North—became the language and culture of all.

From Language Log (the comments note a few instances of linguistic and culinary borrowings from the Southern Chinese people by the Han culture).

Ancient Pict DNA

Renaissance Astronomy

[I]t was painted by the famous Salamancan painter Fernando Gallego (1440-1507), who belonged to the Hispano-Flemish Gothic School. However, the “Sky of Salamanca”, both for its subject matter, analogous to that of certain Italian works of the same period, and for its grandiose proportions — it is a quarter of a sphere 8.70 metres in diameter —, can be considered . . . . a precedent of the Renaissance style.

The abstract of a new paper, and its citation, are as follows:

"El Cielo de Salamanca" ("The Sky of Salamanca") is a quarter-sphere-shaped vault 8.70 metres in diameter. It was painted sometime between 1480 and 1493 and shows five zodiacal constellations, three boreal and six austral. The Sun and Mercury are also represented. It formed part of a three times larger vault depicting the 48 Ptolemaic constellations and the rest of the planets known at the time. This was a splendid work of art that covered the ceiling of the first library of the University of Salamanca, one of the oldest in Europe having obtained its royal charter in 1218. But it was also a pioneering scientific work: a planetarium used to teach astronomy, the first of its kind in the history of Astronomy that has come down to us in the preserved part that we now call "The Sky of Salamanca".

We describe the scientific context surrounding the chair of Astrology founded around 1460 at the University of Salamanca, which led to the production of this unique scientific work of art and to the flourishing of Astronomy in Salamanca. We analyse the possible dates compatible with it, showing that they are extremely infrequent. In the period of 1100 years from 1200 to 2300 that we studied there are only 23 years that have feasible days. We conclude that the information contained in "El Cielo de Salamanca" is not sufficient to assign it to a specific date but rather to an interval of several days that circumstantial evidence seems to place in August 1475. The same configuration of the sky will be observable, for the first time in 141 years, from the 22nd to the 25th of August 2022. The next occasion to observe it live will be in 2060.

Friday, February 18, 2022

Proton Radius Problem Still Solved

The charge radius of the proton (i.e. the radius of the proton measured from its electromagnetic properties) was measured in muonic hydrogen (i.e. a proton with an associated muon instead of an electron) and was found to be about 0.842 fm. This result was 6.9 sigma (about 4%) smaller than the 0.878 fm value from ordinary hydrogen, which was the state of the art global average measurement of the proton charge radius as of 2010, when the muonic hydrogen measurement was made.

Later experiments, conducted with improved methods, found that the proton radius in ordinary hydrogen was consistent with that in muonic hydrogen, as the Standard Model would lead us to expect. The discrepancy had been due to underestimated errors in the older measurements of the charge radius of the proton in ordinary hydrogen. As I explained in 2019:

In a September 19, 2018 post at this blog (about a year ago), entitled "Proton radius problem solved?", I stated:

This would solve one of the major unsolved problems of physics (I have previously put this problem in the top twelve experimental data points needed in physics), called the "proton radius problem" or "muonic hydrogen problem" in which the radius of a proton in a proton-muon system appeared to be 4% smaller than measurements of the radius of a proton in ordinary hydrogen, contrary to the Standard Model. See previous substantive posts on the topic at this blog which can be found on November 5, 2013, April 1, 2013, January 25, 2013, September 6, 2011, and August 2, 2011.

Previously most measurements in ordinary hydrogen favored a larger value for the radius of a proton (the global average of these measurements was 0.8775(51) fm), while the new high precision and more direct measurement in muonic hydrogen found a smaller value for the radius of a proton of 0.84184(67) fm. Now, a new electron measurement devised to rigorously confirm or deny the apparent discrepancy measured a result that agrees with the muon-based measurements.

A new analysis of experimental evidence (also here) from ordinary hydrogen has likewise confirmed that the proton charge radius in ordinary hydrogen is the same as it is in muonic hydrogen.
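As a rough check on the size of the original discrepancy, the two values quoted above can be compared directly. This is only a sketch: it assumes the two uncertainties are independent and Gaussian so that they add in quadrature, and the function and variable names are mine, not from any of the papers.

```python
import math

# Values quoted in this post, in femtometers: central value and 1-sigma uncertainty.
r_electronic, sigma_electronic = 0.8775, 0.0051   # older ordinary hydrogen global average
r_muonic, sigma_muonic = 0.84184, 0.00067         # muonic hydrogen measurement


def tension_in_sigma(a, sigma_a, b, sigma_b):
    """Difference between two measurements in units of their combined
    uncertainty, assuming independent errors added in quadrature."""
    return abs(a - b) / math.hypot(sigma_a, sigma_b)


tension = tension_in_sigma(r_electronic, sigma_electronic, r_muonic, sigma_muonic)
percent_smaller = 100 * (r_electronic - r_muonic) / r_electronic

print(f"tension: {tension:.1f} sigma")
print(f"muonic value is {percent_smaller:.1f}% smaller")
```

Under those assumptions the tension comes out at roughly the 6.9 sigma and 4% figures cited above.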

Galaxy AGC 114905 Probably Isn't That Weird After All

In a nutshell, a recent observation of what appeared to be an outlier, an isolated ultra-diffuse galaxy with no dark matter phenomena, was probably due to the observers underestimating the systematic error in their estimate of the galaxy's inclination relative to Earth, from which we observe it. It probably isn't a weird outlier after all.

A recent paper blogged here found that ultra-diffuse galaxy AGC 114905 appeared to show no signs of dark matter or MOND effects, despite the fact that MOND should have applied since nothing else was close enough to provide an external field effect, and despite the fact that there was no obvious mechanism in a dark matter particle based analysis by which the galaxy could have experienced tidal stripping of dark matter that left it with only stars and interstellar gas.

An analysis of the result by Stacy McGaugh, a leading MOND proponent among astronomers, also blogged here, argued that the systematic error in one of the measurements was greatly underestimated and probably explained the result.

A new paper by J.A. Sellwood and R.H. Sanders, which will be published in the same journal that published the original results, also strongly suggests that this outlier result is probably explained by the same systematic error that McGaugh highlighted as likely being underestimated, and that the authors of the original paper flagged as the biggest factor that could impact their "no dark matter" phenomena hypothesis. Sellwood and Sanders note that, in a hypothesis without dark matter or MOND, and with the inclination of the galaxy relative to an Earth observer within the margin of systematic error stated in the original paper, AGC 114905 would be unstable and fly apart, despite showing no other signs of being an unstable system.

The end result affirms existing paradigms and also illustrates the scientific process, in which scientists critique other scientists until a satisfactory conclusion is reached to explain what we observe.

The paper and its abstract are as follows:

Recent 21 cm line observations of the ultra-diffuse galaxy AGC~114905 indicate a rotating disc largely supported against gravity by orbital motion, as usual. Remarkably, this study has revealed that the form and amplitude of the HI rotation curve is completely accounted for by the observed distribution of baryonic matter, stars and neutral gas, implying that no dark halo is required. It is surprising to find a DM-free galaxy for a number of reasons, one being that a bare Newtonian disk having low velocity dispersion would be expected to be unstable to both axi- and non-axisymmetric perturbations that would change the structure of the disc on a dynamical timescale, as has been known for decades.

We present N-body simulations of the DM-free model, and one having a low-density DM halo, that confirm this expectation: the disc is chronically unstable to just such instabilities. Since it is unlikely that a galaxy that is observed to have a near-regular velocity pattern would be unstable, our finding calls into question the suggestion that the galaxy may lack, or have little, dark matter.

We also show that if the inclination of this near face-on system has been substantially overestimated, the consequent increased amplitude of the rotation curve would accommodate a halo massive enough for the galaxy to be stable.

Wednesday, February 9, 2022

What's New In New World Prehistory?

Raff skillfully reveals how well-dated archaeological sites, including recently announced 22,000-year-old human footprints from White Sands, N.M., are at odds with the Clovis first hypothesis. She builds a persuasive case with both archaeological and genetic evidence that the path to the Americas was coastal (the Kelp Highway hypothesis) rather than inland, and that Beringia was not a bridge but a homeland — twice the size of Texas — inhabited for millenniums by the ancestors of the First Peoples of the Americas.

She eviscerates claims of “lost civilizations” founded on the racist assumption that Indigenous people weren’t sophisticated enough to construct large, animal-shaped or pyramidal mounds and therefore couldn’t have been the first people on the continent.

In fact, the Americas have a number of "lost civilizations" in the sense of collapsed civilizations, albeit home grown and not due to European or Asian influences or to "ancient aliens".

There was a Neolithic civilization in the Amazon that collapsed. The Cahokian culture was an empire that rose and fell, as did the empires of the Mayans, the Aztecs, the glyph building culture of the South American highlands, and the Ancient Puebloan culture, for example.

There is nothing racist about the conclusion that these relatively sophisticated indigenous cultures reached a point from which there was technological and cultural regression for a long period of time causing those cultures to be "lost civilizations" in a fair sense of that phrase.

Thursday, February 3, 2022

We Still Don't Really Understand Scalar Mesons

From the introduction and conclusion of a new short preprint (abstract here) from the STAR collaboration:

Searching for exotic state particles and studying their properties have furthered our understanding of quantum chromodynamics (QCD). Currently the structure and quark content of f(0)(980) are unknown with several predictions being a qq state, a qqqq state, a KK molecule state, or a gluonium state. In contrast to the vector and tensor mesons, the identification of the scalar mesons is a long-standing puzzle. Previous preliminary experimental measurements on the yield of f(0)(980) at RHIC and theoretical calculation suggest that it could be a KK stat[e]. In this analysis, the empirical number of constituent quark (NCQ) scaling is used to investigate the constituent quark content of f0(980). . . .The extracted quark content of f(0)(980) is n(q) = 3.0 ± 0.7 (stat) ± 0.5 (syst). More data are needed to understand whether f(0)(980) is a qq, qqqq, KK molecule, gluonium state, or produced through ππ coalescence.

In other words, we still really have no idea what a well established, common, light scalar meson is made out of, despite decades of experiments and theoretical work by many independent collaborations and researchers. But the authors somewhat favor a KK (kaon-kaon) state. As I note below, this is a sensible suggestion.

What Makes Sense?

Of the leading possibilities identified in the preprint, two of them, the KK molecule, and a qqqq state with a strange quark component, seem to make the most sense as an explanation for the f(0)(980) scalar meson.

A process of elimination analysis to reach that conclusion is as follows.

Not Three Valence Quarks

The "best fit" value of the study, by the way, with three valence quarks (with a combined uncertainty of ± 0.86), can't be correct.

A hadron with three valence quarks couldn't have spin-0 as the f(0)(980) does, although it could have either two or four quarks, which the results do not favor one way or the other.

But, this preprint tends to disfavor either zero valence quarks, or a hexquark interpretation.
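The reasoning above can be made concrete with a few lines of arithmetic. This is a minimal sketch, assuming the statistical and systematic uncertainties are independent and roughly Gaussian so they can be added in quadrature; the names `combined` and `pull` are mine and do not come from the preprint.

```python
import math

# Result quoted from the preprint: n(q) = 3.0 +/- 0.7 (stat) +/- 0.5 (syst).
best_fit = 3.0
stat, syst = 0.7, 0.5

# Combined uncertainty, assuming independent errors added in quadrature.
combined = math.hypot(stat, syst)


def pull(n_quarks):
    """Distance of a candidate valence quark count from the best fit,
    in units of the combined uncertainty."""
    return abs(n_quarks - best_fit) / combined


print(f"combined uncertainty: {combined:.2f}")
for n in (0, 2, 4, 6):
    print(f"n(q) = {n}: {pull(n):.1f} sigma from the best fit")
```

Because the best fit sits exactly between two and four, those two hypotheses are equally close to it, while zero and six quarks are equally far away.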

Not Gluonium a.k.a. a Glueball

The three quark result therefore disfavors a pure gluonium state (with zero valence quarks). Also, a glueball with the quantum numbers of this light scalar meson is predicted by QCD calculations to have a mass of about 1700 MeV, a value inconsistent with the 980 MeV of the f(0)(980), even allowing for a very significant uncertainty in this QCD calculation, which is particularly likely to be accurate since fewer physical constant uncertainties are involved and there is a high degree of symmetry. In contrast, another observed scalar meson, the f(0)(1710), is a much better candidate for a pure or nearly pure glueball.

No Charm, Bottom Or Top Quark Component

The f(0)(980) can't have charm quark or bottom quark components because one such quark is much more massive than the meson as a whole, and can't have a top quark component because top quarks don't hadronize.

The Trouble With A Strangeless qq State

The trouble with a qq state without a strange quark component is that it isn't obvious why it would be so much more massive than a neutral pion, a qq state with no strange quarks, which has a mass of about 135.0 MeV. A charged pion has a mass of 139.6 MeV (2 times which is 279.2 MeV), so even a ππ molecule shouldn't have enough mass-energy to approach 980 MeV.

But pions are the only known mesons with a known valence quark composition without a strange quark component.

There is also the f(0)(500) scalar meson out there, which would be a more likely candidate for a ππ molecule or for a tetraquark with only up and/or down quarks and antiquarks as valence quarks than the f(0)(980).

The Trouble With Three Or More Pions

A ππ coalescence involving more than two pions would have six or more valence quarks, inconsistent with this paper's bottom line conclusion that there are probably either two or four valence quarks.

The Trouble With Strangonium (An ss State)

The only qq state that would make sense in terms of the f(0)(980) scalar meson's mass is strangonium (made up of a strange quark and an anti-strange quark), something which isn't observed experimentally unblended with up and anti-up, as well as down and anti-down components mixed with it.

But, a pure strangonium meson ought to be a pseudoscalar meson rather than a scalar meson, if it existed (i.e. odd rather than even parity), since it is asymmetrical.

The eta prime meson, a pseudoscalar meson with an equal mix of a uu, dd, and ss state, has a mass of 957.8 MeV which is in the right ballpark for the f(0)(980) potentially to have a strange quark component.

The eta prime meson mass (and the kaon mass discussed below) also supports the intuition that a pure strangonium qq state, if it existed, might very well have a mass of more than 980 MeV, although this is not a very firm intuition.

The KK Molecule Possibility

A charged kaon (with an up quark and anti-strange quark or an anti-up quark and a strange quark) has a mass of 493.7 MeV (2 times which would be 987.4 MeV) and a neutral kaon (with a down quark and anti-strange quark or an anti-down quark and a strange quark) has a mass of 497.6 MeV (2 times which would be 995.2 MeV).

So, if a KK molecule had some negative binding energy, these could be in the right mass ballpark.
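The threshold comparison can be sketched as follows. The masses are the ones quoted in this post, and 980 MeV is the nominal mass taken from the meson's name rather than a fitted value; the helper name `threshold_gap` is hypothetical.

```python
F0_980_MASS = 980.0  # MeV, nominal mass taken from the particle's name

# Constituent meson masses in MeV, as quoted in this post.
PAIR_CONSTITUENT_MASSES = {
    "pi+ pi-": 139.6,
    "K+ K-": 493.7,
    "K0 anti-K0": 497.6,
}


def threshold_gap(constituent_mass, bound_mass=F0_980_MASS):
    """Two-particle threshold minus the bound-state mass, in MeV.

    A positive value is the binding energy a molecule of that pair would
    need. A negative value means the pair is lighter than the bound state.
    """
    return 2 * constituent_mass - bound_mass


for name, mass in PAIR_CONSTITUENT_MASSES.items():
    print(f"{name}: threshold {2 * mass:.1f} MeV, gap {threshold_gap(mass):+.1f} MeV")
```

The kaon pairs sit only about 7 MeV and 15 MeV above 980 MeV, which is the sense in which a modestly bound KK molecule is in the right ballpark, while a pion pair falls about 700 MeV short.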

Both charged kaons and the "short" neutral kaon (which is usually observed blended with a "long" neutral kaon that does not decay to pions), like the f(0)(980), often decay to pions. A KK molecule is consistent with this paper's results, as it would also have four valence quarks. Officially the "short" kaon and "long" kaon are listed as having the same mass, but that is partially because the masses of the two states can't be determined experimentally in isolation from each other. If the "short" kaon were actually lighter than the "long" kaon, and an f(0)(980) were a molecule of a "short" neutral kaon and its antiparticle, that could be an even better fit.

A qqqq State With A Strange Quark Component

A tetraquark with the same quark composition as a KK molecule (i.e. a strange quark and an anti-strange quark, together with either an up quark and anti-up quark, or a down quark and an anti-down quark, or perhaps a mixture of both light quark anti-quark pairs), would also have the right number of valence quarks to be consistent with the paper's conclusion. And, the case for a tetraquark to have slightly less mass than a KK molecule is also very plausible.

See also my previous post from October 28, 2021.

Another Problem With The ΛCDM Standard Model Of Cosmology

Every time you turn around, it seems, a new problem with the ΛCDM Standard Model of Cosmology emerges. The latest is that it predicts far too few thin disk galaxies, which are the most common type of galaxies in the real world.

Any viable cosmological framework has to match the observed proportion of early- and late-type galaxies. In this contribution, we focus on the distribution of galaxy morphological types in the standard model of cosmology (Lambda cold dark matter, ΛCDM).

Using the latest state-of-the-art cosmological ΛCDM simulations known as Illustris, IllustrisTNG, and EAGLE, we calculate the intrinsic and sky-projected aspect ratio distribution of the stars in subhalos with stellar mass M∗>10^10M⊙ at redshift z=0. There is a significant deficit of intrinsically thin disk galaxies, which however comprise most of the locally observed galaxy population. Consequently, the sky-projected aspect ratio distribution produced by these ΛCDM simulations disagrees with the Galaxy And Mass Assembly (GAMA) survey and Sloan Digital Sky Survey at ≥12.52σ (TNG50-1) and ≥14.82σ (EAGLE50) confidence.

The deficit of intrinsically thin galaxies could be due to a much less hierarchical merger-driven build-up of observed galaxies than is given by the ΛCDM framework.

It might also arise from the implemented sub-grid models, or from the limited resolution of the above-mentioned hydrodynamical simulations. We estimate that an 85 times better mass resolution realization than TNG50-1 would reduce the tension with GAMA to the 5.58σ level.

Finally, we show that galaxies with fewer major mergers have a somewhat thinner aspect ratio distribution. Given also the high expected frequency of minor mergers in ΛCDM, the problem may be due to minor mergers.

In this case, the angular momentum problem could be alleviated in Milgromian dynamics (MOND) because of a reduced merger frequency arising from the absence of dynamical friction between extended dark matter halos.

Wednesday, February 2, 2022

Simple Dark Matter Models Don't Work

Galactic rotation curves exhibit a diverse range of inner slopes. Observational data indicates that explaining this diversity may require a mechanism that correlates a galaxy's surface brightness with the central-most region of its dark matter halo.

In this work, we compare several concrete models that capture the relevant physics required to explain the galaxy diversity problem. We focus specifically on a Self-Interacting Dark Matter (SIDM) model with an isothermal core and two Cold Dark Matter (CDM) models with/without baryonic feedback. Using rotation curves from 90 galaxies in the Spitzer Photometry and Accurate Rotation Curves (SPARC) catalog, we perform a comprehensive model comparison that addresses issues of statistical methodology from prior works. The best-fit halo models that we recover are consistent with the Planck concentration-mass relation as well as standard abundance matching relations.

We find that both the SIDM and feedback-affected CDM models are better than a CDM model with no feedback in explaining the rotation curves of low and high surface brightness galaxies in the sample. However, when compared to each other, there is no strong statistical preference for either the SIDM or the feedback-affected CDM halo model as the source of galaxy diversity in the SPARC catalog.

Galactic rotation curves have historically served as a powerful tool for understanding the distribution of dark and visible matter in the Universe. With recent catalogs of rotation curves that span a wide range of galaxy masses, we now have the unique opportunity to test the consistency of dark matter (DM) models across a broad population of galaxies. One important observation that must be accounted for by any DM model is the diversity of galactic rotation curves and their apparent correlation with the baryonic properties of a galaxy. In this work, we use the Spitzer Photometry & Accurate Rotation Curves (SPARC) catalog to critically assess the preference for models of collisionless Cold DM (CDM) with and without feedback, and for self-interacting DM (SIDM).

The diversity problem is the simple observation that the rotation curves of spiral galaxies exhibit a diverse range of inner slopes. Specifically, high surface brightness galaxies typically have more steeply rising rotation curves than do their low surface brightness counterparts, even if both have similar halo masses. This behavior cannot easily be explained if halo formation is self-similar, for example if the halo is modeled using the standard Navarro-Frenk-White (NFW) profile. Within the CDM framework, two galaxies with similar halo masses will have comparable total stellar mass and concentration. If no feedback mechanism is invoked, this sets the properties of the DM profile, typically modeled as an NFW profile, such as its scale radius and overall normalization. The inner slope of the halo density is insensitive to baryons and is fixed at a constant value. In this scenario, therefore, the halo model has little flexibility to account for correlations with the surface brightness of the galaxy.

One way to address galaxy diversity within the CDM framework is to use baryonic feedback to inject energy into the halo and redistribute DM to form larger cores near the halo’s center. For example, repetitive star bursts have been demonstrated to effectively produce cores in the brightest dwarf galaxies. For less massive dwarfs, such feedback is not strong enough to efficiently remove a galaxy’s inner-most mass. For larger systems, the galaxies are so massive that feedback is insufficiently powerful to create coring and profiles similar to NFW are preserved. Baryonic feedback processes therefore link the properties of a galaxy’s DM density profile to its baryonic content, as needed to explain the galaxy diversity, and provide improved fits to rotation curve data.

An alternate approach to address the galaxy diversity problem is to change the properties of the DM model itself. A well-known example is that of SIDM. If DM can self interact, heat transfer in the central regions of the halo is efficient, creating an isothermal region within the density profile. This central, isothermal profile is exponentially dependent on the baryonic potential of the galaxy. While a DM-dominated system will form a core, a baryon-dominated system will retain a more cuspy center, similar to that of an NFW profile. Thus, in the SIDM framework, the size of the core naturally correlates with the baryonic surface brightness of the galaxy, allowing for a mechanism that can account for galaxy diversity. Since the time-scale for forming the isothermal core is shorter than the time-scale for baryonic feedback, this mechanism is mostly insensitive to the details of feedback processes.

Tuesday, February 1, 2022

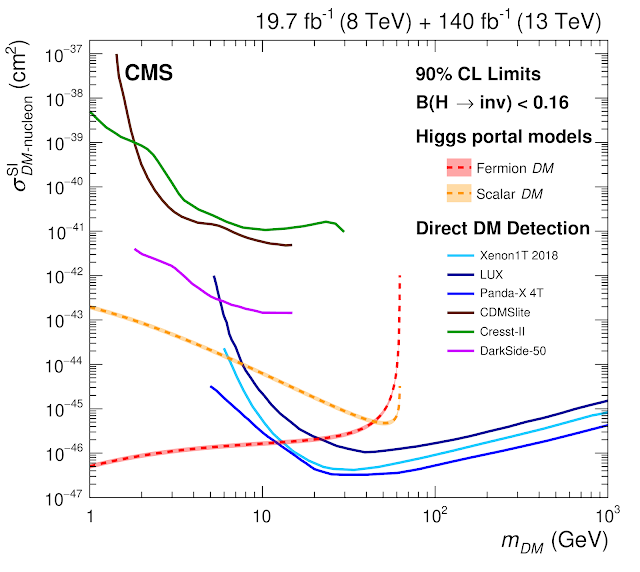

No Sign Of Higgs-Portal Dark Matter

The CMS experiment at the Large Hadron Collider (LHC) has found no evidence for Higgs boson decays into non-Standard Model particles that are "invisible" to its detectors such as dark matter (inferred from conservation of mass-energy and momentum in Higgs boson decays). The ATLAS experiment concurs in results it released in April of last year.

So, if dark matter exists, it probably does not get its mass via the Higgs mechanism the way that fundamental particles in the Standard Model (with the possible exception of neutrinos) do.

There is still a lot that the experiments conducted so far can't rule out in a model independent fashion, not because there is any sign that such particles are present, but because the measurements just aren't precise enough so far.

But, if a beyond the Standard Model particle had a coupling with the Higgs boson, called a Yukawa coupling, proportional to its rest mass, as the other Standard Model particles do, this new particle would have a profound impact on the relative proportion of all of the decay products of a Higgs boson that are observed. Those proportions are largely a function of the mass of the Higgs boson, the masses of the Standard Model fundamental particles, and their spins (quarks and leptons have spin 1/2 and Standard Model fundamental bosons other than the Higgs boson have spin 1).

This plays out to produce the chart below for various hypothetical Higgs boson masses, which shows the percentage of decays which are of a particular type on the Y-axis and the mass of the Higgs boson on the X axis (with the real Higgs boson having a mass of about 125 GeV).

Several of the decay channels of the Higgs boson have been observed and are tolerably close to the expected values in the chart above (specifically decays to ZZ, two photons, WW, bb, two tau leptons, and two muons). Lines don't appear for the decays of a Higgs boson to quark-antiquark pairs for strange, down and up quarks, to muon-antimuon pairs, or to electron-positron pairs, because such decays are expected to be profoundly more rare than the decays depicted due to their small rest masses.

So, while a Higgs boson could have up to 18% invisible decays that are unaccounted for in a model-independent analysis, the observed decays actually place far stricter limitations upon what couplings to the Higgs boson a "vanilla" new particle could have, and as a result, on what its Higgs field coupling derived mass could be. For example, if the new "invisible" particle had a mass of 2 GeV or more, it would profoundly throw off the branching fractions of b quarks, c quarks and tau leptons from the Higgs boson.
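To get a feel for the scale involved, here is a rough sketch using the textbook leading-order formula for a Higgs boson decaying to a fermion pair with a coupling proportional to the fermion's mass. It makes several simplifying assumptions that are mine: the new particle is treated as a colorless Dirac fermion, the quark masses are fixed rather than running, and QCD corrections (which reduce the real b and c quark widths considerably) are ignored. The function name `tree_level_width` is hypothetical.

```python
import math

G_F = 1.1663787e-5  # Fermi constant in GeV^-2
M_H = 125.0         # Higgs boson mass in GeV, as in this post


def tree_level_width(m_f, n_colors=1, m_h=M_H):
    """Leading-order width in GeV for H -> f fbar, assuming a Standard
    Model style Yukawa coupling proportional to the fermion mass m_f (GeV).
    Ignores running masses and higher-order corrections."""
    if 2 * m_f >= m_h:
        return 0.0  # decay is forbidden by mass-energy conservation
    beta = math.sqrt(1 - 4 * m_f**2 / m_h**2)
    return n_colors * G_F * m_f**2 * m_h * beta**3 / (4 * math.sqrt(2) * math.pi)


# Fixed illustrative masses in GeV; quarks come in three colors.
channels = {
    "b quark": tree_level_width(4.18, n_colors=3),
    "c quark": tree_level_width(1.27, n_colors=3),
    "tau lepton": tree_level_width(1.777),
    "hypothetical 2 GeV invisible fermion": tree_level_width(2.0),
}

for name, width in channels.items():
    print(f"{name}: {1000 * width:.3f} MeV")
```

In this naive picture a 2 GeV invisible fermion would have a partial width somewhat larger than that of the tau lepton, so it would take a noticeable share of decays away from the observed channels. A careful treatment would use the full corrected widths rather than this leading-order estimate.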

The exclusion is strongest for fermion (e.g. spin-1/2 or spin-3/2) dark matter or other beyond the Standard Model fermions that couple to the Higgs boson in the standard way in the mass range from significantly less than the charm quark rest mass (about 1.27 GeV) up to half of the Higgs boson mass (about 62.5 GeV).

It is also significant for spin-1 bosons with masses similar to the 80-90 GeV masses of the W and Z bosons, although even a light spin-1 boson that derived its mass from the Higgs field should have been enough to throw the other branching fractions off significantly.

But, the exclusion from Higgs boson decay data so far isn't particularly strong for fermion dark matter or other beyond the Standard Model fermions that couple to the Higgs boson in the standard way with masses of less than or equal to the mass of strange quarks and muons (i.e. less than about 100 MeV and probably significantly less given the dimuon channel decays identified by the CMS experiment) which would occur in less than 0.1% of Higgs boson decays.

The exclusion also isn't particularly strong for dark matter particles with more than half of the Higgs boson mass (i.e. more than 63 GeV), which would be mass-energy conservation prohibited in Higgs boson decay end states. But this exclusion on the high mass end of possible new particle masses is also conservatively low, because particles more massive than that which couple to the Higgs field can still leave a detectable effect in collider experiments.

So, the LHC based exclusions for Higgs portal dark matter are powerful in disfavoring a supersymmetric WIMP candidate with a mass in the range of 1 GeV to 1000 GeV that direct detection experiments also powerfully disfavor.

But the LHC isn't very useful in ruling out fermion dark matter candidates that are electromagnetically neutral and don't interact via the strong force (which other experiments also strongly rule out over a much larger mass range), but do interact in the usual way with the Higgs field, that have masses of significantly less than 100 MeV, a mass range in which direct detection experiments (whose sensitivity falls off dramatically below 1 GeV) are also not very powerful at ruling out dark matter candidates.

For example, neither the LHC nor the direct detection experiments are helpful in ruling out a warm dark matter candidate with a mass in the 1-20 keV range favored by astronomy observations, even if it gets its mass from the Higgs field and has weak force interactions.

Also, the LHC provides no insight regarding, and can't confirm or deny the existence of, dark matter particle candidates that don't interact at all via any of the three Standard Model forces and have mass due to mechanisms other than their interactions with the Higgs field.

The source for this analysis above is as follows (via Sabine Hossenfelder's twitter feed):

The physicists in the CMS collaboration considered recently the complete data collected during the Run 2 operations of the LHC and extensively searched for presence of events corresponding to invisibly decaying Higgs boson. However there was no hint for the presence of any signal, only the known stuff, the backgrounds of the expected amounts! Too bad, indeed.

The negative result, however, is very important for our efforts in understanding the properties of the Higgs boson. It showed that the Higgs boson cannot decay invisibly more often than in 18% of the cases. This number is actually quite large, when compared to the decay into, say a pair of photons which is easy to identify in the experiment, but occurs only about twice in thousand decays. The LHC experiments are looking forward eagerly to having more data to see if Nature does allow such "invisible" decays of the Higgs boson, but are simply more rare. Not the least at all, such results from the LHC are actually interpreted in terms of interaction probability of dark matter with ordinary matter. This depends on the mass of the dark matter particle and thus absence of "invisible" decay of the Higgs boson rules out the existence of such particles in a complementary way to the dedicated searches for dark matter in non-accelerator experiments.

Another Reason The Strong Force Doesn't Have CP Violation

Background: The Strong CP Problem

One of the "fake" unsolved problems of physics is why the strong force doesn't have charge parity (CP) violation like the weak force does, even though the equations of quantum chromodynamics that govern the strong force have an obvious place to put such a term, with the experimentally measured parameter of CP violation in the strong force commonly called "theta".
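For reference, the "obvious place" for such a term is usually written as shown below. This is the standard textbook form rather than something taken from the preprint discussed here, and sign and normalization conventions vary between references. Here $g_s$ is the strong coupling constant, $G^{a}_{\mu\nu}$ is the gluon field strength tensor, and $\tilde{G}^{a\,\mu\nu}$ is its dual.

```latex
\mathcal{L}_{\theta}
  = \theta \, \frac{g_s^{2}}{32\pi^{2}} \, G^{a}_{\mu\nu} \tilde{G}^{a\,\mu\nu},
\qquad
\tilde{G}^{a\,\mu\nu} = \tfrac{1}{2} \, \epsilon^{\mu\nu\rho\sigma} G^{a}_{\rho\sigma}
```

Setting theta to zero removes this term entirely, which is the case the rest of this post argues for.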

I call it a "fake" problem because the existence of theta that is exactly equal to zero doesn't cause any tensions with observational data or lead to inconsistencies in the equations. It is simply a case of Nature making a different choice about what its laws are than a few presumptuous theoretical physicists think it should have.

For some reason, according to an utterly counterproductive hypothesis called "naturalness", theoretical physicists seem to think that all possible dimensionless parameters of fundamental physics should have values with an order of magnitude similar to 1, and think it is "unnatural" when they don't, unless there is some good reason for this not to be the case. When an experimentally measured parameter has some other value (at least if it is non-zero), this parameter is called "fine-tuned" and is assumed to be a problem that needs to be explained somehow, usually with New Physics.

The introduction to the new paper discussed below recaps the strong CP problem as follows:

There are a couple of ways that people concerned about the "strong CP problem" imagine could resolve it.

One is that the issue would disappear if the up quark had a mass of zero, although experimental data fairly conclusively establish that up quarks have a non-zero mass.

Another is to add a beyond the Standard Model particle called "the axion" which is very, very low in mass (a tiny fraction of an electron-volt).

My "go to" explanation, in contrast, has been that since gluons are massless, they don't experience the passage of time. Thus, the strong force shouldn't have a parameter that is sensitive to the direction of time, which its carrier boson does not experience, because CP violation is equivalent to saying that a process behaves differently going forward and backward in time.
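The first step of that argument rests on a standard special relativity relation, sketched below. Here $\tau$ is the proper time experienced along a particle's path, $t$ is the time measured by an outside observer, and $v$ is the particle's speed. Applying the relation to gluons in this way is my heuristic, not an established result.

```latex
d\tau = dt \, \sqrt{1 - \frac{v^{2}}{c^{2}}},
\qquad
v \to c \;\Longrightarrow\; d\tau \to 0
```

A massless particle always travels at $c$, so no proper time elapses along its path.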

Background: Confinement

One of the most characteristic observational aspects of the strong force is that quarks and gluons are always confined in composite structures known as hadrons, except (1) in the case of top quarks, which decay so quickly that they don't have time to hadronize (and are only produced in high energy particle collider environments), and (2) in quark-gluon plasma states, which are produced in even more extreme high temperature systems. This observational reality is called "confinement."

Without confinement, our world would be profoundly different than it is today.

As it is, in our post-Big Bang energies era, strong force phenomena are contained in many dozens of kinds of hadrons.

In reality, however, the only hadrons that ever arise in our low energy world, because the others take immense energies to create and then decay rapidly, are protons (which are stable), neutrons (which are stable in bound atoms and last about fifteen minutes as free particles), and a few short-lived mesons (quark-antiquark bound structures) like the pion and kaon, which are carriers of the nuclear binding force between protons and neutrons in nuclei, but generally don't escape an individual atomic nucleus before decaying.
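The "about fifteen minutes" figure for free neutrons is a mean lifetime, so it describes exponential decay rather than a fixed expiry time. A minimal Python sketch follows, assuming a mean lifetime of roughly 880 seconds (an illustrative round number, not a precise measurement); the function names are hypothetical.

```python
import math

# Assumed mean lifetime of a free neutron in seconds (roughly 880 s, i.e. the
# "about fifteen minutes" quoted in the text). Illustrative, not a precise value.
NEUTRON_MEAN_LIFETIME_S = 880.0


def surviving_fraction(elapsed_s: float,
                       mean_lifetime_s: float = NEUTRON_MEAN_LIFETIME_S) -> float:
    """Fraction of an initial sample that has not decayed after elapsed_s seconds."""
    if elapsed_s < 0:
        raise ValueError("elapsed_s must be non-negative")
    return math.exp(-elapsed_s / mean_lifetime_s)


def half_life_s(mean_lifetime_s: float = NEUTRON_MEAN_LIFETIME_S) -> float:
    """Time after which half of the sample remains: mean lifetime times ln(2)."""
    return mean_lifetime_s * math.log(2)


for minutes in (5, 15, 60):
    fraction = surviving_fraction(minutes * 60)
    print(f"After {minutes:>2} min: {fraction:.1%} of free neutrons remain")
print(f"Half-life: {half_life_s() / 60:.1f} min")
```

Under this assumption, about a third of a sample of free neutrons is still around after one mean lifetime, and almost none after an hour.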

These light mesons also come up in a few other sub-collider energy contexts outside nuclear physics, for example, in cosmic rays, that slightly affect the normal workings of the universe in the post-Big Bang energies era. But, due to confinement, we never directly encounter the unmediated strong force in Nature (this is one of the reasons that it was the last force of nature to be discovered).

A New Solution To The Strong CP "Problem"

A new preprint uses lattice QCD methods to suggest another reason that there is no CP violation in the strong force, without a need to resort to axions, and to argue that such axions should not exist.

It suggests that if theta were non-zero and there were CP violation in the strong force, confinement wouldn't happen. Therefore, the theta term in the strong force equations must be zero, and the hypothetical axion cannot exist.

Three hard problems!

In this talk I investigate the long-distance properties of quantum chromodynamics in the presence of a topological theta term. This is done on the lattice, using the gradient flow to isolate the long-distance modes in the functional integral measure and tracing it over successive length scales.

It turns out that the color fields produced by quarks and gluons are screened, and confinement is lost, for vacuum angles theta > 0, thus providing a natural solution of the strong CP problem. This solution is compatible with recent lattice calculations of the electric dipole moment of the neutron, while it excludes the axion extension of the Standard Model.