The Past

Modern physics started a little more than a century ago in the early 1900s.

The physics of the period immediately before that, often called "classical physics," is still taught in universities as a classical approximation of the more fundamental physical laws of modern physics.

Classical physics consists of Newtonian mechanics, Newtonian gravity, and calculus, dating to the late 1600s; Maxwell's equations of electromagnetism and classical optics; the laws of thermodynamics and their derivation from statistical mechanics; fluid mechanics; and the proton-neutron-electron model of the atom exemplified in the Periodic Table of the Elements.

Modern physics starts with a scientific understanding of radioactive decay, special relativity, general relativity, and quantum mechanics, which were developed between about 1896 and 1930. It also includes nuclear physics and neutrino physics, which began in earnest in the 1930s, and the Standard Model of Particle Physics and hadron physics, which were formulated in their modern form in the early 1970s, with the first member of the third generation of fermions, the tau lepton, discovered in 1975. Various extensions beyond the Standard Model mostly date to the 1980s or later, even though the first hints of some of them were considered earlier. The top quark was discovered in 1995, neutrino oscillation (and therefore nonzero neutrino mass) was confirmed in 1998, and the tau neutrino was directly observed in 2000. With the discovery of the Higgs boson in 2012, every particle predicted by the Standard Model of Particle Physics had been observed. Subsequent research has confirmed that the particle discovered is a good match to the Standard Model Higgs boson.

Some of the notable developments in Standard Model physics since 2012 have been the experimental exclusion of many extensions of the Standard Model over ever-increasing ranges of energies and parameter spaces; the discovery of many hadrons predicted by the Standard Model, including tetraquarks and pentaquarks, together with measurements of their properties; progress in calculating parton distribution functions from first principles; and refinement of our measurements of the couple of dozen experimentally determined physical constants of the Standard Model.

Modern physics also includes modern astrophysics and cosmology. The Big Bang theory and the concept of black holes both grew out of general relativity. Dark matter phenomena were first observed, and neutron stars were first proposed, in the 1930s; neutron stars were first observed in 1967, and the first observation of a black hole was in 1971. Cosmological inflation hypotheses date to 1980. The possibility of dark energy phenomena was built into general relativity through the cosmological constant, but dark energy wasn't observationally confirmed until 1998. The LambdaCDM model of cosmology was proposed in the mid-1990s and became the paradigm once dark energy was confirmed. Quantum gravity hypotheses and hypotheses to explain baryogenesis and leptogenesis have seen serious development mostly since 1980, although early hypotheses along these lines have been around since the inception of modern physics.

The Present

As of 2024, modern physics is dominated by the "core theories" of special relativity, general relativity, and the Standard Model of Particle Physics. None of them has been clearly contradicted by observational evidence, after more than a century of testing in the case of relativity and half a century in the case of the Standard Model. Since its original formulation, the Standard Model has been refined only to expand it to exactly three generations of fermions and to attempt to integrate neutrino mass and neutrino oscillation.

On one hand, there are many areas of wide consensus in modern physics. Special relativity has been exhaustively confirmed experimentally and observationally. The predictions of general relativity, including the Big Bang, black holes, strong-field behavior in contexts like the dynamics of massive binary systems and compact objects, the precession of Mercury's orbit, and frame dragging in the solar system, have been observationally confirmed to high precision. Half a century of high energy physics experiments and of cosmic ray and neutron star observations have never definitively contradicted the Standard Model (apart from expanding it to exactly three generations of fermions and adding massive neutrinos), and have confirmed a myriad of its predictions to exquisite precision.

There are a variety of open questions and matters of ongoing investigation in modern physics, however.

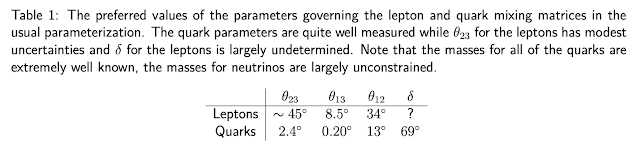

Two important phenomena predicted by the Standard Model, sphaleron interactions and glueballs (hadrons made entirely of gluons), have not yet been observed. We still don't know the absolute masses of the neutrino mass eigenstates, whether they follow a "normal" or an "inverted" mass hierarchy, the octant of one of the neutrino mixing angles, anything more than the vaguest estimate of the CP-violating phase among the seven potentially measurable neutrino physical constants (three masses, three mixing angles, and one CP-violating phase), or the mechanism by which neutrino mass arises. We've seen patterns in the experimentally measured parameters of the Standard Model, but have no solid theory to explain their values.

While we can predict the "spectrum" of pseudoscalar and vector mesons and of three-quark baryons, with their properties, for the most part, we are still struggling to explain the observed spectrum of scalar and axial vector mesons. We are also in the early stages of working out the spectrum of possible four- and five-quark hadrons, including both true four- and five-quark bound states and "hadron molecules." And we can't really predict, a priori, why a particular handful of light pseudoscalar and vector mesons are blends of valence quark combinations rather than having a single definite quark content, although we can explain their structures after the fact. While parton distribution functions can in principle be calculated from first principles in the Standard Model, we've only managed to actually do that, somewhat crudely and in only a few special cases, in the last few years.

Investigation of just what triggers wave function collapse, how quantum entanglement works, the extent to which virtual particles and quantum tunneling must obey special and general relativity, and the correct "interpretation" of quantum mechanics is ongoing. At an engineering level, we are in the very early days of developing quantum computers.

We have a good working model of the residual strong force that binds nucleons in an atomic nucleus, but we have not derived all of nuclear physics, or even the residual strong force, from the first principles of quantum chromodynamics (QCD), the Standard Model theory of the strong force. While we understand the principles behind sustainable nuclear fusion power generation, we don't have the engineering realization of it quite worked out. We have mapped out the periodic table of the elements and isotopes all the way to quite ephemeral elements and isotopes that are only created synthetically, but we can't confidently predict whether there are as yet undiscovered elements in an island of stability. We are on the brink of mastering condensed matter physics issues like how to create high-temperature superconductors and the structure and equation of state of neutron stars.

The search for deviations from the Standard Model in high energy physics has been relentless, particularly since the top quark's discovery in 1995 left the Higgs boson as the last major missing piece. Mostly this has been a tale of crushed dreams. Experiments have ruled out huge portions of the parameter space of supersymmetry, multiple Higgs doublet theories, technicolor, leptoquarks, preon theories, fourth or greater generation fermion theories, all manner of grand unified theories of particle physics, non-standard neutrino interactions, and sterile neutrinos. They have also found no sign of proton decay, neutron-antineutron oscillation, tree-level flavor-changing neutral currents, neutrinoless double beta decay or other affirmative evidence for Majorana neutrinos, lepton flavor violation, or lepton unitarity violations. No dark matter candidates have been observed experimentally. We have even ruled out, observationally, changes in many of the Standard Model's fundamental constants over a lookback time of many billions of years.

There have been some statistically significant, but tiny, discrepancies between the experimentally measured value of the anomalous magnetic moment of the muon and the value predicted by the Standard Model, although this increasingly looks like the product of errors in calculating the predicted value rather than evidence of new physics. Most experimental anomalies suggesting new particles have been ruled out. There have been some very weak experimental hints of a 17 MeV particle (X17), which may have other explanations, and of an electromagnetically neutral second scalar Higgs boson at about 95 GeV; neither has been fully ruled out, but neither is likely to amount to anything. There have also been weak experimental hints, to some extent mutually inconsistent between different experiments, of one or two possible "sterile neutrinos" (which could also be dark matter candidates). The experimentally measured anomalous magnetic moment of the electron isn't a perfect fit to its theoretically predicted value, although it is very close. The experimentally measured couplings of the Standard Model Higgs boson aren't a perfect fit to the theoretical predictions, although they agree reasonably well within experimental uncertainties. While early hints of lepton universality violations were ruled out when the experimental data improved, there are still some minor lepton flavor ratio anomalies out there. Still, there is every reason to think that these anomalies won't last and that the particle content of the Standard Model is complete, with the possible exceptions of one or more particles involved in the mechanism that generates neutrino masses, a massless or nearly massless graviton, and one or more dark matter candidates with properties that make them almost impossible to detect in a particle collider.

Thus, while there are a few details and implementations left to work out, the Standard Model, high energy physics, and nuclear physics are close to being complete. We don't expect any new discoveries in this area to reveal anything deeply wrong with what we know now, although, if we are really lucky, more research and greater precision might give us a deeper understanding of why the Standard Model has the properties that it does.

The situation in astrophysics and cosmology is much less settled. While the handful of particle colliders on the planet provide a trickle of new high energy physics data in some very narrow extensions of existing parameter space, a host of new "telescopes," in the broad sense of the term, is providing torrents of new astronomy observations that our existing modern physics theories struggle to explain. The leading paradigm in astrophysics and cosmology, the LambdaCDM "Standard Model of Cosmology," is a dead man walking, surviving mostly for lack of a consensus on what to replace it with.

"Telescopes" aren't just visible light telescopes on the surface of the Earth anymore, and even those are vastly improved. Modern "telescopes," based both on Earth and in space, see the entire electromagnetic spectrum, from ultra-low-frequency radio waves to ultra-high-energy gamma rays, at extremely high resolution. We have neutrino "telescopes." We have "cosmic ray detectors," which observe non-photon particles that rain down on Earth from space. We have gravitational wave "telescopes" that have made many important discoveries, including the observation of many intermediate-size black holes. In some cases we can do "multi-messenger astronomy," which combines signals from multiple kinds of telescopes that come from a single event. This has placed strong bounds on both the speed of neutrinos and the speed of gravitational waves, both of which match the predictions of special and general relativity.

We have overwhelming observational evidence of dark matter phenomena. But we have no dark matter particle candidate, and no gravity-based explanation for dark matter phenomena, that fits all of the data and has secured wide acceptance, although we have an ever-growing graveyard of ruled-out explanations for dark matter, like MACHOs, primordial black holes, supersymmetric WIMPs, and cold dark matter particles that form NFW halos. Attempts to explain dark matter through the gravitomagnetic effects of general relativity in galaxies have also failed. And there are dozens of distinct types of observations contrary to the predictions of LambdaCDM. Fairly sophisticated comparisons of predictions with observations strongly disfavor warm dark matter candidates and thermal-relic, GeV-mass-scale self-interacting dark matter candidates. QCD axions and QCD-bound exotic hadrons are also largely ruled out.

Toy model MOND can't be the exclusive explanation for dark matter phenomena, and neither can some of its relativistic generalizations like TeVeS. Still, MOND is a very simple theory that explains almost all dark matter phenomena observed up to the galaxy scale with a single new parameter, and with some mild generalizations it has explained the cosmic microwave background (CMB) observations and the impossible early galaxies problem. But while MOND may be on the right track, it doesn't quite get many features of galaxy clusters right (although a similar scaling law can work there), and it has trouble with some out-of-plane features of spiral galaxies. The data on wide binaries, which would distinguish between a variety of dark matter particle theories and gravitational theories of dark matter phenomena, are currently inconclusive and have received contradictory interpretations.

A variety of gravitational explanations of dark matter phenomena are promising, however, as are dark matter particle theories with extremely low mass dark matter candidates, like axion-like particles, which have wave-like properties.

We have inconsistent measurements of the Hubble constant (the so-called "Hubble tension"). The Hubble constant is one of the two observations that feed into our estimate of the amount of dark energy in a simple LambdaCDM model, in which dark energy is just a specific constant value of the cosmological constant. The inconsistency suggests that the cosmological constant, lambda, may not actually be constant.
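To give a sense of how large the Hubble constant discrepancy is, here is a minimal sketch that expresses the gap between two measurements in units of their combined uncertainty. It assumes independent Gaussian errors that add in quadrature, and the inputs are approximate published figures for the SH0ES distance-ladder result (about 73.04 ± 1.04 km/s/Mpc) and the Planck CMB-based LambdaCDM inference (about 67.4 ± 0.5 km/s/Mpc). The function name `tension_sigma` is illustrative, not from any particular analysis package.

```python
import math


def tension_sigma(value_a: float, err_a: float, value_b: float, err_b: float) -> float:
    """Discrepancy between two measurements in units of their combined 1-sigma error.

    Assumes both errors are Gaussian and uncorrelated, so they add in quadrature.
    """
    combined_error = math.sqrt(err_a**2 + err_b**2)
    return abs(value_a - value_b) / combined_error


# Approximate published H0 values in km/s/Mpc (illustrative inputs, not a fit):
# a local distance-ladder measurement and a CMB-based LambdaCDM inference.
h0_distance_ladder = (73.04, 1.04)
h0_cmb = (67.4, 0.5)

sigma = tension_sigma(*h0_distance_ladder, *h0_cmb)
print(f"Hubble tension: {sigma:.1f} sigma")
```

With these inputs the gap comes out at roughly five standard deviations, which is why it is usually treated as a genuine tension rather than a statistical fluke. Correlated or underestimated systematic errors in either measurement would change that figure.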

The allowed parameter space for cosmological inflation continues to narrow with the non-detection of primordial gravitational waves at ever greater precision. There are strong, but not conclusive, indications that the universe may be anisotropic and inhomogeneous even at the largest scales, contrary to the prevailing cosmological paradigm.

There is no widely accepted, satisfactory answer to the question of baryogenesis and leptogenesis. However, improvements in the precision and energy reach of tests of the Standard Model, along with improved astronomy observations, increasingly push any meaningful baryogenesis and leptogenesis closer to the Big Bang; at this point, any significant change in the aggregate baryon number and lepton number of the universe is pretty much pushed into the first microsecond after the Big Bang.

None of the unknowns in astrophysics and cosmology have any real practical engineering implications. But the Overton window of possibilities that are being seriously investigated in these fields is vastly broader than in high energy physics or quantum mechanics.

The Future

The good news is that the torrent of astronomy data pouring in from many independent research groups is providing the data we need to more definitively rule out or confirm various competing hypotheses in astrophysics and cosmology. We have a lot of shiny new tools, both in the form of many different kinds of vastly improved "telescopes" and in the form of profoundly improved computational power and artificial intelligence tools to analyze this vast amount of new data. These should allow scientific advances in these fields to be driven by observations rather than by groupthink or the sociology of the discipline, even though it may take the deaths of a generation or two of astrophysicists for the field to fully free itself from outdated ideas that are no longer supported by the data.

Even if we don't reach a consensus around a new observationally supported and theoretically consistent paradigm in my remaining lifetime of two or three decades, I am very hopeful that we will do so within the lifetimes of my children and of my grandchildren-to-be.

I have strong suspicions about what the new paradigm will look like.

Dark matter phenomena will most likely be explained by a gravitational mechanism with strong similarities to the work of Alexandre Deur, whether or not his precise attempt to derive his conclusions from non-perturbative general relativity effects holds up. The consensus will also probably be that quantum gravity does exist, although it may take quite a while to prove that with observations.

Also, like Deur, and unlike the vast majority of other explanations, I think that the ultimate explanation for dark energy phenomena will conserve mass-energy both globally and locally and will not be a true physical constant. The cosmological constant of general relativity will probably ultimately be abandoned even though it is a reasonable first order approximation of what we observe. This will also mean that the aggregate mass-energy of the universe at any given time will be finite and conserved; it will be a non-zero boundary condition at t=0 of the Big Bang.

Thus, I suspect that a century from now, the consensus will be that there are no dark matter particles and no dark energy substance, and that both were just products of theoretical misconceptions, akin to the epicycles used to explain the motions of the planets before it was discovered that those motions could be explained, to all the precision available at the time, by Newtonian gravity.

It will take time, but I expect that cosmological inflation will ultimately be ruled out.

I expect that new advances in astrophysics will rule out faster-than-light travel, faster-than-light information transfer, and wormholes.

I don't think that we will have a conclusive explanation of baryogenesis and leptogenesis, even within my grandchildren's lives, but I do think that a mirror universe hypothesis, positing an antimatter universe before the Big Bang in which time runs in the opposite direction, will become one of the leading explanations. This is because I think that we will make significantly more progress toward ruling out new high energy physics, and any possibility of matter creation, in periods closer and closer to the Big Bang. This will rule out the generation of non-zero baryon number and lepton number in increasingly short time frames immediately after, although not at, the Big Bang.

I think that there are even odds that we will discover that sphaleron interactions are physically impossible in any physically possible scenario, despite their theoretical possibility in the Standard Model, possibly because of a maximum local mass-energy density.

In the area of high energy physics, I don't expect any new particles to be discovered (apart from possible evidence for the existence of a massless graviton), and I expect the Standard Model to stand the test of time. I do expect some refinements of our theories of wave function collapse and quantum interpretations. I don't expect new high energy physics at higher energies. I don't expect that we will find new heavy elements in islands of stability.

I expect that neutrinos will be found to have Dirac rather than Majorana masses, somehow made possible without new particles despite the current lack of a clear path to doing so. Most likely, our understanding of neutrino mass and of the Higgs field Yukawa couplings of the Standard Model particles will involve a dynamical balancing of the Higgs vev between particles that can transform into each other via W boson interactions, as opposed to giving such a central role to the coupling of these particles to a Higgs boson. Self-interactions with the fields to which the various fundamental particles couple will also play a role in generating their masses.

I expect that, as our measurements of the fundamental particle masses get more precise, the sum of the squares of the masses of the fundamental particles will indeed be found to equal the square of the Higgs vev. Once this is confirmed, vast amounts of ink will be spilled over why the fundamental bosons account for slightly more than half of the squared Higgs vev, while the fundamental fermions account for slightly less than half of it. This could reduce the number of free, independently experimentally measured mass and coupling constant parameters of the Standard Model from seventeen (fifteen masses plus three coupling constants, minus one for the electroweak relationship between the W and Z boson masses and the coupling constants) to eight or fewer (three coupling constants, the W boson mass, two fermion masses, and two lepton masses).
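The sum-of-squares conjecture above is simple arithmetic to check against current data. The sketch below sums the squares of approximate measured masses and compares the result to the square of the Higgs vev (about 246.22 GeV). The mass values are approximate central values used purely for illustration: they carry no uncertainties, the quark masses are scheme-dependent, and neutrino, photon, and gluon masses are treated as zero. The function names are hypothetical.

```python
# Approximate central values in GeV (illustrative inputs; quark masses are
# scheme-dependent, and neutrino, photon, and gluon masses are treated as zero).
BOSON_MASSES_GEV = {
    "Higgs": 125.25,
    "Z": 91.1876,
    "W": 80.377,
}
FERMION_MASSES_GEV = {
    "top": 172.69,
    "bottom": 4.18,
    "charm": 1.27,
    "strange": 0.0934,
    "down": 0.00467,
    "up": 0.00216,
    "tau": 1.77686,
    "muon": 0.1056584,
    "electron": 0.000511,
}
HIGGS_VEV_GEV = 246.22


def sum_of_squares(masses: dict[str, float]) -> float:
    """Sum of squared masses, in GeV^2."""
    return sum(m * m for m in masses.values())


def vev_check(bosons: dict[str, float], fermions: dict[str, float], vev: float):
    """Return (sum of squares / vev^2, boson share of the sum, fermion share of the sum)."""
    boson_sq = sum_of_squares(bosons)
    fermion_sq = sum_of_squares(fermions)
    total = boson_sq + fermion_sq
    return total / vev**2, boson_sq / total, fermion_sq / total


ratio, boson_share, fermion_share = vev_check(
    BOSON_MASSES_GEV, FERMION_MASSES_GEV, HIGGS_VEV_GEV
)
print(f"sum of m^2 / v^2 = {ratio:.4f}")
print(f"boson share = {boson_share:.4f}, fermion share = {fermion_share:.4f}")
```

With these inputs the ratio comes out just under one, within about one percent, and the W, Z, and Higgs bosons account for slightly more than half of the total. Whether the remaining gap is meaningful depends on the measurement uncertainties and on which top quark mass definition is used, neither of which this sketch accounts for.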

I expect that we will have a good first principles explanation of the full spectrum of observed hadrons and that we will have first principles calculations of all of their parton distribution functions.

I expect that we will be able to work out the residual strong force that binds nucleons exactly from first principles, and that we will be able to use quantum computers to show that there are no undiscovered islands of stability in heavy elements or isotopes.

There are even odds that we will discover a deeper explanation for the values of the parameters in the CKM and PMNS matrices by sometime in my grandchildren's lives, and better than even odds that we will be able to reduce the number of free parameters in those two matrices to fewer than the current eight. I wouldn't be surprised if the CP-violating parameters of the CKM and PMNS matrices were found to have a source independent of the other six parameters of those matrices.
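The "current eight" follows from a standard counting argument for unitary mixing matrices, sketched below. An n × n unitary matrix has n² real parameters, n(n−1)/2 of which are rotation angles; rephasing the 2n fermion fields removes 2n − 1 of the remaining phases, leaving (n−1)(n−2)/2 physical CP-violating phases. The sketch assumes Dirac neutrinos, so the PMNS matrix counts the same way as the CKM matrix; Majorana neutrinos would add two more phases. The function name is illustrative.

```python
def mixing_parameters(n: int) -> tuple[int, int]:
    """Physical (angles, phases) of an n x n unitary mixing matrix with Dirac fermions.

    An n x n unitary matrix has n^2 real parameters: n(n-1)/2 rotation angles
    and n(n+1)/2 phases. Rephasing the 2n fermion fields removes 2n - 1 of the
    phases (one overall phase has no effect), leaving (n-1)(n-2)/2 physical phases.
    """
    angles = n * (n - 1) // 2
    phases = (n - 1) * (n - 2) // 2
    return angles, phases


ckm_angles, ckm_phases = mixing_parameters(3)
# Assumes Dirac neutrinos, so the PMNS matrix counts like the CKM matrix;
# Majorana neutrinos would add two more physical phases.
pmns_angles, pmns_phases = mixing_parameters(3)
total = ckm_angles + ckm_phases + pmns_angles + pmns_phases

print(f"CKM: {ckm_angles} angles + {ckm_phases} CP-violating phase")
print(f"PMNS (Dirac): {pmns_angles} angles + {pmns_phases} CP-violating phase")
print(f"total free parameters: {total}")
```

The same count explains the split in the text: two CP-violating phases plus six mixing angles, for eight parameters in all.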

I don't think that we will develop a Lie group Grand Unified Theory or Theory of Everything, or that string theory will ever work, although I do think that we are more likely than not to develop a theory of quantum gravity that can be integrated into the Standard Model more cleanly. Indeed, gravitationally based dark matter phenomena may turn out to be a quantum gravity effect that is actually absent from classical general relativity.