Wide binary stars are used to test the modified gravity theory called Scalar-Tensor-Vector Gravity, or MOG. This theory is based on additional gravitational degrees of freedom: the scalar field G = GN(1 + α), where GN is Newton's constant, and the massive (spin-1 graviton) vector field ϕμ. The wide binaries have separations of 2-30 kAU. The MOG acceleration law, derived from the MOG field equations and the equations of motion of a massive test particle for weak gravitational fields, depends on the enhanced gravitational constant G = GN(1 + α) and the effective running mass μ. The magnitude of α depends on the physical length scale, or averaging scale, ℓ of the system. The modified MOG acceleration law for weak gravitational fields predicts that gravitational dynamics in the solar system and in wide binary star systems follows Newton's law.
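To make the abstract's point concrete, here is a minimal Python sketch of the weak-field MOG acceleration law, assuming the form commonly quoted in Moffat's earlier MOG papers, a(r) = (GN M / r²)[1 + α − α e^(−μr)(1 + μr)]. The α and μ values below are hypothetical illustrations, not fitted values from the paper. The sketch only shows that the correction factor goes to 1 (Newtonian) when α is small or when μr ≪ 1, and approaches 1 + α when μr ≫ 1.

```python
import math

G_N = 6.674e-11   # Newton's constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30  # solar mass, kg
AU = 1.496e11     # astronomical unit, m


def newton_accel(mass_kg, r_m):
    """Newtonian acceleration magnitude G_N M / r^2."""
    return G_N * mass_kg / r_m**2


def mog_factor(r_m, alpha, mu_per_m):
    """Assumed MOG weak-field correction: 1 + alpha - alpha * exp(-mu r) * (1 + mu r)."""
    x = mu_per_m * r_m
    return 1.0 + alpha - alpha * math.exp(-x) * (1.0 + x)


def mog_accel(mass_kg, r_m, alpha, mu_per_m):
    """Weak-field MOG acceleration magnitude under the assumed form above."""
    return newton_accel(mass_kg, r_m) * mog_factor(r_m, alpha, mu_per_m)


# Hypothetical illustration: a 10 kAU binary of two solar masses, mu chosen so mu*r = 1.
r = 10_000 * AU
mu = 1.0 / r
for alpha in (0.0, 1e-3, 1.0):
    ratio = mog_accel(2 * M_SUN, r, alpha, mu) / newton_accel(2 * M_SUN, r)
    print(f"alpha = {alpha:g}: MOG / Newton = {ratio:.5f}")
```

With α of order 10⁻³ the MOG/Newton ratio differs from 1 only in the fourth decimal place, which is the sense in which a small scale-dependent α makes wide binaries "basically Newtonian".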

Thursday, November 30, 2023

Wide Binaries Are Basically Newtonian in Moffat's MOG Theory

Monday, November 20, 2023

A Newly Discovered Milky Way Satellite Star Cluster

Wednesday, November 15, 2023

Are Sunspots Driven By The Gravitational Pull Of The Planets?

The sunspot number record covers over three centuries. These numbers measure the activity of the Sun. This activity follows the solar cycle of about eleven years.

In dynamo theory, the interaction between differential rotation and convection produces the solar magnetic field. On the surface of the Sun, this field is concentrated in sunspots. Dynamo theory predicts that the period, the amplitude and the phase of the solar cycle are stochastic.

Here we show that the solar cycle is deterministic, and connected to the orbital motions of the Earth and Jupiter. This planetary-influence theory allows us to model the whole sunspot record, as well as the near past and the near future of sunspot numbers. We may never be able to predict the exact times of exceptionally strong solar flares, like the catastrophic Carrington event in September 1859, but we can estimate when such events are more probable. Our results also indicate that during the next decades the Sun will no longer help us to cope with climate change. The inability to find predictability in some phenomenon does not prove that this phenomenon itself is stochastic.

Friday, November 10, 2023

A Nifty New Telescope

Nestled in the mountains of Northern India is a 4-metre rotating dish of liquid mercury. Over a 10-year period, the International Liquid Mirror Telescope (ILMT) will survey 117 square degrees of sky, to study the astrometric and photometric variability of all detected objects. . . .

A perfect reflective paraboloid represents the ideal reference surface for an optical device to focus a beam of parallel light rays to a single point. This is how astronomical mirrors form images of distant stars in their focal plane. In this context, it is amazing that the surface of a liquid rotating around a vertical axis takes the shape of a paraboloid under the constant pull of gravity and centrifugal acceleration, the latter growing stronger at distances further from the central axis. The parabolic surface occurs because a liquid always sets its surface perpendicular to the net acceleration it experiences, which in this case is increasingly tilted and enhanced with distance from the central axis. The focal length F is proportional to the gravitational acceleration g and inversely proportional to the square of the angular velocity ω (specifically, F = g/(2ω²)). In the case of the ILMT, the angular velocity ω is about 8 turns per minute, resulting in a focal length of about 8 m. Given the action of the optical corrector, the effective focal length f of the D = 4 m telescope is about 9.44 m, resulting in a fast focal ratio of f/D ≈ 2.4. Thus, in the ILMT, a thin rotating layer of mercury naturally focuses the light from a distant star at its focal point located ∼8 m above the mirror, with the natural constraint that such a telescope always observes at the zenith. Thanks to the rotation of the Earth, the telescope scans a strip of sky centred at a declination equal to the latitude of the observatory (+29°21′41.4″ for the ARIES Devasthal observatory). The angular width of the strip is about 22′, a size limited by that of the detector (4k×4k) used in the focal plane of the telescope. Since the ILMT observes the same region of the sky night after night, it is possible either to co-add the images taken on different nights in order to improve the limiting magnitude or to subtract images taken on different nights to make a variability study of the corresponding strip of sky.
Consequently, the ILMT is very well suited to performing variability studies of the strip of sky it observes. While the ILMT mirror is rotating, the linear speed at its rim is about 5.6 km/h, i.e., the speed of a walking person.
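The numbers in this passage hang together, and a short Python sketch makes the arithmetic explicit. It assumes the standard result that a liquid rotating at angular velocity ω takes the shape z = ω²r²/(2g), whose focal length is F = g/(2ω²). It also uses standard gravity rather than the local value at Devasthal, and treats the observed strip as a full 360° band of constant width. The function names are illustrative.

```python
import math

G_STD = 9.80665  # standard gravity, m/s^2 (local g at Devasthal differs slightly)


def focal_length_m(omega_rad_s, g=G_STD):
    """Focal length of the rotating-liquid paraboloid z = omega^2 r^2 / (2 g)."""
    return g / (2.0 * omega_rad_s**2)


def omega_for_focal_length(f_m, g=G_STD):
    """Angular velocity (rad/s) needed for a given focal length."""
    return math.sqrt(g / (2.0 * f_m))


def turns_per_minute(omega_rad_s):
    return omega_rad_s * 60.0 / (2.0 * math.pi)


def rim_speed_kmh(omega_rad_s, diameter_m):
    """Linear speed at the rim, converted from m/s to km/h."""
    return omega_rad_s * (diameter_m / 2.0) * 3.6


def strip_area_sq_deg(dec_deg, width_arcmin):
    """Approximate area of a narrow full-circle strip centred on declination dec_deg."""
    return 360.0 * math.cos(math.radians(dec_deg)) * (width_arcmin / 60.0)


omega = omega_for_focal_length(8.0)
dec = 29 + 21 / 60 + 41.4 / 3600  # +29°21'41.4" in decimal degrees
print(f"rotation:   {turns_per_minute(omega):.2f} turns/min")
print(f"rim speed:  {rim_speed_kmh(omega, 4.0):.2f} km/h")
print(f"f/D:        {9.44 / 4.0:.2f}")
print(f"strip area: {strip_area_sq_deg(dec, 22.0):.0f} sq deg")
```

Run as written, it gives about 7.5 turns per minute for an 8 m focal length (the text's "about 8"), a rim speed of about 5.6 km/h, f/D ≈ 2.36, and a strip of roughly 115 square degrees, close to the 117 square degrees quoted for the survey.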

A liquid mirror naturally flows into the precise paraboloid shape it needs, simply because it is a liquid under these conditions. And, since its surface is always dynamically readjusting itself to this shape, rather than being fixed in place once as a solid mirror's would be, any slight imperfections in its surface that do deviate from the paraboloid don't stay in exactly the same place. Instead, distortions from slight imperfections in the shape of the liquid mirror average out over multiple observations of the same part of the sky, to an average shape at any one location that is much closer to perfect than a solid mirror cast ultra-precisely just once. Thus, a liquid mirror reduces one subtle source of potential systematic error: slight imperfections in a solid mirror's shape at particular locations, which recur every time a particular part of the sky is viewed.

A New Top Quark Pole Mass Analysis And A Few Wild Conjectures Considered

A new paper re-analyzes existing data to determine the top quark pole mass. It comes up with:

which is consistent with, but at the low end of, the range of the Particle Data Group's estimate based upon indirect cross-section measurements (bringing these to the same level of precision as its direct measurements of the top quark mass):

The global LP&C relationship (i.e. that the sum of the squares of the fundamental SM particle masses is equal to the square of the Higgs vev, which is equivalent to saying that the sum of the SM particle Yukawas is exactly 1) currently falls about 0.5% short of its predicted value. That is a 2 sigma tension in the top quark and Higgs boson masses, implying a theoretically expected top quark mass of 173,360 MeV and a theoretically expected Higgs boson mass of 125,590 MeV if the adjustments were proportioned to the experimental uncertainty in the LP&C relationship given the current global average measurements, in which about 70% of the uncertainty is from the top quark mass uncertainty and about 29% is from the Higgs boson mass uncertainty. . . . But the LP&C relationship does not hold separately for fermions and bosons, which would be analogous in some ways to supersymmetry; this is only an approximate symmetry. The sum of the squares of the SM fundamental boson masses exceeds half of the square of the Higgs vev by about 0.5% (3.5 sigma of Higgs boson mass, implying a theoretically expected Higgs boson mass of about 124,650 MeV), while the sum of the squares of the SM fundamental fermion masses falls short of half of the square of the Higgs vev by about 1.5% (about 2.8 sigma of top quark mass, implying a theoretically expected top quark mass of about 173,610 MeV). The combined deviation from the LP&C relationship holding for fermions and bosons independently is 4.5 sigma, a very strong tension that nearly conclusively rules out that version of the hypothesis. One wonders if the slightly boson-leaning deviation from this symmetry between fundamental fermion masses and fundamental boson masses has some deeper meaning or source.
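The fermion/boson split described in this passage can be checked with a few lines of Python. The masses below are approximate PDG-style values assumed here for illustration (they are not taken from the post), mixing pole and MS-bar quark masses; neutrino masses are neglected and the photon and gluons are massless, so treat the output as a rough check only.

```python
# Rough check of the LP&C sums; all masses in GeV, approximate values assumed here.
V_HIGGS = 246.22  # Higgs vacuum expectation value

BOSONS = {"W": 80.377, "Z": 91.1876, "H": 125.25}
FERMIONS = {
    "t": 172.69, "b": 4.18, "c": 1.27, "s": 0.093, "d": 0.00467, "u": 0.00216,
    "tau": 1.77686, "mu": 0.10566, "e": 0.000511,
}  # neutrinos neglected; photon and gluons are massless


def sum_of_squares(masses):
    """Sum of squared masses, in GeV^2."""
    return sum(m * m for m in masses.values())


v_sq = V_HIGGS ** 2
total_ratio = (sum_of_squares(BOSONS) + sum_of_squares(FERMIONS)) / v_sq
boson_ratio = sum_of_squares(BOSONS) / (v_sq / 2)
fermion_ratio = sum_of_squares(FERMIONS) / (v_sq / 2)

print(f"all particles / v^2:  {total_ratio:.4f}")
print(f"bosons / (v^2 / 2):   {boson_ratio:.4f}")
print(f"fermions / (v^2 / 2): {fermion_ratio:.4f}")
```

With these inputs the three ratios come out near 0.995, 1.005 and 0.985, matching the roughly 0.5% overall shortfall, 0.5% boson excess and 1.5% fermion deficit described in the passage.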

The result in this new paper, by largely corroborating direct measurements of the top quark mass at similar levels of precision, continues to favor a lower top quark mass than the one favored by LP&C expectations.

Earlier, lower-energy Tevatron measurements of the top quark mass (the Tevatron is where the top quark was discovered) supported a higher top quark mass than the combined data from either Large Hadron Collider (LHC) experiment does, and were closely in line with the LP&C expectation.

But there is no good reason to think that both of the LHC experiments measuring the top quark mass have greatly understated the systematic uncertainties in their measurements (which combine measurements from multiple channels with overlapping but not identical sources of systematic uncertainty). And the LHC experiments have far more top quark events to work with than the two Tevatron experiments measuring the top quark mass did, so the statistical uncertainties of the LHC measurements are undeniably much lower than those of the Tevatron measurements, which makes them more precise.

Could This Hint At Missing Fundamental Particles?

If I were a phenomenologist prone to proposing new particles, I'd say that this close but not quite right fit to the LP&C hypothesis was a theoretical hint that the Standard Model might be missing one or more fundamental particles, probably fermions (which deviate most strongly from the expected values).

I'll explore some of those possibilities, because readers might find them to be interesting. But, to be clear, I am not prone to proposing new particles, indeed, my inclinations are quite the opposite. I don't actually think that any of these proposals is very well motivated.

In part, this is because I think that dark matter phenomena and dark energy phenomena are very likely to be gravitational issues rather than due to dark matter particles or dark energy bosonic scalar fields, so I don't think we need particles to serve that purpose. Dark matter phenomena would otherwise be the strongest motivation for a new beyond the Standard Model fundamental particle.

I am being lenient in not pressing some of the more involved arguments from the data and theoretical structure of fundamental physics that argue against the existence of many of these proposed beyond the Standard Model fundamental particles.

This is so, even though the LP&C hypothesis is beautiful, plausible, and quite close to the experimental data, so it would be great to be able to salvage it somehow if the top quark mass and Higgs boson mass end up being close to or lower than their current best fit estimated values.

Beyond The Standard Model Fundamental Fermion Candidates

If the missing fermion were a singlet, LP&C would imply an upper bound on its mass of about 3 GeV.

This could be a good fit for a singlet sterile neutrino that gets its mass via the Higgs mechanism and then transfers its own mass to the three active neutrinos via a see-saw mechanism.

It could also be a good fit for a singlet spin-3/2 gravitino in a supersymmetry-inspired model in which only the graviton, and not the ordinary Standard Model fermions and bosons, has a superpartner.

A largely sterile singlet gravitino and a singlet sterile neutrino have both been proposed as cold dark matter candidates. At masses under 3 GeV, the bounds on their cross-sections of interaction aren't as tight as those on heavier WIMP candidates. (This matters because they would need some self-interaction or weak to feeble interaction with ordinary matter, since purely sterile dark matter that only interacts via gravity isn't a good fit for the astronomy data.) And the constraints on a WIMP dark matter particle's cross-section of interaction from direct dark matter detection experiments get even weaker fairly rapidly between 3 GeV and 1 GeV, which is the mass range the LP&C conjecture would hint at if either the current best fit value for the top quark mass or the current best fit value for the Higgs boson mass were a bit light.

The LP&C conjecture isn't a useful hint towards (or against) a massive graviton, however, because the experimental upper bounds on the graviton mass are some 32 orders of magnitude or more too small to be discernible by that means.

If there were three generations of missing fermions, you'd expect them to have masses about two-thirds higher than the charged lepton masses, with the most massive one still close to 3 GeV, the second-generation one at about 176 MeV, and the first-generation one at about 0.8 MeV. But these masses could be smaller if the best fit values for the top quark mass and/or Higgs boson mass end up rising somewhat as the uncertainties in those measurements fall.
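The arithmetic behind these estimates can be sketched in Python, assuming (as in the text) that the missing triplet must fill the same squared-mass budget as a single ~3 GeV fermion, about 9 GeV², and that its masses scale in proportion to the charged leptons. The helper name and the lepton mass values are illustrative assumptions.

```python
import math

# Charged lepton masses in GeV (e, mu, tau), used only as a mass-ratio template.
CHARGED_LEPTONS = (0.000511, 0.10566, 1.77686)


def scaled_triplet(budget_gev2, template=CHARGED_LEPTONS):
    """Scale template masses by one common factor k so their squares sum to budget_gev2.

    Hypothetical helper for illustration.
    """
    k = math.sqrt(budget_gev2 / sum(m * m for m in template))
    return k, [k * m for m in template]


k, masses = scaled_triplet(3.0 ** 2)  # budget of a single ~3 GeV singlet
print(f"scale factor k = {k:.2f}")
for label, m in zip(("first", "second", "third"), masses):
    print(f"{label} generation: {m * 1000:.1f} MeV")
```

This gives a scale factor of about 1.69 and masses of roughly 0.86 MeV, 178 MeV and 3.0 GeV, in line with the rounded figures in the text.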

These masses for a missing fermion triplet might fit a leptoquark model of the kind that has been proposed to explain B meson decay anomalies. The experimental motivation for leptoquarks was stronger before the apparent experimental evidence of violations of the Standard Model principle of charged lepton universality (i.e. that the three charged leptons have precisely the same properties except their masses) evaporated once the data analysis of the seemingly anomalous B meson decay results was improved. But there are still some lesser and subtle anomalies in B meson decays that didn't go away with this improved data analysis, and that could motivate similar leptoquark models.

If there were two missing fermions of similar masses (or two missing fermion triplets with their sum of square masses dominated by the third-generation particle of each triplet), this would suggest a missing fundamental particle mass on the order of up to about 2 GeV each.

A model with two missing fermion triplets might make sense if there were two columns of missing leptoquarks, instead of one, just as there are two columns of quarks (up type and down type) and two columns of leptons (charged leptons and neutrinos), in the Standard Model.

Wednesday, November 8, 2023

More On Wide Binaries, MOND and Deur

We test Milgromian dynamics (MOND) using wide binary stars (WBs) with separations of 2−30 kAU. Locally, the WB orbital velocity in MOND should exceed the Newtonian prediction by ≈20% at asymptotically large separations given the Galactic external field effect (EFE).

We investigate this with a detailed statistical analysis of Gaia DR3 data on 8611 WBs within 250 pc of the Sun. Orbits are integrated in a rigorously calculated gravitational field that directly includes the EFE. We also allow line of sight contamination and undetected close binary companions to the stars in each WB. We interpolate between the Newtonian and Milgromian predictions using the parameter αgrav, with 0 indicating Newtonian gravity and 1 indicating MOND.

Directly comparing the best Newtonian and Milgromian models reveals that Newtonian dynamics is preferred at 19σ confidence. Using a complementary Markov Chain Monte Carlo analysis, we find that αgrav = −0.021 (+0.065/−0.045), which is fully consistent with Newtonian gravity but excludes MOND at 16σ confidence. This is in line with the similar result of Pittordis and Sutherland using a somewhat different sample selection and less thoroughly explored population model.

We show that although our best-fitting model does not fully reproduce the observations, an overwhelmingly strong preference for Newtonian gravity remains in a considerable range of variations to our analysis.

Adapting the MOND interpolating function to explain this result would cause tension with rotation curve constraints. We discuss the broader implications of our results in light of other works, concluding that MOND must be substantially modified on small scales to account for local WBs.
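As a rough consistency check on the quoted MCMC result, the following Python sketch measures how far αgrav = 1 (MOND) and αgrav = 0 (Newton) lie from the best fit, in units of the one-sided error bar on the relevant side. Treating each side of the asymmetric error bars as Gaussian is an assumption, and much cruder than the paper's own analysis, but it reproduces the quoted ~16σ.

```python
BEST, UP, DOWN = -0.021, 0.065, 0.045  # alpha_grav best fit and its +/- errors


def sigma_distance(best, err_up, err_down, target):
    """Distance from best fit to target, in units of the error bar on target's side.

    Assumes each side of the asymmetric posterior is roughly Gaussian.
    """
    if target >= best:
        return (target - best) / err_up
    return (best - target) / err_down


print(f"MOND (alpha_grav = 1):   {sigma_distance(BEST, UP, DOWN, 1.0):.1f} sigma")
print(f"Newton (alpha_grav = 0): {sigma_distance(BEST, UP, DOWN, 0.0):.1f} sigma")
```

MOND sits about (1 + 0.021)/0.065 ≈ 15.7σ from the best fit, while Newtonian gravity is well within 1σ.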

The immense diversity of the galaxy population in the universe is believed to stem from their disparate merging histories, stochastic star formation, and the multi-scale influences of filamentary environments. No single initial condition of the early universe was ever expected to explain, on its own, how the galaxies formed and evolved to end up possessing such varied traits as they have at the present epoch. However, several observational studies have revealed that the key physical properties of the observed galaxies in the local universe appear to be regulated by one single factor, the identity of which has remained shrouded in mystery to date.

Here, we report on our success in identifying the single regulating factor as the degree of misalignment between the initial tidal field and protogalaxy inertia momentum tensors. The spin parameters, formation epochs, stellar-to-total mass ratios, stellar ages, sizes, colors, metallicities and specific heat energies of the galaxies from the IllustrisTNG suite of hydrodynamic simulations are all found to be almost linearly and strongly dependent on this initial condition, when the differences in galaxy total mass, environmental density and shear are controlled to vanish. The cosmological predispositions, if properly identified, turn out to be much more impactful on galaxy evolution than conventionally thought.
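The abstract does not define its misalignment measure, so the following Python sketch uses one generic choice, assumed here for illustration and not necessarily the authors' definition: one minus the normalized Frobenius inner product of the traceless parts of the two symmetric 3×3 tensors. It is 0 when the traceless parts are proportional (perfect alignment) and reaches 2 when they are exactly anti-aligned.

```python
import math


def traceless(t):
    """Return the traceless part of a symmetric 3x3 tensor (list of lists)."""
    mean = sum(t[i][i] for i in range(3)) / 3.0
    return [[t[i][j] - (mean if i == j else 0.0) for j in range(3)] for i in range(3)]


def frobenius(a, b):
    """Frobenius inner product sum_ij a_ij * b_ij."""
    return sum(a[i][j] * b[i][j] for i in range(3) for j in range(3))


def misalignment(tidal, inertia):
    """1 - normalized inner product of traceless parts; 0 = aligned, 2 = anti-aligned.

    Hypothetical measure for illustration; undefined if either tensor is isotropic.
    """
    a, b = traceless(tidal), traceless(inertia)
    return 1.0 - frobenius(a, b) / math.sqrt(frobenius(a, a) * frobenius(b, b))


T = [[1.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 3.0]]
I_same = [[2.0, 0.0, 0.0], [0.0, 4.0, 0.0], [0.0, 0.0, 6.0]]     # same axes, same pattern
I_swapped = [[3.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 1.0]]  # major/minor axes swapped
print(misalignment(T, I_same), misalignment(T, I_swapped))
```

Because only the traceless parts enter, the measure ignores overall scale and isotropic offsets and responds only to how the principal axes and anisotropy patterns of the two tensors line up.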

Monday, October 30, 2023

The Pre-Columbian Pacific Coast

A Global Map Of The Last Glacial Maximum (And Dingos)

The earliest known dingo remains, found in Western Australia, date to 3,450 years ago. Based on a comparison of modern dingoes with these early remains, dingo morphology has not changed over thousands of years. This suggests that no artificial selection has been applied over this period and that the dingo represents an early form of dog. They have lived, bred, and undergone natural selection in the wild, isolated from other dogs until the arrival of European settlers, resulting in a unique breed.

In 2020, an mtDNA study of ancient dog remains from the Yellow River and Yangtze River basins of southern China showed that most of the ancient dogs fell within haplogroup A1b, as do the Australian dingoes and the pre-colonial dogs of the Pacific; this haplogroup occurs only at low frequency in China today. The specimen from the Tianluoshan archaeological site, Zhejiang province, dates to 7,000 YBP (years before present) and is basal to the entire haplogroup A1b lineage. The dogs belonging to this haplogroup were once widely distributed in southern China, then dispersed through Southeast Asia into New Guinea and Oceania, but were replaced in China by dogs of other lineages 2,000 YBP.

The oldest reliable date for dog remains found in mainland Southeast Asia is from Vietnam at 4,000 YBP, and in Island Southeast Asia from Timor-Leste at 3,000 YBP. In New Guinea, the earliest dog remains date to 2,500–2,300 YBP from Caution Bay near Port Moresby, but no ancient New Guinea singing dog remains have been found. The earliest dingo remains in the Torres Straits date to 2,100 YBP.

The earliest dingo skeletal remains in Australia are estimated at 3,450 YBP from the Madura Caves on the Nullarbor Plain, south-eastern Western Australia; 3,320 YBP from Woombah Midden near Woombah, New South Wales; and 3,170 YBP from Fromme's Landing on the Murray River near Mannum, South Australia.

Dingo bone fragments were found in a rock shelter located at Mount Burr, South Australia, in a layer that was originally dated 7,000-8,500 YBP. Excavations later indicated that the levels had been disturbed, and the dingo remains "probably moved to an earlier level."

The dating of these early Australian dingo fossils led to the widely held belief that dingoes first arrived in Australia 4,000 YBP and then took 500 years to disperse around the continent. However, the timing of these skeletal remains was based on the dating of the sediments in which they were discovered, and not the specimens themselves.

In 2018, the oldest skeletal bones from the Madura Caves were directly carbon dated between 3,348 and 3,081 YBP, providing firm evidence of the earliest dingo and that dingoes arrived later than had previously been proposed. The next-most reliable timing is based on desiccated flesh dated 2,200 YBP from Thylacine Hole, 110 km west of Eucla on the Nullarbor Plain, southeastern Western Australia. When dingoes first arrived, they would have been taken up by indigenous Australians, who then provided a network for their swift transfer around the continent. Based on the recorded distribution time for dogs across Tasmania and cats across Australia once indigenous Australians had acquired them, the dispersal of dingoes from their point of landing until they occupied continental Australia is proposed to have taken only 70 years. The red fox is estimated to have dispersed across the continent in only 60–80 years.

At the end of the last glacial maximum and the associated rise in sea levels, Tasmania became separated from the Australian mainland 12,000 YBP, and New Guinea 6,500–8,500 YBP by the inundation of the Sahul Shelf. Fossil remains in Australia date to around 3,500 YBP and no dingo remains have been uncovered in Tasmania, so the dingo is estimated to have arrived in Australia at a time between 3,500 and 12,000 YBP. To reach Australia through Island Southeast Asia even at the lowest sea level of the last glacial maximum, a journey of at least 50 kilometres (31 mi) over open sea between ancient Sunda and Sahul was necessary, so they must have accompanied humans on boats.

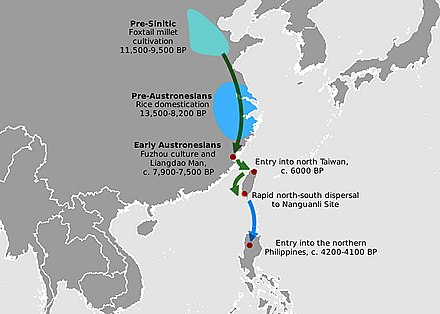

Some best estimates of Austronesian migration are as follows:

Wednesday, October 18, 2023

An Old Specimen Of Homo Erectus

With new paleomagnetic dating results and some description of recently excavated material, Mussi and coworkers show that levels D, E, and F are between 2.02 million and 1.95 million years old. Most interesting is that level D includes what is now the earliest Acheulean assemblage in the world, and level E has produced the partial jaw of a very young Homo erectus individual. The Garba IVE jaw is now one of the two earliest H. erectus individuals known anywhere, in a virtual tie with the DNH 134 cranial vault from Drimolen, South Africa.

Tuesday, October 17, 2023

Modern Human Introgression Into Neanderthal DNA

Highlights

• Anatomically modern human-to-Neanderthal introgression occurred ∼250,000 years ago
• ∼6% of the Altai Neanderthal genome was inherited from anatomically modern humans
• Recent non-African admixture brought Neanderthal ancestry to some African groups
• Modern human alleles were deleterious to Neanderthals

Summary

Comparisons of Neanderthal genomes to anatomically modern human (AMH) genomes show a history of Neanderthal-to-AMH introgression stemming from interbreeding after the migration of AMHs from Africa to Eurasia.

All non-sub-Saharan African AMHs have genomic regions genetically similar to Neanderthals that descend from this introgression. Regions of the genome with Neanderthal similarities have also been identified in sub-Saharan African populations, but their origins have been unclear.

To better understand how these regions are distributed across sub-Saharan Africa, the source of their origin, and what their distribution within the genome tells us about early AMH and Neanderthal evolution, we analyzed a dataset of high-coverage, whole-genome sequences from 180 individuals from 12 diverse sub-Saharan African populations.

In sub-Saharan African populations with non-sub-Saharan African ancestry, as much as 1% of their genomes can be attributed to Neanderthal sequence introduced by recent migration, and subsequent admixture, of AMH populations originating from the Levant and North Africa.

However, most Neanderthal homologous regions in sub-Saharan African populations originate from migration of AMH populations from Africa to Eurasia ∼250 kya, and subsequent admixture with Neanderthals, resulting in ∼6% AMH ancestry in Neanderthals. These results indicate that there have been multiple migration events of AMHs out of Africa and that Neanderthal and AMH gene flow has been bi-directional.

Observing that genomic regions where AMHs show a depletion of Neanderthal introgression are also regions where Neanderthal genomes show a depletion of AMH introgression points to deleterious interactions between introgressed variants and background genomes in both groups—a hallmark of incipient speciation.

Daniel N. Harris, et al., "Diverse African genomes reveal selection on ancient modern human introgressions in Neanderthals" Current Biology (October 13, 2023). DOI:https://doi.org/10.1016/j.cub.2023.09.066

[T]hey compared the modern human genomes to a genome belonging to a Neanderthal who lived approximately 120,000 years ago. For this comparison, the team developed a novel statistical method that allowed them to determine the origins of the Neanderthal-like DNA in these modern sub-Saharan populations, whether they were regions that Neanderthals inherited from modern humans or regions that modern humans inherited from Neanderthals and then brought back to Africa.

They found that all of the sub-Saharan populations contained Neanderthal-like DNA, indicating that this phenomenon is widespread. In most cases, this Neanderthal-like DNA originated from an ancient lineage of modern humans that passed their DNA on to Neanderthals when they migrated from Africa to Eurasia around 250,000 years ago. As a result of this modern human-Neanderthal interbreeding, approximately 6% of the Neanderthal genome was inherited from modern humans.

In some specific sub-Saharan populations, the researchers also found evidence of Neanderthal ancestry that was introduced to these populations when humans bearing Neanderthal genes migrated back into Africa. Neanderthal ancestry in these sub-Saharan populations ranged from 0 to 1.5%, and the highest levels were observed in the Amhara from Ethiopia and Fulani from Cameroon.

Monday, October 9, 2023

Columbus Day Considered

Christopher Columbus was a pretty horrible person, so I have no problem ceasing to honor him with a national holiday. But he was also a uniquely important historical person whom everyone should know about, and the well-attested date of his arrival, in 1492 CE, should also be something that every educated person knows.

Columbus was not the first person to make contact with the Americas after the arrival and dispersal of the predominant Founding populations of the indigenous people of South America, Mesoamerica, and most of North America, who came around 14,000 years ago from Beringia, where these populations had an extended sojourn during their migration to the Americas from Northeast Asia. They arrived with dogs, but no other domesticated animals.

There was at least one small progenitor wave of modern humans that reached at least as far as New Mexico before them, about 21,000 or more years ago (as recent research confirmed this year, building on earlier, less definitive data). But unlike the Founding population of the Americas (itself derived from a genetically homogeneous population of fewer than a thousand Beringians), they left few traces, had little, if any, demographic impact on the Founding population that came after them, and had only minimal, if any, ecological impact on the Americas.

Columbus also arrived after the later arrival of two waves of migration from Siberia, one of which brought the ancestors of the Na-Dene people during what was the Bronze Age in Europe, and the second of which brought the ancestors of the Inuits during the European Middle Ages, both of whom were mostly limited to the Arctic and sub-Arctic regions of North America, except for a Na-Dene migration to what is now the American Southwest around 1000 CE plus or minus (in part from pressure to move caused by the ancestors of the Inuit people). The Na-Dene ultimately heavily integrated themselves into the local population descended from the Founding population of the Americas. The Inuits admixed some, but much less, with the pre-existing population of the North American Arctic and largely replaced a population of "Paleo-Eskimos" who derived from the same wave of ancestors as the Na-Dene in the North American Arctic.

A few villages of Vikings settled for a generation or two in what is now Eastern Canada ca. 1000 CE before dying out or leaving (and leaving almost no genetic trace in people who continued to live in the Americas). And, the Polynesians (and perhaps others) probably made contact with the Pacific Coast of South America and Central America several times in the time frame (generously estimated) of ca. 3000 BCE to 1500 CE, again without a huge impact on the Americas or the rest of the world and with only slight amounts of admixture.

But the contact that Christopher Columbus made with the Americas persisted and profoundly changed both the Americas and the rest of the world. He returned to Europe and then came back with more Europeans who aimed to conquer the New World (including many covert Sephardic Jews seeking a more hospitable future than they had in Iberia).

Christopher Columbus's first contact led to a chain of events that resulted in the deaths of perhaps 90% of the indigenous population of the Americas (mostly due to the spread of European diseases like smallpox and E. coli, to which the people of the Americas had no immunity, at a time prior to the germ theory of disease, and only secondarily due to more concerted hostile actions taken by the European colonists towards the indigenous populations). It led to the collapse of the Aztec and Inca civilizations at the hands of Spanish Conquistadors, brought syphilis to the Old World (especially Europe), brought horses to the Americas, led to the deforestation of much of North America, and brought crops native to the Americas, including potatoes, maize, tomatoes, and hot chilis, to the Old World (both Europe and Asia). This chain of events even led, indirectly, to the Irish potato famine more than three centuries later.

Tuesday, October 3, 2023

Linguistic Terminology

One of the big issues in classification, mostly a terminology question, is where to draw the line between two ways of speaking and writing being dialects v. different languages, in part because it often has political connotations.

"Language" has two meanings: a generalized, abstract sense that comprises all human speech and writing, and the officially recognized speech and writing of a nation / country / gens, that is, a politically united group of people.

A topolect is the speech / writing of the people living in a certain place or area. It is geographically determined.

A dialect is a distinctive form / style / pronunciation / accent shared by two or more people. To qualify as the speaker of a particular dialect, one must possess a pattern of speech, a lect, that is intelligible to others who speak the same dialect. As we say in Mandarin, it's a question of whether what you speak is jiǎng dé tōng 講得通 ("mutually intelligible") or jiǎng bùtōng 講不通 ("mutually unintelligible"). If what two people are speaking is jiǎng bùtōng 講不通 ("mutually unintelligible"), then they're not speaking the same dialect.

Naturally, the dividing line between one dialect and another is not sharp. There is a blending, a gradation, a blurring between them. The same is true of languages on a larger scale. For example, I can understand close to 100% of the speech of natives of Stark County, Ohio, but maybe only 75-80% of rapid speech from Vinton County in the south.

An idiolect is spoken by only one person. . . . "Dialect" means so many radically different things, but also so many things that somewhat resemble each other, but are not really the same, that it is essentially useless for scientific purposes.

Antimatter Falls Down

This shouldn't be a surprise to anyone, but experiments at the ALPHA Experiment at CERN have now confirmed that antimatter does indeed fall down, which is to say that its mass-energy has the same gravitational "charge" as all other mass-energy, rather than "falling up" and having a repulsive gravitational reaction to ordinary mass-energy. A link to the peer reviewed paper in Nature, and its abstract, can be found here. The abstract states:

Einstein’s general theory of relativity (GR), from 1915, remains the most successful description of gravitation. From the 1919 solar eclipse to the observation of gravitational waves, the theory has passed many crucial experimental tests. However, the evolving concepts of dark matter and dark energy illustrate that there is much to be learned about the gravitating content of the universe. Singularities in the GR theory and the lack of a quantum theory of gravity suggest that our picture is incomplete. It is thus prudent to explore gravity in exotic physical systems. Antimatter was unknown to Einstein in 1915. Dirac’s theory appeared in 1928; the positron was observed in 1932. There has since been much speculation about gravity and antimatter. The theoretical consensus is that any laboratory mass must be attracted by the Earth, although some authors have considered the cosmological consequences if antimatter should be repelled by matter. In GR, the Weak Equivalence Principle (WEP) requires that all masses react identically to gravity, independent of their internal structure. Here we show that antihydrogen atoms, released from magnetic confinement in the ALPHA-g apparatus, behave in a way consistent with gravitational attraction to the Earth. Repulsive ‘antigravity’ is ruled out in this case. This experiment paves the way for precision studies of the magnitude of the gravitational acceleration between anti-atoms and the Earth to test the WEP.

The WEP has recently been tested for matter in Earth orbit with a precision of order 10⁻¹⁵. Antimatter has hitherto resisted direct, ballistic tests of the WEP due to the lack of a stable, electrically neutral, test particle. Electromagnetic forces on charged antiparticles make direct measurements in the Earth’s gravitational field extremely challenging. The gravitational force on a proton at the Earth’s surface is equivalent to that from an electric field of about 10⁻⁷ V m⁻¹. The situation with magnetic fields is even more dire: a cryogenic antiproton at 10 K would experience gravity-level forces in a magnetic field of order 10⁻¹⁰ T. Controlling stray fields to this level to unmask gravity is daunting. Experiments have, however, shown that confined, oscillating, charged antimatter particles behave as expected when considered as clocks in a gravitational field. The abilities to produce and confine antihydrogen now allow us to employ stable, neutral anti-atoms in dynamic experiments where gravity should play a role. Early considerations and a more recent proof-of-principle experiment in 2013 illustrated this potential. We describe here the initial results of a purpose-built experiment designed to observe the direction and the magnitude of the gravitational force on neutral antimatter.
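The two "equivalent field" figures in this passage can be reproduced with a short Python calculation. This sketch assumes standard gravity, CODATA values for the constants, and, for the magnetic case, an rms thermal speed √(3kT/m) at 10 K with the velocity perpendicular to the field. That is one reasonable reading of "a cryogenic antiproton at 10 K"; the paper's own assumptions may differ.

```python
import math

M_P = 1.67262192e-27        # proton (= antiproton) mass, kg
E_CHARGE = 1.602176634e-19  # elementary charge, C
K_B = 1.380649e-23          # Boltzmann constant, J/K
G_EARTH = 9.80665           # standard gravity, m/s^2

weight = M_P * G_EARTH              # gravitational force on a proton, N
e_field_equiv = weight / E_CHARGE   # electric field giving the same force (q E = m g), V/m

# Assumed typical speed: rms thermal speed sqrt(3 k T / m) at T = 10 K.
v_thermal = math.sqrt(3 * K_B * 10.0 / M_P)
b_field_equiv = weight / (E_CHARGE * v_thermal)  # field with q v B = m g, tesla

print(f"equivalent E field: {e_field_equiv:.2e} V/m")
print(f"equivalent B field: {b_field_equiv:.2e} T")
```

This gives about 1.0 × 10⁻⁷ V/m and about 2 × 10⁻¹⁰ T, consistent with the orders of magnitude quoted in the abstract.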

This Year's Nobel Prize In Physics

The 2023 Nobel Prize in physics has been awarded to a team of scientists who created a ground-breaking technique using lasers to understand the extremely rapid movements of electrons, which were previously thought impossible to follow.

Pierre Agostini, Ferenc Krausz and Anne L’Huillier “demonstrated a way to create extremely short pulses of light that can be used to measure the rapid processes in which electrons move or change energy,” the Nobel committee said when the prize was announced in Stockholm on Tuesday.