The general theory of relativity (GR) was proposed with the aim of incorporating Mach's principle mathematically. Despite early hopes, it became evident that GR did not follow Mach's principle. Over time, multiple researchers attempted to develop gravity theories aligned with Machian ideas. Although these theories successfully explained various aspects of Mach's principle, each had its own strengths and weaknesses.

In this paper, we discuss some of these theories and then try to combine them into a single framework that can fully embrace Mach's principle. This new theory, termed Machian Gravity (MG), is a metric-based theory that can be derived from an action principle, ensuring compliance with all conservation laws. The theory converges to GR at solar system scales, but at larger scales it diverges from GR and aligns with various modified gravity models proposed to explain the dark sectors of the Universe.

We have tested our theory against multiple observational data sets. It explains galactic rotation curves without requiring additional dark matter (DM). The theory also resolves the discrepancy between dynamic mass and photometric mass in galaxy clusters without resorting to DM, though it introduces two additional parameters. It can also explain the expansion history of the Universe without requiring dark components.

Newtonian gravity provides a very accurate description of gravity, provided the gravitational field is weak and not time-varying and the velocities concerned are much less than the speed of light. It accurately describes the motions of planets and satellites in the solar system. Einstein formulated GR to provide a complete geometric approach to gravity. GR is designed to reduce to Newtonian gravity in the weak-field, low-velocity limit. It explains the perihelion precession of Mercury's orbit and the bending of light by the Sun, neither of which could be accounted for using Newtonian mechanics. Over the years, numerous predictions of GR, such as the existence of black holes and gravitational waves, have been confirmed observationally. This makes GR one of the most well-accepted theories of gravity.

However, the drawbacks of GR come to light when it is applied on galactic and cosmological scales. It fails to reproduce the galactic velocity profiles if the calculations consider only the visible matter in the galaxy. This led researchers to postulate a new form of weakly interacting matter named dark matter. Earlier, it was commonly believed that dark matter (DM) is made up of particles predicted by supersymmetry. However, the lack of evidence for these particles at the Large Hadron Collider (LHC) strengthens the case for other candidates, such as axions and ultra-light scalar field dark matter.
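The mismatch with visible matter can be illustrated with a minimal Python sketch. It treats all of a galaxy's visible mass as a central point mass, which is a deliberate simplification and not the paper's model, and the value of `visible_mass` is a hypothetical round number chosen only for illustration. Under Newtonian gravity the circular speed then falls as the inverse square root of the radius, whereas observed rotation curves stay roughly flat at large radii.

```python
import math

# Gravitational constant in units of kpc * (km/s)^2 / solar mass.
G = 4.30091e-6


def keplerian_speed(mass_msun, radius_kpc):
    """Circular speed in km/s around a point mass: v = sqrt(G * M / r)."""
    return math.sqrt(G * mass_msun / radius_kpc)


# Hypothetical visible mass (solar masses); an assumption for illustration.
visible_mass = 5.0e10

# Radii in kpc at which the circular speed is evaluated.
radii = [5.0, 10.0, 20.0, 40.0]
speeds = [keplerian_speed(visible_mass, r) for r in radii]

for r, v in zip(radii, speeds):
    print(f"r = {r:5.1f} kpc   v = {v:6.1f} km/s")
```

In this toy model, quadrupling the radius halves the speed. A realistic calculation would integrate over the actual disk and bulge mass distribution, but the decline at large radii remains, and that decline is what dark matter or a modified gravity theory has to account for.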

A further puzzle is dark energy (DE), which requires a repulsive gravitational force. The cosmological constant, or Λ-term, provides an excellent solution for this. However, as observations become more precise, multiple inconsistencies come to light.

There are two possible ways to address the dark sector of the Universe.

Firstly, we can assume that there is indeed some type of matter that does not interact with standard-model particles and acts as dark matter, and some form of energy with negative pressure that provides dark-energy-like behavior.

While this can indeed be the case, the possibility that GR fails to describe the true nature of gravity on kiloparsec scales cannot be overlooked either. In that case, we need an alternative theory of gravity that replicates GR on relatively small scales while deviating from it on galactic scales.

Several theories have been proposed in the last decade to explain DM and DE. Empirical theories like Modified Newtonian Dynamics (MOND) can explain the galactic velocity profiles extremely well but violate momentum conservation. Therefore, a mathematically sound theory that mimics MOND empirically could explain dark matter. Bekenstein proposed A QUAdratic Lagrangian (AQUAL) to provide a physical grounding for MOND. Other theories, such as Modified gravity, Scalar-Tensor-Vector Gravity (STVG), Tensor-Vector-Scalar gravity (TeVeS), and Massive gravity, have also been proposed to match the galactic velocity profiles without dark matter. Higher-dimensional theories, such as induced matter theory, have been proposed as well. However, all these theories arose from the natural desire to explain the observational data and are not built on a solid logical footing.

Now, let us shift our focus to another aspect of GR. In the late 19th century, Ernst Mach hypothesized that the inertial properties of matter must depend on the distant matter of the Universe. Einstein was intrigued by Mach's principle and tried to provide a mathematical construct for it through GR. He later realized that his field equations imply that a test particle in an otherwise empty Universe has inertial properties, which contradicts Mach's argument. However, given the overwhelming success of GR in explaining different observational data, he did not make any further attempt to explain Mach's principle.

In view of this, it is worthwhile searching for a theory that implies that matter has inertia only in the presence of other matter. Several theories that abide by Mach's principle have been postulated in the last century. Among these, the most prominent are Sciama's vector potential theory, the Brans-Dicke (BD) theory or scalar-tensor theory of gravity, and the Hoyle-Narlikar theory. Although each of these theories addresses certain aspects of Mach's principle, as discussed in the respective articles, none offers a complete explanation. Thus, only a unified theory that combines these approaches could provide a comprehensive understanding of Mach's principle in its entirety.

In this article, we address all the issues described above and propose a theory of gravity based on Mach's principle. It is based on the following premises.

• Action principle: The theory should be derived from an action principle to guarantee that it does not violate conservation laws.

• Equivalence principle: Various research groups have tested the Weak Equivalence Principle (WEP) to exquisite precision. Therefore, any theory must follow the weak equivalence principle. However, the strong equivalence principle has not been tested on a large scale. If the ratio of the inertial mass to the gravitational mass changes over space-time (on a galactic or cosmological scale), that does not contradict the results of our local measurements. In accordance with Mach's principle, the inertial properties of matter come from all the distant matter of the Universe. As the matter distribution differs in different parts of the Universe, the theory may not follow the strong equivalence principle.

• Departure from GR: As GR gives excellent results at the solar system scale, the proposed theory should follow GR on that scale and deviate from it only at the galactic scale, where it should mimic some of the modified gravity theories proposed to explain the dark sectors of the Universe. In addition, the proposed theory should be able to replicate the behavior of theories like Sciama's theory or BD theory under the specific circumstances for which they were proposed.

The paper is organized as follows.

In the second section, we briefly discuss previous developments in gravity theory aimed at explaining Mach's principle. Most of the points covered in this section are generally known positions from previous research. However, since Mach's principle is not a mainstream area of study, this discussion is necessary for understanding the new insights presented in this article. In some cases, we interpret these established ideas from the perspective of this paper, which helps us build the gravity theory in the later sections.

In the next section, we explain Mach’s principle and discuss the mathematical tools used to formulate the theory. We present the source-free field equations for the theory in the same section.

The static, spherically symmetric solution for the theory in the weak-field approximation is presented in the fourth section. We show that the solution follows Newtonian gravity and GR at smaller scales but deviates from them at large scales.

Section five presents examples of galactic rotation curves and galaxy cluster mass distributions, demonstrating that the theory yields results in close agreement with observations.

The source term of the theory is described in the sixth section.

In the next section, we provide the cosmological solutions to the MG model.

The final section is the conclusion and discussion section. We have also added five appendices where we describe the nitty-gritty of the calculations and add multiple illustrations.

This theory is formulated in a 4+1 dimensional space-time. It states that "The momentum in the fifth dimension represents the inertial mass of the particle, which remains constant in any local region." The paper could be clearer about which two additional parameters are added to the theory, but they appear to be related to a vector field and a scalar field, respectively:

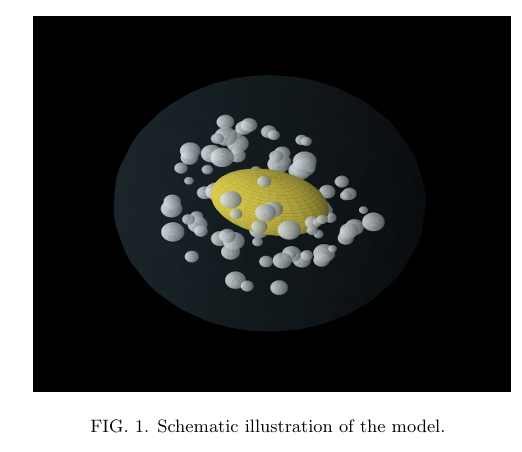

The proposed framework is built upon a five-dimensional metric involving three essential elements: a scalar field ϕ, a vector field Aµ, and an extra dimension x4. (Note that these two fields behave as a scalar field and a vector field only if the metric is independent of x4.)
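As a point of reference only, the standard Kaluza-Klein parametrization packages the same three ingredients into a five-dimensional metric as sketched below. This is the textbook form and is not taken from the paper; the paper's own metric may differ in signs, normalization, and coupling constants, and the tilde notation and index ranges used here are assumptions.

$$
\tilde{g}_{AB} =
\begin{pmatrix}
g_{\mu\nu} + \phi^{2} A_{\mu} A_{\nu} & \phi^{2} A_{\mu} \\
\phi^{2} A_{\nu} & \phi^{2}
\end{pmatrix},
\qquad
\frac{\partial \tilde{g}_{AB}}{\partial x^{4}} = 0,
\qquad A, B = 0, \dots, 4, \quad \mu, \nu = 0, \dots, 3 .
$$

The second relation is the so-called cylinder condition. When it holds, the off-diagonal block transforms as a four-vector and the corner element as a scalar under four-dimensional coordinate changes, which is consistent with the remark above that the two fields behave as a vector and a scalar only if the metric is independent of the extra coordinate.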

Side commentary on dark matter particle theories