We present a measurement of the τ-lepton mass using a sample of about 175 million e+e−→τ+τ− events collected with the Belle II detector at the SuperKEKB e+e− collider at a center-of-mass energy of 10.579 GeV. This sample corresponds to an integrated luminosity of 190 fb^−1. We use the kinematic edge of the τ pseudomass distribution in the decay τ−→π−π+π−ντ and measure the τ mass to be 1777.09±0.08±0.11 MeV/c^2, where the first uncertainty is statistical and the second systematic. This result is the most precise to date.
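As a rough illustration of what the two quoted uncertainties imply together, the sketch below combines them in quadrature. Treating the statistical and systematic uncertainties as independent is an assumption made here, and the variable names are purely illustrative.

```python
import math

# Values quoted in the abstract, in MeV/c^2.
tau_mass = 1777.09
stat_unc = 0.08
syst_unc = 0.11

# Assumption: the two uncertainties are independent, so they add in quadrature.
total_unc = math.hypot(stat_unc, syst_unc)
relative_unc = total_unc / tau_mass

print(f"total uncertainty: {total_unc:.3f} MeV/c^2")
print(f"relative precision: about one part in {1 / relative_unc:,.0f}")
```

Under that assumption the combined uncertainty is a little under 0.14 MeV/c^2.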

Wednesday, May 31, 2023

Belle II Makes A New Tau Lepton Mass Measurement

Tuesday, May 30, 2023

Evidence Of Another Predicted Higgs Boson Decay Channel

[C]ollaborative effort [by the ATLAS and CMS experiments at the Large Hadron Collider (LHC) has] resulted in the first evidence of the Higgs boson decay into a Z boson and a photon. The result has a statistical significance of 3.4 standard deviations, which is below the conventional requirement of 5 standard deviations to claim an observation. The measured signal rate is 1.9 standard deviations above the Standard Model prediction.

From here.

Thus, there is strong, but not conclusive, evidence of this predicted decay channel of the Higgs boson, at a strength consistent with the Standard Model prediction.
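To put the 3.4 and 5 standard deviation figures on a common footing, here is a minimal sketch that converts a significance into a one-sided Gaussian tail probability. The one-sided convention and the function name are assumptions made for illustration; the quoted press summary does not say which convention was used.

```python
import math

def one_sided_p_value(n_sigma: float) -> float:
    """Upper-tail probability of a standard normal distribution beyond n_sigma."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))

for label, n_sigma in [("Z-photon evidence", 3.4), ("observation threshold", 5.0)]:
    p = one_sided_p_value(n_sigma)
    print(f"{label}: {n_sigma} sigma -> p = {p:.2e}")
```

The gap between the two tail probabilities is about three orders of magnitude, which is why 3.4 sigma counts as evidence rather than observation.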

This updates results most recently discussed here. That post noted, in pertinent part:

What do we know about how strong the fit of what we observe to the SM Higgs is?

Scientists haven't established beyond all doubt that the Higgs boson we have seen is the SM Higgs boson yet, but every few months since it has been discovered the constraints on differences from the SM Higgs have gotten smaller and more restricted. The data has also ruled out many hypotheses for additional Higgs bosons.

There is basically no data that is contrary to the predictions of the SM Higgs hypothesis made about 50 years ago (subject to determining its mass), and for a given Higgs boson mass the properties of the SM Higgs boson are completely predetermined with no wiggle room at all down to parts per ten million or better.

The global average value for the mass of the Higgs boson is currently 125.25±0.17 GeV, a relative accuracy of about 1.4 parts per thousand.

There is also basically no data strongly suggesting one or more additional BSM Higgs bosons (although there is a bit of an anomaly at 96 GeV), even though BSM Higgs bosons aren't directly ruled out yet above the hundreds of GeVs. BSM Higgs bosons are also allowed in pockets of allowed parameter spaces at lower masses if the properties of the hypothetical particles are just right. For example, new Higgs bosons with a charge of ±2 are ruled out at masses up to about 900 GeV, and so are many other heavy Higgs boson hypotheses. Indirect constraints also greatly limit the parameter space of BSM Higgs bosons unless they have precisely the right properties (which turn out to be not intuitively plausible or well-motivated theoretically).

The data strongly favor the characterization of the observed Higgs boson as a spin-0 particle, just like the SM Higgs boson, and strongly disfavor any other value of spin for it.

The data is fully consistent at the 0.6 sigma level with an even parity SM Higgs boson, see here, while the pure CP-odd Higgs boson hypothesis is disfavored at a level of 3.4 standard deviations. In other words, the likelihood that the Higgs boson is not pure CP-odd is about 99.9663%.

A mix of a CP-odd Higgs boson and a CP-even Higgs boson of the same mass is (of course) harder to rule out as strongly, particularly if the mix is not equal somehow and the actual mix is more CP-even than CP-odd. There isn't a lot of precedent for those kinds of uneven mixings, however, in hadron physics (i.e., the physics of composite QCD bound particles), for example.

Eight of the nine Higgs boson decay channels theoretically predicted to be most common in a SM Higgs of about 125 GeV have been detected. Those channels, ranked by branching fraction, are:

- b-quark pairs, 57.7% (observed)
- W boson pairs, 21.5% (observed)
- gluon pairs, 8.57%
- tau-lepton pairs, 6.27% (observed)
- c-quark pairs, 2.89% (observed May 2022)
- Z boson pairs, 2.62% (observed)
- photon pairs, 0.227% (observed)
- Z boson and a photon, 0.153% (observed April 2022)
- muon pairs, 0.0218% (observed)
- electron-positron pairs, 0.0000005%

All predicted Higgs boson decay channels, except gluon pairs, with a branching fraction of one part per 5000 or more have been detected.

Decays to gluon pairs are much harder to discern because the hadrons they form as they "decay" are hard to distinguish from other background processes that give rise to similar hadrons to those from gluon pairs at high frequencies. Even figuring out what the gluon pair decays should look like theoretically due to QCD physics, so that the observations from colliders can be compared to this prediction, is very challenging.

The total adds to 99.9518005% rather than to 100% due to rounding errors, and due to omitted low probability decays including strange quark pairs (a bit less likely than muon pairs), down quark pairs (slightly more likely than electron-positron pairs), up quark pairs (slightly more likely than electron-positron pairs), and asymmetric boson pairs other than Z-photon decays (also more rare than muon pairs).

The Higgs boson doesn't decay to top quarks, but the measured top quark coupling is within 10% of the SM predicted value in a measurement with an 18% uncertainty at one sigma in one kind of measurement, and within 1.5 sigma of the predicted value using another less precise kind of measurement.

The Particle Data Group summarizes the strength of some of the measured Higgs boson couplings relative to the predicted values for the measured Higgs boson mass, and each of these channels is a reasonably good fit relative to the measured uncertainty in its branching fraction.

- Combined Final States = 1.13±0.06
- W W* = 1.19±0.12
- Z Z* = 1.01±0.07
- γγ = 1.10±0.07
- bb = 0.98±0.12
- μ+μ− = 1.19±0.34
- τ+τ− = 1.15 +0.16/−0.15
- ttH0 production = 1.10±0.18
- tH0 production = 6±4

The PDG data cited above predates the cc decay and Zγ channel discovery made this past spring, so I've omitted those from the list above in favor of the data from the papers discovering the new channels.

One of these papers shows that the branching fraction in the Zγ channel relative to the SM expectation is μ=2.4±0.9. The ratio of branching fractions B(H→Zγ)/B(H→γγ) is measured to be 1.5 +0.7/−0.6, which agrees with the standard model prediction of 0.69±0.04 at the 1.5 standard deviation level.

The branching fraction of the cc channel isn't very precisely known yet, but isn't more than 14 times the SM prediction at the 95% confidence level.

The Higgs boson self-coupling is observationally constrained to be not more than about ten times stronger than the SM expected value, although it could be weaker than the SM predicted value. But the crude observations of its self-coupling are entirely consistent with the SM expected value so far. This isn't a very tight constraint, but it does rule out wild deviations from the SM paradigm.

The width of the Higgs boson (equivalently, its mean lifetime) is consistent to the best possible measurements with the theoretical SM prediction for the measured mass. The full Higgs boson width Γ is 3.2 +2.8/−2.2 MeV, assuming equal on-shell and off-shell effective couplings (which is a quite weak assumption). The predicted value for a 125 GeV Higgs boson is about 4 MeV.

There are really no well motivated hypotheses for a Higgs boson with properties different from the SM Higgs boson that could fit the observations to date this well.

For a particle that has only been confirmed to exist for ten and a half years, that's a pretty good set of fits. And, the constraints on deviations from the SM Higgs boson's properties have grown at least a little tighter every year since its discovery announced on July 4, 2012.
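The bookkeeping behind the branching fractions quoted above can be checked with a short sketch. The channel names, values and "observed" flags are copied from the post; the data layout and variable names are illustrative choices, and `Decimal` is used only so the sum is exact rather than subject to floating point rounding.

```python
from decimal import Decimal

# (channel, branching fraction in percent, flagged as observed in the post)
channels = [
    ("b-quark pairs", Decimal("57.7"), True),
    ("W boson pairs", Decimal("21.5"), True),
    ("gluon pairs", Decimal("8.57"), False),
    ("tau-lepton pairs", Decimal("6.27"), True),
    ("c-quark pairs", Decimal("2.89"), True),
    ("Z boson pairs", Decimal("2.62"), True),
    ("photon pairs", Decimal("0.227"), True),
    ("Z boson and a photon", Decimal("0.153"), True),
    ("muon pairs", Decimal("0.0218"), True),
    ("electron-positron pairs", Decimal("0.0000005"), False),
]

# Sum of the listed branching fractions and the remainder left for omitted decays.
total = sum(fraction for _, fraction, _ in channels)
remainder = Decimal(100) - total

# "One part per 5000" expressed in percent.
threshold = Decimal(100) / Decimal(5000)
undetected_above_threshold = [
    name for name, fraction, observed in channels
    if fraction >= threshold and not observed
]

print(f"listed total: {total}%  (remainder: {remainder}%)")
print("not yet detected above one part per 5000:", undetected_above_threshold)
```

The only listed channel above the one-in-5000 threshold without an "observed" flag is gluon pairs, matching the statement in the text.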

Wednesday, May 24, 2023

Upper Paleolithic South African Lakes

[S]everal large bodies of water were sustained in the now arid South African interior during the last Ice Age, particularly 50,000-40,000 years ago, and again 31,000 years ago.

Significance

Renowned for its rich evidence pertaining to early Homo sapiens, South Africa’s late Pleistocene archaeological record remains biased toward the continental margins. A profusion of coastal rockshelters, together with putative associations of glacial periods with intense aridity in the continental interior, has created historical impressions of an inland core hostile to human life during lengthy glacial phases.

New geological data and large-scale hydrological modeling experiments reveal that a series of large, now-dry palaeolakes existed for protracted phases of marine isotope stages 3 and 2. Modern analog reconstructions of resulting vegetational and faunal communities suggest that glacial climates were capable of transforming South Africa’s central interior into a resource-rich landscape favorable to human populations.

Abstract

Determining the timing and drivers of Pleistocene hydrological change in the interior of South Africa is critical for testing hypotheses regarding the presence, dynamics, and resilience of human populations.

Combining geological data and physically based distributed hydrological modeling, we demonstrate the presence of large paleolakes in South Africa’s central interior during the last glacial period, and infer a regional-scale invigoration of hydrological networks, particularly during marine isotope stages 3 and 2, most notably 55 to 39 ka and 34 to 31 ka.

The resulting hydrological reconstructions further permit investigation of regional floral and fauna responses using a modern analog approach. These suggest that the climate change required to sustain these water bodies would have replaced xeric shrubland with more productive, eutrophic grassland or higher grass-cover vegetation, capable of supporting a substantial increase in ungulate diversity and biomass.

The existence of such resource-rich landscapes for protracted phases within the last glacial period likely exerted a recurrent draw on human societies, evidenced by extensive pan-side artifact assemblages. Thus, rather than representing a perennially uninhabited hinterland, the central interior’s underrepresentation in late Pleistocene archeological narratives likely reflects taphonomic biases stemming from a dearth of rockshelters and regional geomorphic controls.

These findings suggest that South Africa’s central interior experienced greater climatic, ecological, and cultural dynamism than previously appreciated and potential to host human populations whose archaeological signatures deserve systematic investigation.

The following illustration from the supplementary materials shows the status quo:

Monday, May 22, 2023

The Long Term Impacts Of Islamic Occupation In Iberia

We use a unique dataset on Muslim domination between 711-1492 and literacy in 1860 for about 7500 municipalities to study the long-run impact of Islam on human-capital in historical Spain.

Reduced-form estimates show a large and robust negative relationship between length of Muslim rule and literacy.

We argue that, contrary to local arrangements set up by Christians, Islamic institutions discouraged the rise of the merchant class, blocking local forms of self-government and thereby persistently hindering demand for education. Indeed, results show that a longer Muslim domination in Spain is negatively related to the share of merchants, whereas neither later episodes of trade nor differences in jurisdictions and different stages of the Reconquista affect our main results. Consistent with our interpretation, panel estimates show that cities under Muslim rule missed-out on the critical juncture to establish self-government institutions.

Wednesday, May 17, 2023

Two Subspecies of Ancestral African Humans Merged To Form Modern Humans

A new paper in Nature argues that genetic signals previously interpreted as signs of archaic admixture in modern humans in Africa actually reflect a process in which modern humans arose from two distinct gene pools in Africa, sub-species of ancestral modern humans that had a low but non-zero level of interbreeding between them for hundreds of thousands of years.

There is a description of the paper's findings aimed at educated laymen at the New York Times (paywalled), which is somewhat better than average for NYT science coverage.

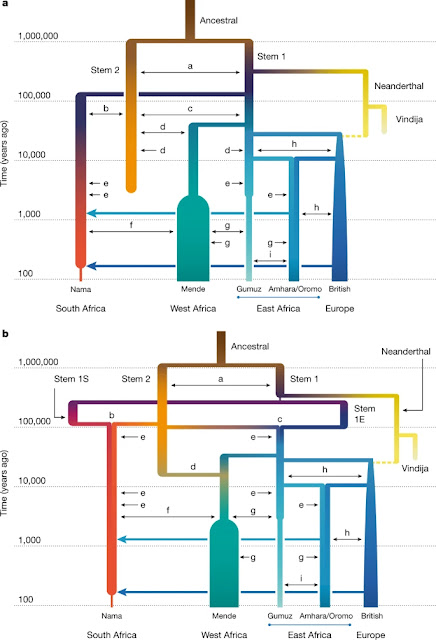

a,b, In the two best-fitting parameterizations of early population structure, continuous migration (a) and multiple mergers (b), models that include ongoing migration between stem populations outperform those in which stem populations are isolated. Most of the recent populations are also connected by continuous, reciprocal migration that is indicated by double-headed arrows. These migrations last for the duration of the coexistence of contemporaneous populations with constant migration rates over those intervals. The merger-with-stem-migration model (b, with LL = −101,600) outperformed the continuous-migration model (a, with LL = −115,300). Colours are used to distinguish overlapping branches. The letters a–i represent continuous migration between pairs of populations.

Despite broad agreement that Homo sapiens originated in Africa, considerable uncertainty surrounds specific models of divergence and migration across the continent. Progress is hampered by a shortage of fossil and genomic data, as well as variability in previous estimates of divergence times.

Here we seek to discriminate among such models by considering linkage disequilibrium and diversity-based statistics, optimized for rapid, complex demographic inference. We infer detailed demographic models for populations across Africa, including eastern and western representatives, and newly sequenced whole genomes from 44 Nama (Khoe-San) individuals from southern Africa.

We infer a reticulated African population history in which present-day population structure dates back to Marine Isotope Stage 5. The earliest population divergence among contemporary populations occurred 120,000 to 135,000 years ago and was preceded by links between two or more weakly differentiated ancestral Homo populations connected by gene flow over hundreds of thousands of years. Such weakly structured stem models explain patterns of polymorphism that had previously been attributed to contributions from archaic hominins in Africa.

In contrast to models with archaic introgression, we predict that fossil remains from coexisting ancestral populations should be genetically and morphologically similar, and that only an inferred 1–4% of genetic differentiation among contemporary human populations can be attributed to genetic drift between stem populations.

We show that model misspecification explains the variation in previous estimates of divergence times, and argue that studying a range of models is key to making robust inferences about deep history.

Our inferred models paint a consistent picture of the Middle to Late Pleistocene as a critical period of change, assuming that estimates from the recombination clock accurately relate to geological chronologies.

During the late Middle Pleistocene, the multiple-merger model indicates three major stem lineages in Africa, tentatively assigned to southern (stem 1S), eastern (stem 1E) and western/central Africa (stem 2). Geographical association was informed by the present population location with the greatest ancestry contribution from each stem. For example, stem 1S contributes 70% to the ancestral formation of the Khoe-San. The extent of the isolation 400 ka between stem 1S, stem 1E and stem 2 suggests that these stems were not proximate to each other.

Although the length of isolation among the stems is variable across fits, models with a period of divergence, isolation and then a merger event (that is, a reticulation) out-performed models with bifurcating divergence and continuous gene flow.

A population reticulation involves multiple stems that contribute genetically to the formation of a group. One way in which this can happen is through the geographical expansion of one or both stems. For example, if, during MIS 5, either stem 1S from southern Africa moved northwards and thus encountered stem 2, or stem 2 moved from central–western Africa southwards into stem 1S, then we could observe disproportionate ancestry contributions from different stems in contemporary groups.

We observed two merger events. The first, between stem 1S and stem 2, resulted in the formation of an ancestral Khoe-San population around 120 ka. The second event, between stem 1E and stem 2 about 100 ka, resulted in the formation of the ancestors of eastern and western Africans, including the ancestors of people outside Africa.

Reticulated models do not have a unique and well-defined basal human population divergence. We suggest conceptualizing the events at 120 ka as the time of most recent shared ancestry among sampled populations. However, interpreting population divergence times in population genetics is always difficult, owing to the co-estimation of divergence time and subsequent migration; methods assuming clean and reticulated splits can infer different split dates. Therefore, in the literature, wide variation exists in estimates of divergence time.

Shifts in wet and dry conditions across the African continent between 140 ka and 100 ka may have promoted these merger events between divergent stems. Precipitation does not neatly track interglacial cycles in Africa, and heterogeneity across regions may mean that the beginning of an arid period in eastern Africa is conversely the start of a wet period in southern Africa. The rapid rise in sea levels during the MIS 5e interglacial might have triggered migration inland away from the coasts, as has been suggested, for example, for the palaeo-Agulhas plain.

After these merger events, the stems subsequently fractured into subpopulations which persisted over the past 120 ka. These subpopulations can be linked to contemporary groups despite subsequent gene flow across the continent. For example, a genetic lineage sampled in the Gumuz has a probability of 0.7 of being inherited from the ancestral eastern subpopulation 55 ka, compared with a probability of 0.06 of being inherited from the southern subpopulation.

We also find that stem 2 continued to contribute to western Africans during the Last Glacial Maximum (26 ka to 20 ka), indicating that this gene flow probably occurred in western and/or central Africa. Such an interpretation is reinforced by differential migration rates between regions; that is, the gene flow from stem 2 to western Africans is estimated to be five times that of the rate to eastern Africans during this period.

Biodiversity By Organism Size And Aggregate Biomass On Earth

Life on Earth is disproportionately made up of tiny organisms and large organisms, as measured by the aggregate biomass of organisms of each size.

A. Median carbon biomass (log scale) per log size as a function of body size with 95% confidence bounds (black dotted curves) cumulated across biological groups from 1000 bootstraps over within-group biomass and body size error distributions. Groups were organized from the least massive at the bottom to the most massive at the top for visibility on the log scale (ordered from top left to bottom right in color legend for group identity). Group biomasses are stacked so each group’s biomass is represented by its upper y-axis location minus its lower y-axis location (not by the upper y-axis location alone).

B. Median biomass in linear biomass scale. Confidence bounds are not shown here because they are so large as to obscure the median patterns on the linear scale.

Recent research has revealed the diversity and biomass of life across ecosystems, but how that biomass is distributed across body sizes of all living things remains unclear. We compile the present-day global body size-biomass spectra for the terrestrial, marine, and subterranean realms. To achieve this compilation, we pair existing and updated biomass estimates with previously uncatalogued body size ranges across all free-living biological groups. These data show that many biological groups share similar ranges of body sizes, and no single group dominates size ranges where cumulative biomass is highest. We then propagate biomass and size uncertainties and provide statistical descriptions of body size-biomass spectra across and within major habitat realms.

Power laws show exponentially decreasing abundance (exponent -0.9±0.02 S.D., R^2 = 0.97) and nearly equal biomass (exponent 0.09±0.01, R^2 = 0.56) across log size bins, which resemble previous aquatic size spectra results but with greater organismal inclusivity and global coverage. In contrast, a bimodal Gaussian mixture model describes the biomass pattern better (R^2 = 0.86) and suggests small (~10^−15 g) and large (~10^7 g) organisms outweigh other sizes by one order of magnitude (15 and 65 Gt versus ~1 Gt per log size). The results suggest that the global body size-biomass relationship is bimodal, but substantial one-to-two orders-of-magnitude uncertainties mean that additional data will be needed to clarify whether global-scale universal constraints or local forces shape these patterns.

Tuesday, May 16, 2023

Were There Recent Waves Of Brain Evolution In Humans?

John Hawks looks at a recent study of recent genetic mutations in humans linked to their surmised functions.

In theory, if human evolution made key breakthroughs at times that archaeology associates with the inferred appearance of new genes whose functions are relevant to those developments, we could tell a story of genetic mutations leading to the dramatic success of our species. But, due to methodological issues and the weakness of the evidence, he comes down solidly in the agnostic camp at this point about this kind of narrative.

Arecibo 2.0

The Next Generation Arecibo Telescope (NGAT) was a concept presented in a white paper Roshi et al. (2021) developed by members of the Arecibo staff and user community immediately after the collapse of the 305 m legacy telescope.

A phased array of small parabolic antennas placed on a tiltable plate-like structure forms the basis of the NGAT concept. The phased array would function both as a transmitter and as a receiver. This envisioned state of the art instrument would offer capabilities for three research fields, viz. radio astronomy, planetary and space & atmospheric sciences. The proposed structure could be a single plate or a set of closely spaced segments, and in either case it would have an equivalent collecting area of a parabolic dish of size 300 m. In this study we investigate the feasibility of realizing the structure.

Our analysis shows that, although a single structure ~300 m in size is achievable, a scientifically competitive instrument 130 to 175 m in size can be developed in a more cost effective manner. We then present an antenna configuration consisting of one hundred and two 13 m diameter dishes. The diameter of an equivalent collecting area single dish would be ~130 m, and the size of the structure would be ~146 m. The weight of the structure is estimated to be 4300 tons which would be 53% of the weight of the Green Bank Telescope.
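The ~130 m equivalent diameter can be checked from the dish count and dish size quoted above. This is a minimal geometric sketch that simply equates total collecting area; it ignores aperture efficiency, blockage and array effects, and the variable names are illustrative.

```python
import math

# Figures quoted in the abstract.
n_dishes = 102
dish_diameter_m = 13.0
structure_weight_tons = 4300.0
fraction_of_gbt_weight = 0.53

# Total geometric collecting area of the array, then the diameter of a
# single circular dish with the same area.
total_area_m2 = n_dishes * math.pi * (dish_diameter_m / 2.0) ** 2
equivalent_diameter_m = 2.0 * math.sqrt(total_area_m2 / math.pi)

# Green Bank Telescope weight implied by the quoted 53% figure.
implied_gbt_weight_tons = structure_weight_tons / fraction_of_gbt_weight

print(f"equivalent single-dish diameter: {equivalent_diameter_m:.1f} m")
print(f"implied GBT weight: {implied_gbt_weight_tons:.0f} tons")
```

The result reduces to the dish diameter times the square root of the number of dishes, which lands close to the quoted ~130 m.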

We refer to this configuration as NGAT-130. We present the performance of the NGAT-130 and show that it surpasses all other radar and single dish facilities. Finally, we briefly discuss its competitiveness for radio astronomy, planetary and space & atmospheric science applications.

Wednesday, May 10, 2023

Is Dark Energy Decreasing?

The potential energy from a time-dependent scalar field provides a possible explanation for the observed cosmic acceleration. In this paper, we investigate how the redshift vs brightness data from the recent Pantheon+ survey of type Ia supernovae constrain the possible evolution of a single scalar field for the period of time (roughly half the age of the universe) over which supernova data are available.

Taking a linear approximation to the potential, we find that models providing a good fit to the data typically have a decreasing potential energy at present (accounting for over 99% of the allowed parameter space) with a significant variation in scalar potential (⟨Range(V)/V(0)⟩≈0.97) over the period of time corresponding to the available data (z<2.3). Including quadratic terms in the potential, the data can be fit well for a wide range of possible potentials including those with positive or negative V2 of large magnitude, and models where the universe has already stopped accelerating.

We describe a few degeneracies and approximate degeneracies in the model that help explain the somewhat surprising range of allowed potentials.

One main conclusion is that there is a lot of room for such models, and models that are consistent with observations are not necessarily close to ΛCDM. Within the space of models we consider, we have found that in models providing a good fit to the data, the scalar potential typically changes by an order one amount compared to its present value during the time scale corresponding to the supernova data, roughly half the age of the universe. In the context of models with a linear potential, a large majority of the models (> 99%) in the distribution defined by the exp(−χ^2/2) likelihood have a potential that is presently decreasing with time.

This strong preference for decreasing dark energy is somewhat surprising; one might have expected that if ΛCDM is actually the correct model, extending the parameter space to the linear potential models would yield a distribution with a similar fraction of models with V1 < 0 and V1 > 0. The dominance of V1 > 0 models in our distribution could thus be a hint that the correct model is not ΛCDM but one with a decreasing dark energy.

For models with a quadratic potential, we find a variety of qualitatively different possibilities, including models where the scalar field is now descending a downward-pointing parabola and models where the scalar is oscillating in an upward-facing parabola. Again, the best fit model has a decreasing dark energy at late times.

Tuesday, May 9, 2023

A Nice Example Of A Successful SM Prediction

Evidence That Wide Binary Stars Are MOND-Like

A gravitational anomaly is found at weak gravitational acceleration g(N)<10^−9 m s^−2 from analyses of the dynamics of wide binary stars selected from the Gaia EDR3 database that have accurate distances, proper motions, and reliably inferred stellar masses.

Implicit high-order multiplicities are required and the multiplicity fraction is calibrated so that binary internal motions agree statistically with Newtonian dynamics at a high enough acceleration of 10^−8 m s^−2. The observed sky-projected motions and separation are deprojected to the three-dimensional relative velocity v and separation r through a Monte Carlo method, and a statistical relation between the Newtonian acceleration g(N)≡GM/r^2 (where M is the total mass of the binary system) and a kinematic acceleration g≡v^2/r is compared with the corresponding relation predicted by Newtonian dynamics.

The empirical acceleration relation at <10^−9 m s^−2 systematically deviates from the Newtonian expectation. A gravitational anomaly parameter δ(obs−newt) between the observed acceleration at g(N) and the Newtonian prediction is measured to be: δ(obs−newt)=0.034±0.007 and 0.109±0.013 at g(N)≈10^−8.91 and 10^−10.15 m s^−2, from the main sample of 26,615 wide binaries within 200 pc. These two deviations in the same direction represent a 10σ significance.

The deviation represents a direct evidence for the breakdown of standard gravity at weak acceleration. At g(N)=10^−10.15 m s^−2, the observed to Newton predicted acceleration ratio is g(obs)/g(pred)=10^(√2 δ(obs−newt))=1.43±0.06. This systematic deviation agrees with the boost factor that the AQUAL theory predicts for kinematic accelerations in circular orbits under the Galactic external field.
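The quoted acceleration ratio can be reproduced numerically if the exponent is read as √2 times δ(obs−newt). That reading is an assumption made here, chosen because it returns the quoted 1.43±0.06. The quadrature combination of the two deviations is likewise only a naive cross-check, not necessarily how the authors arrived at their stated significance.

```python
import math

# Anomaly parameters and uncertainties quoted in the abstract.
delta_high_g, err_high_g = 0.034, 0.007   # at g(N) ~ 10^-8.91 m s^-2
delta_low_g, err_low_g = 0.109, 0.013     # at g(N) ~ 10^-10.15 m s^-2

# Assumed relation: ratio = 10 ** (sqrt(2) * delta).
ratio = 10.0 ** (math.sqrt(2.0) * delta_low_g)

# First-order error propagation: d(ratio)/d(delta) = ratio * ln(10) * sqrt(2).
ratio_err = ratio * math.log(10.0) * math.sqrt(2.0) * err_low_g

# Naive combination of the two deviations, assuming they are independent.
sig_high_g = delta_high_g / err_high_g
sig_low_g = delta_low_g / err_low_g
combined_sigma = math.hypot(sig_high_g, sig_low_g)

print(f"g(obs)/g(pred) = {ratio:.2f} +/- {ratio_err:.2f}")
print(f"individual: {sig_high_g:.1f} and {sig_low_g:.1f} sigma; combined: {combined_sigma:.1f} sigma")
```

Under these assumptions the combined figure comes out a little under 10 sigma, in line with the rounded value in the abstract.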

Thursday, May 4, 2023

Measuring CKM Matrix Parameters In A Novel Way

During the last three decades the determination of the Unitarity Triangle (UT) was dominated by the measurements of its sides R(b) and R(t) through tree-level B decays and the ΔM(d)/ΔM(s) ratio, respectively, with some participation of the measurements of the angle β through the mixing induced CP-asymmetries like S(ψK(S)) and ε(K).

However, as pointed out already in 2002 by Fabrizio Parodi, Achille Stocchi and the present author, the most efficient strategy for a precise determination of the apex of the UT, that is (ϱ¯,η¯), is to use the measurements of the angles β and γ. The second best strategy would be the measurements of R(b) and γ. However, in view of the tensions between different determinations of |V(ub)| and |V(cb)|, that enter R(b), the (β,γ) strategy should be a clear winner once LHCb and Belle II will improve the measurements of these two angles.

In this note we recall our finding of 2002 which should be finally realized in this decade through precise measurements of both angles by these collaborations. In this context we present two very simple formulae for ϱ¯ and η¯ in terms of β and γ which could be derived by high-school students, but to my knowledge never appeared in the literature on the UT, not even in our 2002 paper.
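The abstract does not reproduce the two formulae, so the sketch below is a reconstruction from elementary triangle geometry rather than a quotation of the paper. It assumes the usual convention that the Unitarity Triangle has vertices at (0,0) and (1,0), with γ the angle at the origin and β the angle at (1,0). The law of sines then gives R(b) = sin β / sin(β+γ), and the apex follows by projecting R(b) onto the axes. The input angles are illustrative placeholders, not measurements taken from this post.

```python
import math

def ut_apex(beta_deg: float, gamma_deg: float) -> tuple[float, float]:
    """Apex (rho_bar, eta_bar) of a triangle with base from (0,0) to (1,0),
    angle gamma at the origin and angle beta at (1,0)."""
    beta = math.radians(beta_deg)
    gamma = math.radians(gamma_deg)
    # Law of sines: side from the origin to the apex, for a base of unit length.
    r_b = math.sin(beta) / math.sin(beta + gamma)
    return r_b * math.cos(gamma), r_b * math.sin(gamma)

# Hypothetical inputs, roughly the size of current determinations.
rho_bar, eta_bar = ut_apex(beta_deg=22.2, gamma_deg=65.0)
print(f"rho_bar = {rho_bar:.3f}, eta_bar = {eta_bar:.3f}")
```

A simple consistency check is that the angle recovered at (1,0) from the computed apex equals the input β.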

We also emphasize the importance of precise measurements of both angles that would allow to perform powerful tests of the SM through numerous |V(cb)|-independent correlations between K and B decay branching ratios R(i)(β,γ) recently derived by Elena Venturini and the present author. The simple findings presented here will appear in a subsection of a much longer contribution to the proceedings of KM50 later this year. I exhibited them here so that they are not lost in the latter.

DM Annihilation Signals Greatly Constrained

The main result of the paper is that only DM annihilating into μ+μ− with a mass around 500 GeV and ⟨σv⟩=4×10^−24 cm^3/s can fit AMS-02 data and be compatible with the upper limits found with γ rays.

As for the τ+τ− (bb¯) channel, DM can contribute at most at a few tens % (a few %) level.

A Nightmare Scenario Physics Agenda

The "nightmare scenario" in high energy physics is one in which no beyond the Standard Model high energy fundamental physics is discovered, at least in a "desert" of energy scales from the TeV energy scale that can be reached at the Large Hadron Collider (LHC) up to somewhere close to the Grand Unification Theory (GUT) scale of 10^13 TeV.

There is still work to be done for high energy physicists if you assume that this scenario is correct.

Pin down the experimentally measured parameters of the Standard Model

The Standard Model contains 15 particle masses, 4 CKM matrix parameters (in this nine element matrix) which govern the probability of a quark transforming into another quark via a W boson mediated interaction, 4 PMNS matrix parameters (in this nine element matrix) which governs the probability of a neutrino of one flavor oscillating into another flavor of neutrino, and 3 coupling constants for the three Standard Model forces (the strong force, the weak force, and the electromagnetic force). These 26 Standard Model parameters aren't entirely independent of each other due to electroweak unification theory relationships between the W boson mass, Z boson mass, the Higgs boson mass, weak force coupling constant, electromagnetic force coupling constant. So there are really only 24 or 25 independent degrees of freedom in the Standard Model's physical constants. In addition, the speed of light and Planck's constant, both of which are known to parts per billion precision, are experimentally determined values (the speed of light is now defined as an exact value but that defined value started with experimentally determined values), which are necessary to do Standard Model physics calculations.

These parameters are known to varying degrees of precision, ranging from the up quark mass, which is known only to ± 7% relative precision, to the electron mass and electromagnetic coupling constant, which are known to exquisite precisions of parts per billion and parts per ten billion respectively. But there is always room for improvement in the precision with which these parameters are known.

The most important to pin down more precisely from the perspective of a high energy physicist are: (1) the top quark mass and Higgs boson mass, which despite their high 0.2% and 0.1% respective relative precisions matter because their large absolute values mean small amounts of imprecision can have great practical impact, (2) the strong force coupling constant, which is known to roughly 1% precision and plays a central role in every quantum chromodynamics (QCD), i.e. strong force, calculation, (3) the first generation quark masses, which are known to 5-7% precision and are present in the hadrons most commonly found in Nature, and (4) three of the four CKM matrix parameters, which are known to 1-4% precision, are used very regularly, and have theoretical importance. The tau lepton's mass is known to a precision of one part per 14,800, which, while already considerable, is far less than that of the electron and muon. It is notable as a way to test Koide's rule, which predicts it to high precision and has been confirmed so far.
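As a rough illustration of how Koide's rule predicts the tau mass, the sketch below solves the relation (m_e + m_μ + m_τ) / (√m_e + √m_μ + √m_τ)^2 = 2/3 for m_τ. The electron and muon masses used are assumed inputs (approximate current recommended values in MeV, not taken from this post), and the function names are made up for the example.

```python
import math

# Assumed inputs (MeV/c^2): approximate recommended values, not from this post.
M_ELECTRON_MEV = 0.51099895
M_MUON_MEV = 105.6583755


def koide_ratio(m1: float, m2: float, m3: float) -> float:
    """Koide's ratio Q = (sum of masses) / (sum of square roots of masses)^2."""
    root_sum = math.sqrt(m1) + math.sqrt(m2) + math.sqrt(m3)
    return (m1 + m2 + m3) / root_sum**2


def koide_tau_mass(m_e: float, m_mu: float) -> float:
    """Solve Q = 2/3 for the third mass, given the first two.

    With a = sqrt(m_e), b = sqrt(m_mu), x = sqrt(m_tau), Q = 2/3 becomes
    x^2 - 4(a + b)x + 3(a^2 + b^2) - 2(a + b)^2 = 0.
    The larger root is the physically relevant one for the tau.
    """
    a, b = math.sqrt(m_e), math.sqrt(m_mu)
    x = 2.0 * (a + b) + math.sqrt(3.0 * (a * a + 4.0 * a * b + b * b))
    return x * x


predicted_tau = koide_tau_mass(M_ELECTRON_MEV, M_MUON_MEV)
print(f"Koide prediction for the tau mass: {predicted_tau:.2f} MeV")
print(f"Q with predicted mass: {koide_ratio(M_ELECTRON_MEV, M_MUON_MEV, predicted_tau):.6f}")
```

With these inputs the prediction comes out near 1776.97 MeV, which can be compared with the Belle II measurement of 1777.09±0.08±0.11 MeV quoted earlier.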

Breakthroughs in the precision of Higgs boson mass measurements are likely in the near future. Improvements in the other measurements are likely to be slower and incremental.

Meanwhile, all seven of the neutrino physics parameters need much more precise measurement. The absolute neutrino masses can be estimated only to a precision of 16% to 100% of their best fit values, and only by relying on very indirect cosmology estimates, and the PMNS matrix parameters are known only to about 2-15% precision. Significant progress in these measurements is likely in a time frame of about a decade. Neutrino physicists are also continuing to search for neutrinoless double beta decay, which would tell us something critical about the fundamental nature of neutrinos if it were observed. Improvements in the sensitivity of these searches by a factor of ten or so should either prove that it exists and with what frequency, or rule out the most straightforward theoretical ways for it to happen, and some progress is likely over the next decade or so. Knowing the absolute neutrino masses more precisely would allow for more precise predictions about how frequently neutrinoless double beta decay should occur if it exists in some fairly simple model of it.

Progress in these measurements is also necessary to confirm that proposed explanations for the relationships between Standard Model physical constants are more than just coincidences facilitated by imprecise measurements.

Progress in neutrino physics measurements, meanwhile, has the potential to clarify possible mechanisms by which neutrino masses arise, which the Standard Model with massive oscillating neutrinos currently describes only phenomenologically.

Looking For Predicted But Unobserved Phenomena

There are maybe a dozen or two ground state two valence quark mesons and three valence quark baryons that are predicted to exist, but to be rare and only form in fairly high energy collider environments, that have not yet been observed, although a few new hadrons which are predicted to exist seem to be detected every year. Dozens more excited hadrons, and in theory an infinite number of them, likewise remain to be seen.

Free glueballs, i.e. hadrons made up of gluons without valence quarks, have not yet been observed, but are predicted to be possible in QCD.

Sphaleron interactions, in which baryon number (B) and lepton number (L) are not separately conserved even though B-L is conserved, are predicted to exist at energies about a hundred times greater than those of the LHC, but have not been observed.

A complete failure to find free glueballs or sphaleron interactions where they are theoretically predicted to be present would seem to require slight tweaks to the Standard Model.

There are observed true tetraquarks, pentaquarks, and maybe even hexaquarks, but far more are theoretically possible. We also have a dim understanding of hadron molecules made up of typical two valence quark mesons and three valence quark baryons bound by electromagnetism and the residual strong force, which can be tricky to distinguish from true tetraquarks, pentaquarks and hexaquarks.

The nice aspect of all of these searches is that theory provides a very focused target with very well defined properties to look for. In each of these cases, we know rather precisely the masses and other properties of the unobserved composite particles and the unobserved interaction, and the circumstances under which we can expect to have a chance of seeing them.

First Principles Calculations

Many quantities that, in principle, can be calculated from theory and the measured Standard Model parameters, are in practice determined by experimental measurements.

This includes all of the hadron properties, such as their masses, mean lifetimes, decay branching fractions, and "parton distribution functions" a.k.a. PDFs.

The residual strong force that binds protons and neutrons in atomic nuclei has been determined to fair precision in a manner informed by the Standard Model, but not worked out from first principles.

In both hadronic physics and atomic physics, it hasn't yet been definitively established theoretically whether there are "islands of stability" more massive than the heaviest hadrons and atomic isotopes observed so far. In the same vein, we have not calculated theoretically the largest number of valence quarks that is possible in a strong force bound hadron.

The internal structure of maybe half a dozen or a dozen even parity scalar bosons and axial vector bosons has not yet been worked out.

If we could use first principles calculations to "reverse engineer" observed data to obtain the physical constants necessary to produce the observed particles, this could really move forward the first item on the agenda, which is to measure the fundamental constants. It could also confirm what we already strongly believe, which is that all of the physics necessary to explain, for example, chemistry scale phenomena from the Standard Model, is already known.

Quantum computing, which is just around the corner, could make these problems, which we have known in theory how to calculate for decades but which have been mathematically intractable to compute, finally possible to solve.